Deck 18: Professional Data Engineer on Google Cloud Platform

Question

Question

Question

Question

Question

Question

Question

Question

Question

Question

Question

Question

Question

Question

Question

Question

Question

Question

Question

Question

Question

Question

Question

Question

Question

Question

Question

Question

Question

Question

Question

Question

Question

Question

Question

Question

Question

Question

Question

Question

Question

Question

Question

Question

Question

Question

Question

Question

Question

Question

Question

Question

Question

Question

Question

Question

Question

Question

Question

Question

Question

Question

Question

Question

Question

Question

Question

Question

Question

Question

Question

Question

Question

Question

Question

Question

Question

Question

Question

Question

Unlock Deck

Sign up to unlock the cards in this deck!

Unlock Deck

Unlock Deck

1/256

Play

Full screen (f)

Deck 18: Professional Data Engineer on Google Cloud Platform

1

MJTelco Case Study Company Overview MJTelco is a startup that plans to build networks in rapidly growing, underserved markets around the world. The company has patents for innovative optical communications hardware. Based on these patents, they can create many reliable, high-speed backbone links with inexpensive hardware. Company Background Founded by experienced telecom executives, MJTelco uses technologies originally developed to overcome communications challenges in space. Fundamental to their operation, they need to create a distributed data infrastructure that drives real-time analysis and incorporates machine learning to continuously optimize their topologies. Because their hardware is inexpensive, they plan to overdeploy the network allowing them to account for the impact of dynamic regional politics on location availability and cost. Their management and operations teams are situated all around the globe creating many-to-many relationship between data consumers and provides in their system. After careful consideration, they decided public cloud is the perfect environment to support their needs. Solution Concept MJTelco is running a successful proof-of-concept (PoC) project in its labs. They have two primary needs: Scale and harden their PoC to support significantly more data flows generated when they ramp to more than 50,000 installations. Refine their machine-learning cycles to verify and improve the dynamic models they use to control topology definition. MJTelco will also use three separate operating environments - development/test, staging, and production - to meet the needs of running experiments, deploying new features, and serving production customers. Business Requirements Scale up their production environment with minimal cost, instantiating resources when and where needed in an unpredictable, distributed telecom user community. Ensure security of their proprietary data to protect their leading-edge machine learning and analysis. Provide reliable and timely access to data for analysis from distributed research workers Maintain isolated environments that support rapid iteration of their machine-learning models without affecting their customers. Technical Requirements Ensure secure and efficient transport and storage of telemetry data Rapidly scale instances to support between 10,000 and 100,000 data providers with multiple flows each. Allow analysis and presentation against data tables tracking up to 2 years of data storing approximately 100m records/day Support rapid iteration of monitoring infrastructure focused on awareness of data pipeline problems both in telemetry flows and in production learning cycles. CEO Statement Our business model relies on our patents, analytics and dynamic machine learning. Our inexpensive hardware is organized to be highly reliable, which gives us cost advantages. We need to quickly stabilize our large distributed data pipelines to meet our reliability and capacity commitments. CTO Statement Our public cloud services must operate as advertised. We need resources that scale and keep our data secure. We also need environments in which our data scientists can carefully study and quickly adapt our models. Because we rely on automation to process our data, we also need our development and test environments to work as we iterate. CFO Statement The project is too large for us to maintain the hardware and software required for the data and analysis. Also, we cannot afford to staff an operations team to monitor so many data feeds, so we will rely on automation and infrastructure. Google Cloud's machine learning will allow our quantitative researchers to work on our high-value problems instead of problems with our data pipelines. MJTelco's Google Cloud Dataflow pipeline is now ready to start receiving data from the 50,000 installations. You want to allow Cloud Dataflow to scale its compute power up as required. Which Cloud Dataflow pipeline configuration setting should you update?

A) The zone

B) The number of workers

C) The disk size per worker

D) The maximum number of workers

A) The zone

B) The number of workers

C) The disk size per worker

D) The maximum number of workers

The zone

2

Your company is streaming real-time sensor data from their factory floor into Bigtable and they have noticed extremely poor performance. How should the row key be redesigned to improve Bigtable performance on queries that populate real-time dashboards?

A) Use a row key of the form. Use a row key of the form .

B) Use a row key of the form. .

C) Use a row key of the form#. #.

D) Use a row key of the form >##. >##.

A) Use a row key of the form

B) Use a row key of the form

C) Use a row key of the form

D) Use a row key of the form >#

Use a row key of the form . Use a row key of the form .

3

You are designing a basket abandonment system for an ecommerce company. The system will send a message to a user based on these rules: No interaction by the user on the site for 1 hour Has added more than $30 worth of products to the basket Has not completed a transaction You use Google Cloud Dataflow to process the data and decide if a message should be sent. How should you design the pipeline?

A) Use a fixed-time window with a duration of 60 minutes.

B) Use a sliding time window with a duration of 60 minutes.

C) Use a session window with a gap time duration of 60 minutes.

D) Use a global window with a time based trigger with a delay of 60 minutes.

A) Use a fixed-time window with a duration of 60 minutes.

B) Use a sliding time window with a duration of 60 minutes.

C) Use a session window with a gap time duration of 60 minutes.

D) Use a global window with a time based trigger with a delay of 60 minutes.

Use a global window with a time based trigger with a delay of 60 minutes.

4

Your company uses a proprietary system to send inventory data every 6 hours to a data ingestion service in the cloud. Transmitted data includes a payload of several fields and the timestamp of the transmission. If there are any concerns about a transmission, the system re-transmits the data. How should you deduplicate the data most efficiency?

A) Assign global unique identifiers (GUID) to each data entry.

B) Compute the hash value of each data entry, and compare it with all historical data.

C) Store each data entry as the primary key in a separate database and apply an index.

D) Maintain a database table to store the hash value and other metadata for each data entry.

A) Assign global unique identifiers (GUID) to each data entry.

B) Compute the hash value of each data entry, and compare it with all historical data.

C) Store each data entry as the primary key in a separate database and apply an index.

D) Maintain a database table to store the hash value and other metadata for each data entry.

Unlock Deck

Unlock for access to all 256 flashcards in this deck.

Unlock Deck

k this deck

5

Your company's customer and order databases are often under heavy load. This makes performing analytics against them difficult without harming operations. The databases are in a MySQL cluster, with nightly backups taken using mysqldump. You want to perform analytics with minimal impact on operations. What should you do?

A) Add a node to the MySQL cluster and build an OLAP cube there.

B) Use an ETL tool to load the data from MySQL into Google BigQuery.

C) Connect an on-premises Apache Hadoop cluster to MySQL and perform ETL.

D) Mount the backups to Google Cloud SQL, and then process the data using Google Cloud Dataproc.

A) Add a node to the MySQL cluster and build an OLAP cube there.

B) Use an ETL tool to load the data from MySQL into Google BigQuery.

C) Connect an on-premises Apache Hadoop cluster to MySQL and perform ETL.

D) Mount the backups to Google Cloud SQL, and then process the data using Google Cloud Dataproc.

Unlock Deck

Unlock for access to all 256 flashcards in this deck.

Unlock Deck

k this deck

6

Your company is in a highly regulated industry. One of your requirements is to ensure individual users have access only to the minimum amount of information required to do their jobs. You want to enforce this requirement with Google BigQuery. Which three approaches can you take? (Choose three.)

A) Disable writes to certain tables.

B) Restrict access to tables by role.

C) Ensure that the data is encrypted at all times.

D) Restrict BigQuery API access to approved users.

E) Segregate data across multiple tables or databases.

F) Use Google Stackdriver Audit Logging to determine policy violations.

A) Disable writes to certain tables.

B) Restrict access to tables by role.

C) Ensure that the data is encrypted at all times.

D) Restrict BigQuery API access to approved users.

E) Segregate data across multiple tables or databases.

F) Use Google Stackdriver Audit Logging to determine policy violations.

Unlock Deck

Unlock for access to all 256 flashcards in this deck.

Unlock Deck

k this deck

7

You work for a car manufacturer and have set up a data pipeline using Google Cloud Pub/Sub to capture anomalous sensor events. You are using a push subscription in Cloud Pub/Sub that calls a custom HTTPS endpoint that you have created to take action of these anomalous events as they occur. Your custom HTTPS endpoint keeps getting an inordinate amount of duplicate messages. What is the most likely cause of these duplicate messages?

A) The message body for the sensor event is too large.

B) Your custom endpoint has an out-of-date SSL certificate.

C) The Cloud Pub/Sub topic has too many messages published to it.

D) Your custom endpoint is not acknowledging messages within the acknowledgement deadline.

A) The message body for the sensor event is too large.

B) Your custom endpoint has an out-of-date SSL certificate.

C) The Cloud Pub/Sub topic has too many messages published to it.

D) Your custom endpoint is not acknowledging messages within the acknowledgement deadline.

Unlock Deck

Unlock for access to all 256 flashcards in this deck.

Unlock Deck

k this deck

8

Business owners at your company have given you a database of bank transactions. Each row contains the user ID, transaction type, transaction location, and transaction amount. They ask you to investigate what type of machine learning can be applied to the data. Which three machine learning applications can you use? (Choose three.)

A) Supervised learning to determine which transactions are most likely to be fraudulent.

B) Unsupervised learning to determine which transactions are most likely to be fraudulent.

C) Clustering to divide the transactions into N categories based on feature similarity.

D) Supervised learning to predict the location of a transaction.

E) Reinforcement learning to predict the location of a transaction.

F) Unsupervised learning to predict the location of a transaction.

A) Supervised learning to determine which transactions are most likely to be fraudulent.

B) Unsupervised learning to determine which transactions are most likely to be fraudulent.

C) Clustering to divide the transactions into N categories based on feature similarity.

D) Supervised learning to predict the location of a transaction.

E) Reinforcement learning to predict the location of a transaction.

F) Unsupervised learning to predict the location of a transaction.

Unlock Deck

Unlock for access to all 256 flashcards in this deck.

Unlock Deck

k this deck

9

You are working on a sensitive project involving private user data. You have set up a project on Google Cloud Platform to house your work internally. An external consultant is going to assist with coding a complex transformation in a Google Cloud Dataflow pipeline for your project. How should you maintain users' privacy?

A) Grant the consultant the Viewer role on the project.

B) Grant the consultant the Cloud Dataflow Developer role on the project.

C) Create a service account and allow the consultant to log on with it.

D) Create an anonymized sample of the data for the consultant to work with in a different project.

A) Grant the consultant the Viewer role on the project.

B) Grant the consultant the Cloud Dataflow Developer role on the project.

C) Create a service account and allow the consultant to log on with it.

D) Create an anonymized sample of the data for the consultant to work with in a different project.

Unlock Deck

Unlock for access to all 256 flashcards in this deck.

Unlock Deck

k this deck

10

Your company is migrating their 30-node Apache Hadoop cluster to the cloud. They want to re-use Hadoop jobs they have already created and minimize the management of the cluster as much as possible. They also want to be able to persist data beyond the life of the cluster. What should you do?

A) Create a Google Cloud Dataflow job to process the data.

B) Create a Google Cloud Dataproc cluster that uses persistent disks for HDFS.

C) Create a Hadoop cluster on Google Compute Engine that uses persistent disks.

D) Create a Cloud Dataproc cluster that uses the Google Cloud Storage connector.

E) Create a Hadoop cluster on Google Compute Engine that uses Local SSD disks.

A) Create a Google Cloud Dataflow job to process the data.

B) Create a Google Cloud Dataproc cluster that uses persistent disks for HDFS.

C) Create a Hadoop cluster on Google Compute Engine that uses persistent disks.

D) Create a Cloud Dataproc cluster that uses the Google Cloud Storage connector.

E) Create a Hadoop cluster on Google Compute Engine that uses Local SSD disks.

Unlock Deck

Unlock for access to all 256 flashcards in this deck.

Unlock Deck

k this deck

11

You have spent a few days loading data from comma-separated values (CSV) files into the Google BigQuery table CLICK_STREAM . The column DT stores the epoch time of click events. For convenience, you chose a simple schema where every field is treated as the STRING type. Now, you want to compute web session durations of users who visit your site, and you want to change its data type to the TIMESTAMP . You want to minimize the migration effort without making future queries computationally expensive. What should you do?

A) Delete the table CLICK_STREAM , and then re-create it such that the column DT is of the TIMESTAMP type. Reload the data. Delete the table , and then re-create it such that the column is of the type. Reload the data.

B) Add a column TS of the TIMESTAMP type to the table CLICK_STREAM , and populate the numeric values from the column TS for each row. Reference the column TS instead of the column DT from now on. Add a column TS of the type to the table , and populate the numeric values from the column for each row. Reference the column instead of the column from now on.

C) Create a view CLICK _ STREAM _ V , where strings from the column DT are cast into TIMESTAMP values. Reference the view CLICK_STREAM_V instead of the table CLICK_STREAM from now on. Create a view CLICK _ STREAM V , where strings from the column are cast into values. Reference the view CLICK_STREAM_V instead of the table

D) Add two columns to the table CLICK STREAM: TS of the TIMESTAMP type and IS_NEW of the BOOLEAN type. Reload all data in append mode. For each appended row, set the value of IS_NEW to true. For future queries, reference the column TS instead of the column DT , with the WHERE clause ensuring that the value of IS_NEW must be true. Add two columns to the table CLICK STREAM: TS type and IS_NEW BOOLEAN type. Reload all data in append mode. For each appended row, set the value of to true. For future queries, reference the column , with the WHERE clause ensuring that the value of must be true.

E) Construct a query to return every row of the table CLICK_STREAM , while using the built-in function to cast strings from the column DT into TIMESTAMP values. Run the query into a destination table NEW_CLICK_STREAM , in which the column TS is the TIMESTAMP type. Reference the table NEW_CLICK_STREAM instead of the table CLICK_STREAM from now on. In the future, new data is loaded into the table NEW_CLICK_STREAM . Construct a query to return every row of the table , while using the built-in function to cast strings from the column into values. Run the query into a destination table NEW_CLICK_STREAM , in which the column is the type. Reference the table from now on. In the future, new data is loaded into the table .

A) Delete the table CLICK_STREAM , and then re-create it such that the column DT is of the TIMESTAMP type. Reload the data. Delete the table , and then re-create it such that the column is of the type. Reload the data.

B) Add a column TS of the TIMESTAMP type to the table CLICK_STREAM , and populate the numeric values from the column TS for each row. Reference the column TS instead of the column DT from now on. Add a column TS of the type to the table , and populate the numeric values from the column for each row. Reference the column instead of the column from now on.

C) Create a view CLICK _ STREAM _ V , where strings from the column DT are cast into TIMESTAMP values. Reference the view CLICK_STREAM_V instead of the table CLICK_STREAM from now on. Create a view CLICK _ STREAM V , where strings from the column are cast into values. Reference the view CLICK_STREAM_V instead of the table

D) Add two columns to the table CLICK STREAM: TS of the TIMESTAMP type and IS_NEW of the BOOLEAN type. Reload all data in append mode. For each appended row, set the value of IS_NEW to true. For future queries, reference the column TS instead of the column DT , with the WHERE clause ensuring that the value of IS_NEW must be true. Add two columns to the table CLICK STREAM: TS type and IS_NEW BOOLEAN type. Reload all data in append mode. For each appended row, set the value of to true. For future queries, reference the column , with the WHERE clause ensuring that the value of must be true.

E) Construct a query to return every row of the table CLICK_STREAM , while using the built-in function to cast strings from the column DT into TIMESTAMP values. Run the query into a destination table NEW_CLICK_STREAM , in which the column TS is the TIMESTAMP type. Reference the table NEW_CLICK_STREAM instead of the table CLICK_STREAM from now on. In the future, new data is loaded into the table NEW_CLICK_STREAM . Construct a query to return every row of the table , while using the built-in function to cast strings from the column into values. Run the query into a destination table NEW_CLICK_STREAM , in which the column is the type. Reference the table from now on. In the future, new data is loaded into the table .

Unlock Deck

Unlock for access to all 256 flashcards in this deck.

Unlock Deck

k this deck

12

Flowlogistic Case Study Company Overview Flowlogistic is a leading logistics and supply chain provider. They help businesses throughout the world manage their resources and transport them to their final destination. The company has grown rapidly, expanding their offerings to include rail, truck, aircraft, and oceanic shipping. Company Background The company started as a regional trucking company, and then expanded into other logistics market. Because they have not updated their infrastructure, managing and tracking orders and shipments has become a bottleneck. To improve operations, Flowlogistic developed proprietary technology for tracking shipments in real time at the parcel level. However, they are unable to deploy it because their technology stack, based on Apache Kafka, cannot support the processing volume. In addition, Flowlogistic wants to further analyze their orders and shipments to determine how best to deploy their resources. Solution Concept Flowlogistic wants to implement two concepts using the cloud: Use their proprietary technology in a real-time inventory-tracking system that indicates the location of their loads Perform analytics on all their orders and shipment logs, which contain both structured and unstructured data, to determine how best to deploy resources, which markets to expand info. They also want to use predictive analytics to learn earlier when a shipment will be delayed. Existing Technical Environment Flowlogistic architecture resides in a single data center: Databases 8 physical servers in 2 clusters - SQL Server - user data, inventory, static data 3 physical servers - Cassandra - metadata, tracking messages 10 Kafka servers - tracking message aggregation and batch insert Application servers - customer front end, middleware for order/customs 60 virtual machines across 20 physical servers - Tomcat - Java services - Nginx - static content - Batch servers Storage appliances - iSCSI for virtual machine (VM) hosts - Fibre Channel storage area network (FC SAN) - SQL server storage - Network-attached storage (NAS) image storage, logs, backups 10 Apache Hadoop /Spark servers - Core Data Lake - Data analysis workloads 20 miscellaneous servers - Jenkins, monitoring, bastion hosts, Business Requirements Build a reliable and reproducible environment with scaled panty of production. Aggregate data in a centralized Data Lake for analysis Use historical data to perform predictive analytics on future shipments Accurately track every shipment worldwide using proprietary technology Improve business agility and speed of innovation through rapid provisioning of new resources Analyze and optimize architecture for performance in the cloud Migrate fully to the cloud if all other requirements are met Technical Requirements Handle both streaming and batch data Migrate existing Hadoop workloads Ensure architecture is scalable and elastic to meet the changing demands of the company. Use managed services whenever possible Encrypt data flight and at rest Connect a VPN between the production data center and cloud environment SEO Statement We have grown so quickly that our inability to upgrade our infrastructure is really hampering further growth and efficiency. We are efficient at moving shipments around the world, but we are inefficient at moving data around. We need to organize our information so we can more easily understand where our customers are and what they are shipping. CTO Statement IT has never been a priority for us, so as our data has grown, we have not invested enough in our technology. I have a good staff to manage IT, but they are so busy managing our infrastructure that I cannot get them to do the things that really matter, such as organizing our data, building the analytics, and figuring out how to implement the CFO' s tracking technology. CFO Statement Part of our competitive advantage is that we penalize ourselves for late shipments and deliveries. Knowing where out shipments are at all times has a direct correlation to our bottom line and profitability. Additionally, I don't want to commit capital to building out a server environment. Flowlogistic's CEO wants to gain rapid insight into their customer base so his sales team can be better informed in the field. This team is not very technical, so they've purchased a visualization tool to simplify the creation of BigQuery reports. However, they've been overwhelmed by all the data in the table, and are spending a lot of money on queries trying to find the data they need. You want to solve their problem in the most cost-effective way. What should you do?

A) Export the data into a Google Sheet for virtualization.

B) Create an additional table with only the necessary columns.

C) Create a view on the table to present to the virtualization tool.

D) Create identity and access management (IAM) roles on the appropriate columns, so only they appear in a query.

A) Export the data into a Google Sheet for virtualization.

B) Create an additional table with only the necessary columns.

C) Create a view on the table to present to the virtualization tool.

D) Create identity and access management (IAM) roles on the appropriate columns, so only they appear in a query.

Unlock Deck

Unlock for access to all 256 flashcards in this deck.

Unlock Deck

k this deck

13

Your company is performing data preprocessing for a learning algorithm in Google Cloud Dataflow. Numerous data logs are being are being generated during this step, and the team wants to analyze them. Due to the dynamic nature of the campaign, the data is growing exponentially every hour. The data scientists have written the following code to read the data for a new key features in the logs. BigQueryIO.Read .named("ReadLogData") .from("clouddataflow-readonly:samples.log_data") You want to improve the performance of this data read. What should you do?

A) Specify the TableReference object in the code. Specify the TableReference object in the code.

B) Use .fromQuery operation to read specific fields from the table. Use .fromQuery operation to read specific fields from the table.

C) Use of both the Google BigQuery TableSchema and TableFieldSchema classes. Use of both the Google BigQuery TableSchema and TableFieldSchema classes.

D) Call a transform that returns TableRow objects, where each element in the PCollection represents a single row in the table. Call a transform that returns TableRow objects, where each element in the PCollection represents a single row in the table.

A) Specify the TableReference object in the code. Specify the TableReference object in the code.

B) Use .fromQuery operation to read specific fields from the table. Use .fromQuery operation to read specific fields from the table.

C) Use of both the Google BigQuery TableSchema and TableFieldSchema classes. Use of both the Google BigQuery TableSchema and TableFieldSchema classes.

D) Call a transform that returns TableRow objects, where each element in the PCollection represents a single row in the table. Call a transform that returns TableRow objects, where each element in the PCollection represents a single row in the table.

Unlock Deck

Unlock for access to all 256 flashcards in this deck.

Unlock Deck

k this deck

14

You create an important report for your large team in Google Data Studio 360. The report uses Google BigQuery as its data source. You notice that visualizations are not showing data that is less than 1 hour old. What should you do?

A) Disable caching by editing the report settings.

B) Disable caching in BigQuery by editing table details.

C) Refresh your browser tab showing the visualizations.

D) Clear your browser history for the past hour then reload the tab showing the virtualizations.

A) Disable caching by editing the report settings.

B) Disable caching in BigQuery by editing table details.

C) Refresh your browser tab showing the visualizations.

D) Clear your browser history for the past hour then reload the tab showing the virtualizations.

Unlock Deck

Unlock for access to all 256 flashcards in this deck.

Unlock Deck

k this deck

15

Your company is running their first dynamic campaign, serving different offers by analyzing real-time data during the holiday season. The data scientists are collecting terabytes of data that rapidly grows every hour during their 30-day campaign. They are using Google Cloud Dataflow to preprocess the data and collect the feature (signals) data that is needed for the machine learning model in Google Cloud Bigtable. The team is observing suboptimal performance with reads and writes of their initial load of 10 TB of data. They want to improve this performance while minimizing cost. What should they do?

A) Redefine the schema by evenly distributing reads and writes across the row space of the table.

B) The performance issue should be resolved over time as the site of the BigDate cluster is increased.

C) Redesign the schema to use a single row key to identify values that need to be updated frequently in the cluster.

D) Redesign the schema to use row keys based on numeric IDs that increase sequentially per user viewing the offers.

A) Redefine the schema by evenly distributing reads and writes across the row space of the table.

B) The performance issue should be resolved over time as the site of the BigDate cluster is increased.

C) Redesign the schema to use a single row key to identify values that need to be updated frequently in the cluster.

D) Redesign the schema to use row keys based on numeric IDs that increase sequentially per user viewing the offers.

Unlock Deck

Unlock for access to all 256 flashcards in this deck.

Unlock Deck

k this deck

16

Your weather app queries a database every 15 minutes to get the current temperature. The frontend is powered by Google App Engine and server millions of users. How should you design the frontend to respond to a database failure?

A) Issue a command to restart the database servers.

B) Retry the query with exponential backoff, up to a cap of 15 minutes.

C) Retry the query every second until it comes back online to minimize staleness of data.

D) Reduce the query frequency to once every hour until the database comes back online.

A) Issue a command to restart the database servers.

B) Retry the query with exponential backoff, up to a cap of 15 minutes.

C) Retry the query every second until it comes back online to minimize staleness of data.

D) Reduce the query frequency to once every hour until the database comes back online.

Unlock Deck

Unlock for access to all 256 flashcards in this deck.

Unlock Deck

k this deck

17

MJTelco Case Study Company Overview MJTelco is a startup that plans to build networks in rapidly growing, underserved markets around the world. The company has patents for innovative optical communications hardware. Based on these patents, they can create many reliable, high-speed backbone links with inexpensive hardware. Company Background Founded by experienced telecom executives, MJTelco uses technologies originally developed to overcome communications challenges in space. Fundamental to their operation, they need to create a distributed data infrastructure that drives real-time analysis and incorporates machine learning to continuously optimize their topologies. Because their hardware is inexpensive, they plan to overdeploy the network allowing them to account for the impact of dynamic regional politics on location availability and cost. Their management and operations teams are situated all around the globe creating many-to-many relationship between data consumers and provides in their system. After careful consideration, they decided public cloud is the perfect environment to support their needs. Solution Concept MJTelco is running a successful proof-of-concept (PoC) project in its labs. They have two primary needs: Scale and harden their PoC to support significantly more data flows generated when they ramp to more than 50,000 installations. Refine their machine-learning cycles to verify and improve the dynamic models they use to control topology definition. MJTelco will also use three separate operating environments - development/test, staging, and production - to meet the needs of running experiments, deploying new features, and serving production customers. Business Requirements Scale up their production environment with minimal cost, instantiating resources when and where needed in an unpredictable, distributed telecom user community. Ensure security of their proprietary data to protect their leading-edge machine learning and analysis. Provide reliable and timely access to data for analysis from distributed research workers Maintain isolated environments that support rapid iteration of their machine-learning models without affecting their customers. Technical Requirements Ensure secure and efficient transport and storage of telemetry data Rapidly scale instances to support between 10,000 and 100,000 data providers with multiple flows each. Allow analysis and presentation against data tables tracking up to 2 years of data storing approximately 100m records/day Support rapid iteration of monitoring infrastructure focused on awareness of data pipeline problems both in telemetry flows and in production learning cycles. CEO Statement Our business model relies on our patents, analytics and dynamic machine learning. Our inexpensive hardware is organized to be highly reliable, which gives us cost advantages. We need to quickly stabilize our large distributed data pipelines to meet our reliability and capacity commitments. CTO Statement Our public cloud services must operate as advertised. We need resources that scale and keep our data secure. We also need environments in which our data scientists can carefully study and quickly adapt our models. Because we rely on automation to process our data, we also need our development and test environments to work as we iterate. CFO Statement The project is too large for us to maintain the hardware and software required for the data and analysis. Also, we cannot afford to staff an operations team to monitor so many data feeds, so we will rely on automation and infrastructure. Google Cloud's machine learning will allow our quantitative researchers to work on our high-value problems instead of problems with our data pipelines. You need to compose visualizations for operations teams with the following requirements: The report must include telemetry data from all 50,000 installations for the most resent 6 weeks (sampling once every minute). The report must not be more than 3 hours delayed from live data. The actionable report should only show suboptimal links. Most suboptimal links should be sorted to the top. Suboptimal links can be grouped and filtered by regional geography. User response time to load the report must be <5 seconds. Which approach meets the requirements?

A) Load the data into Google Sheets, use formulas to calculate a metric, and use filters/sorting to show only suboptimal links in a table.

B) Load the data into Google BigQuery tables, write Google Apps Script that queries the data, calculates the metric, and shows only suboptimal rows in a table in Google Sheets.

C) Load the data into Google Cloud Datastore tables, write a Google App Engine Application that queries all rows, applies a function to derive the metric, and then renders results in a table using the Google charts and visualization API.

D) Load the data into Google BigQuery tables, write a Google Data Studio 360 report that connects to your data, calculates a metric, and then uses a filter expression to show only suboptimal rows in a table.

A) Load the data into Google Sheets, use formulas to calculate a metric, and use filters/sorting to show only suboptimal links in a table.

B) Load the data into Google BigQuery tables, write Google Apps Script that queries the data, calculates the metric, and shows only suboptimal rows in a table in Google Sheets.

C) Load the data into Google Cloud Datastore tables, write a Google App Engine Application that queries all rows, applies a function to derive the metric, and then renders results in a table using the Google charts and visualization API.

D) Load the data into Google BigQuery tables, write a Google Data Studio 360 report that connects to your data, calculates a metric, and then uses a filter expression to show only suboptimal rows in a table.

Unlock Deck

Unlock for access to all 256 flashcards in this deck.

Unlock Deck

k this deck

18

Your company built a TensorFlow neutral-network model with a large number of neurons and layers. The model fits well for the training data. However, when tested against new data, it performs poorly. What method can you employ to address this?

A) Threading

B) Serialization

C) Dropout Methods

D) Dimensionality Reduction

A) Threading

B) Serialization

C) Dropout Methods

D) Dimensionality Reduction

Unlock Deck

Unlock for access to all 256 flashcards in this deck.

Unlock Deck

k this deck

19

You are building a model to make clothing recommendations. You know a user's fashion preference is likely to change over time, so you build a data pipeline to stream new data back to the model as it becomes available. How should you use this data to train the model?

A) Continuously retrain the model on just the new data.

B) Continuously retrain the model on a combination of existing data and the new data.

C) Train on the existing data while using the new data as your test set.

D) Train on the new data while using the existing data as your test set.

A) Continuously retrain the model on just the new data.

B) Continuously retrain the model on a combination of existing data and the new data.

C) Train on the existing data while using the new data as your test set.

D) Train on the new data while using the existing data as your test set.

Unlock Deck

Unlock for access to all 256 flashcards in this deck.

Unlock Deck

k this deck

20

You are creating a model to predict housing prices. Due to budget constraints, you must run it on a single resource-constrained virtual machine. Which learning algorithm should you use?

A) Linear regression

B) Logistic classification

C) Recurrent neural network

D) Feedforward neural network

A) Linear regression

B) Logistic classification

C) Recurrent neural network

D) Feedforward neural network

Unlock Deck

Unlock for access to all 256 flashcards in this deck.

Unlock Deck

k this deck

21

Your company is loading comma-separated values (CSV) files into Google BigQuery. The data is fully imported successfully; however, the imported data is not matching byte-to-byte to the source file. What is the most likely cause of this problem?

A) The CSV data loaded in BigQuery is not flagged as CSV.

B) The CSV data has invalid rows that were skipped on import.

C) The CSV data loaded in BigQuery is not using BigQuery's default encoding.

D) The CSV data has not gone through an ETL phase before loading into BigQuery.

A) The CSV data loaded in BigQuery is not flagged as CSV.

B) The CSV data has invalid rows that were skipped on import.

C) The CSV data loaded in BigQuery is not using BigQuery's default encoding.

D) The CSV data has not gone through an ETL phase before loading into BigQuery.

Unlock Deck

Unlock for access to all 256 flashcards in this deck.

Unlock Deck

k this deck

22

You are designing the database schema for a machine learning-based food ordering service that will predict what users want to eat. Here is some of the information you need to store: The user profile: What the user likes and doesn't like to eat The user account information: Name, address, preferred meal times The order information: When orders are made, from where, to whom The database will be used to store all the transactional data of the product. You want to optimize the data schema. Which Google Cloud Platform product should you use?

A) BigQuery

B) Cloud SQL

C) Cloud Bigtable

D) Cloud Datastore

A) BigQuery

B) Cloud SQL

C) Cloud Bigtable

D) Cloud Datastore

Unlock Deck

Unlock for access to all 256 flashcards in this deck.

Unlock Deck

k this deck

23

An organization maintains a Google BigQuery dataset that contains tables with user-level data. They want to expose aggregates of this data to other Google Cloud projects, while still controlling access to the user-level data. Additionally, they need to minimize their overall storage cost and ensure the analysis cost for other projects is assigned to those projects. What should they do?

A) Create and share an authorized view that provides the aggregate results.

B) Create and share a new dataset and view that provides the aggregate results.

C) Create and share a new dataset and table that contains the aggregate results.

D) Create dataViewer Identity and Access Management (IAM) roles on the dataset to enable sharing.

A) Create and share an authorized view that provides the aggregate results.

B) Create and share a new dataset and view that provides the aggregate results.

C) Create and share a new dataset and table that contains the aggregate results.

D) Create dataViewer Identity and Access Management (IAM) roles on the dataset to enable sharing.

Unlock Deck

Unlock for access to all 256 flashcards in this deck.

Unlock Deck

k this deck

24

Your company receives both batch- and stream-based event data. You want to process the data using Google Cloud Dataflow over a predictable time period. However, you realize that in some instances data can arrive late or out of order. How should you design your Cloud Dataflow pipeline to handle data that is late or out of order?

A) Set a single global window to capture all the data.

B) Set sliding windows to capture all the lagged data.

C) Use watermarks and timestamps to capture the lagged data.

D) Ensure every datasource type (stream or batch) has a timestamp, and use the timestamps to define the logic for lagged data.

A) Set a single global window to capture all the data.

B) Set sliding windows to capture all the lagged data.

C) Use watermarks and timestamps to capture the lagged data.

D) Ensure every datasource type (stream or batch) has a timestamp, and use the timestamps to define the logic for lagged data.

Unlock Deck

Unlock for access to all 256 flashcards in this deck.

Unlock Deck

k this deck

25

You are deploying a new storage system for your mobile application, which is a media streaming service. You decide the best fit is Google Cloud Datastore. You have entities with multiple properties, some of which can take on multiple values. For example, in the entity 'Movie' the property 'actors' and the property 'tags' have multiple values but the property 'date released' does not. A typical query would ask for all movies with actor= ordered by date _ released or all movies with tag=Comedy date_released. How should you avoid a combinatorial explosion in the number of indexes?

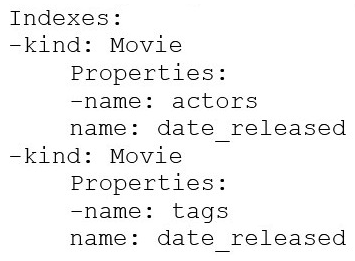

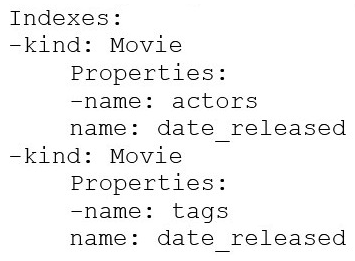

A) Manually configure the index in your index config as follows:

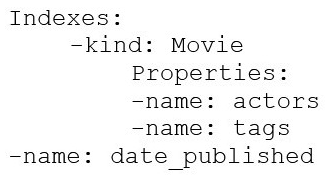

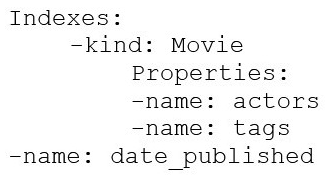

B)

C) Set the following in your entity options: exclude_from_indexes = 'actors, tags' Set the following in your entity options: exclude_from_indexes = 'actors, tags'

D) Set the following in your entity options: exclude_from_indexes = 'date_published' exclude_from_indexes = 'date_published'

A) Manually configure the index in your index config as follows:

B)

C) Set the following in your entity options: exclude_from_indexes = 'actors, tags' Set the following in your entity options: exclude_from_indexes = 'actors, tags'

D) Set the following in your entity options: exclude_from_indexes = 'date_published' exclude_from_indexes = 'date_published'

Unlock Deck

Unlock for access to all 256 flashcards in this deck.

Unlock Deck

k this deck

26

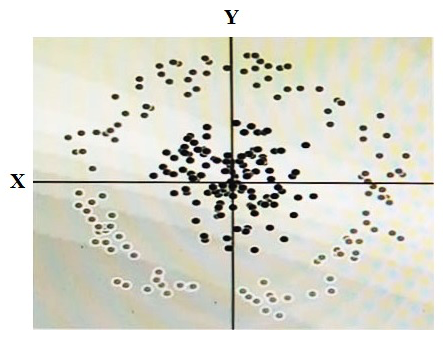

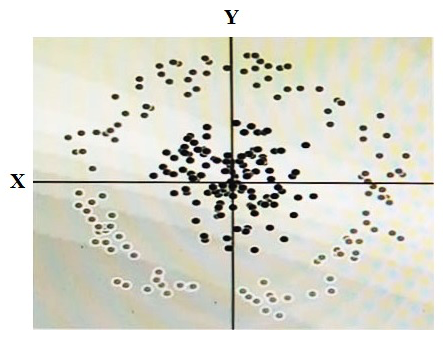

You have some data, which is shown in the graphic below. The two dimensions are X and Y, and the shade of each dot represents what class it is. You want to classify this data accurately using a linear algorithm. To do this you need to add a synthetic feature. What should the value of that feature be?

A) X^2+Y^2

B) X^2

C) Y^2

D) cos(X)

A) X^2+Y^2

B) X^2

C) Y^2

D) cos(X)

Unlock Deck

Unlock for access to all 256 flashcards in this deck.

Unlock Deck

k this deck

27

An online retailer has built their current application on Google App Engine. A new initiative at the company mandates that they extend their application to allow their customers to transact directly via the application. They need to manage their shopping transactions and analyze combined data from multiple datasets using a business intelligence (BI) tool. They want to use only a single database for this purpose. Which Google Cloud database should they choose?

A) BigQuery

B) Cloud SQL

C) Cloud BigTable

D) Cloud Datastore

A) BigQuery

B) Cloud SQL

C) Cloud BigTable

D) Cloud Datastore

Unlock Deck

Unlock for access to all 256 flashcards in this deck.

Unlock Deck

k this deck

28

Your company produces 20,000 files every hour. Each data file is formatted as a comma separated values (CSV) file that is less than 4 KB. All files must be ingested on Google Cloud Platform before they can be processed. Your company site has a 200 ms latency to Google Cloud, and your Internet connection bandwidth is limited as 50 Mbps. You currently deploy a secure FTP (SFTP) server on a virtual machine in Google Compute Engine as the data ingestion point. A local SFTP client runs on a dedicated machine to transmit the CSV files as is. The goal is to make reports with data from the previous day available to the executives by 10:00 a.m. each day. This design is barely able to keep up with the current volume, even though the bandwidth utilization is rather low. You are told that due to seasonality, your company expects the number of files to double for the next three months. Which two actions should you take? (Choose two.)

A) Introduce data compression for each file to increase the rate file of file transfer.

B) Contact your internet service provider (ISP) to increase your maximum bandwidth to at least 100 Mbps.

C) Redesign the data ingestion process to use gsutil tool to send the CSV files to a storage bucket in parallel.

D) Assemble 1,000 files into a tape archive (TAR) file. Transmit the TAR files instead, and disassemble the CSV files in the cloud upon receiving them.

E) Create an S3-compatible storage endpoint in your network, and use Google Cloud Storage Transfer Service to transfer on-premices data to the designated storage bucket.

A) Introduce data compression for each file to increase the rate file of file transfer.

B) Contact your internet service provider (ISP) to increase your maximum bandwidth to at least 100 Mbps.

C) Redesign the data ingestion process to use gsutil tool to send the CSV files to a storage bucket in parallel.

D) Assemble 1,000 files into a tape archive (TAR) file. Transmit the TAR files instead, and disassemble the CSV files in the cloud upon receiving them.

E) Create an S3-compatible storage endpoint in your network, and use Google Cloud Storage Transfer Service to transfer on-premices data to the designated storage bucket.

Unlock Deck

Unlock for access to all 256 flashcards in this deck.

Unlock Deck

k this deck

29

Your analytics team wants to build a simple statistical model to determine which customers are most likely to work with your company again, based on a few different metrics. They want to run the model on Apache Spark, using data housed in Google Cloud Storage, and you have recommended using Google Cloud Dataproc to execute this job. Testing has shown that this workload can run in approximately 30 minutes on a 15-node cluster, outputting the results into Google BigQuery. The plan is to run this workload weekly. How should you optimize the cluster for cost?

A) Migrate the workload to Google Cloud Dataflow

B) Use pre-emptible virtual machines (VMs) for the cluster

C) Use a higher-memory node so that the job runs faster

D) Use SSDs on the worker nodes so that the job can run faster

A) Migrate the workload to Google Cloud Dataflow

B) Use pre-emptible virtual machines (VMs) for the cluster

C) Use a higher-memory node so that the job runs faster

D) Use SSDs on the worker nodes so that the job can run faster

Unlock Deck

Unlock for access to all 256 flashcards in this deck.

Unlock Deck

k this deck

30

You have enabled the free integration between Firebase Analytics and Google BigQuery. Firebase now automatically creates a new table daily in BigQuery in the format app_events_YYYYMMDD. You want to query all of the tables for the past 30 days in legacy SQL. What should you do?

A) Use the TABLE_DATE_RANGE function Use the TABLE_DATE_RANGE function

B) Use the WHERE_PARTITIONTIME pseudo column WHERE_PARTITIONTIME pseudo column

C) Use WHERE date BETWEEN YYYY-MM-DD AND YYYY-MM-DD

D) Use SELECT IF.(date >= YYYY-MM-DD AND date <= YYYY-MM-DD

A) Use the TABLE_DATE_RANGE function Use the TABLE_DATE_RANGE function

B) Use the WHERE_PARTITIONTIME pseudo column WHERE_PARTITIONTIME pseudo column

C) Use WHERE date BETWEEN YYYY-MM-DD AND YYYY-MM-DD

D) Use SELECT IF.(date >= YYYY-MM-DD AND date <= YYYY-MM-DD

Unlock Deck

Unlock for access to all 256 flashcards in this deck.

Unlock Deck

k this deck

31

You are developing an application that uses a recommendation engine on Google Cloud. Your solution should display new videos to customers based on past views. Your solution needs to generate labels for the entities in videos that the customer has viewed. Your design must be able to provide very fast filtering suggestions based on data from other customer preferences on several TB of data. What should you do?

A) Build and train a complex classification model with Spark MLlib to generate labels and filter the results. Deploy the models using Cloud Dataproc. Call the model from your application.

B) Build and train a classification model with Spark MLlib to generate labels. Build and train a second classification model with Spark MLlib to filter results to match customer preferences. Deploy the models using Cloud Dataproc. Call the models from your application.

C) Build an application that calls the Cloud Video Intelligence API to generate labels. Store data in Cloud Bigtable, and filter the predicted labels to match the user's viewing history to generate preferences.

D) Build an application that calls the Cloud Video Intelligence API to generate labels. Store data in Cloud SQL, and join and filter the predicted labels to match the user's viewing history to generate preferences.

A) Build and train a complex classification model with Spark MLlib to generate labels and filter the results. Deploy the models using Cloud Dataproc. Call the model from your application.

B) Build and train a classification model with Spark MLlib to generate labels. Build and train a second classification model with Spark MLlib to filter results to match customer preferences. Deploy the models using Cloud Dataproc. Call the models from your application.

C) Build an application that calls the Cloud Video Intelligence API to generate labels. Store data in Cloud Bigtable, and filter the predicted labels to match the user's viewing history to generate preferences.

D) Build an application that calls the Cloud Video Intelligence API to generate labels. Store data in Cloud SQL, and join and filter the predicted labels to match the user's viewing history to generate preferences.

Unlock Deck

Unlock for access to all 256 flashcards in this deck.

Unlock Deck

k this deck

32

Your financial services company is moving to cloud technology and wants to store 50 TB of financial time-series data in the cloud. This data is updated frequently and new data will be streaming in all the time. Your company also wants to move their existing Apache Hadoop jobs to the cloud to get insights into this data. Which product should they use to store the data?

A) Cloud Bigtable

B) Google BigQuery

C) Google Cloud Storage

D) Google Cloud Datastore

A) Cloud Bigtable

B) Google BigQuery

C) Google Cloud Storage

D) Google Cloud Datastore

Unlock Deck

Unlock for access to all 256 flashcards in this deck.

Unlock Deck

k this deck

33

Your organization has been collecting and analyzing data in Google BigQuery for 6 months. The majority of the data analyzed is placed in a time-partitioned table named events_partitioned . To reduce the cost of queries, your organization created a view called events , which queries only the last 14 days of data. The view is described in legacy SQL. Next month, existing applications will be connecting to BigQuery to read the data via an ODBC connection. You need to ensure the applications can connect. Which two actions should you take? (Choose two.)

A) Create a new view over events using standard SQL

B) Create a new partitioned table using a standard SQL query

C) Create a new view over events_partitioned using standard SQL

D) Create a service account for the ODBC connection to use for authentication

E) Create a Google Cloud Identity and Access Management (Cloud IAM) role for the ODBC connection and shared "events"

A) Create a new view over events using standard SQL

B) Create a new partitioned table using a standard SQL query

C) Create a new view over events_partitioned using standard SQL

D) Create a service account for the ODBC connection to use for authentication

E) Create a Google Cloud Identity and Access Management (Cloud IAM) role for the ODBC connection and shared "events"

Unlock Deck

Unlock for access to all 256 flashcards in this deck.

Unlock Deck

k this deck

34

You are choosing a NoSQL database to handle telemetry data submitted from millions of Internet-of-Things (IoT) devices. The volume of data is growing at 100 TB per year, and each data entry has about 100 attributes. The data processing pipeline does not require atomicity, consistency, isolation, and durability (ACID). However, high availability and low latency are required. You need to analyze the data by querying against individual fields. Which three databases meet your requirements? (Choose three.)

A) Redis

B) HBase

C) MySQL

D) MongoDB

E) Cassandra

F) HDFS with Hive

A) Redis

B) HBase

C) MySQL

D) MongoDB

E) Cassandra

F) HDFS with Hive

Unlock Deck

Unlock for access to all 256 flashcards in this deck.

Unlock Deck

k this deck

35

Your company is currently setting up data pipelines for their campaign. For all the Google Cloud Pub/Sub streaming data, one of the important business requirements is to be able to periodically identify the inputs and their timings during their campaign. Engineers have decided to use windowing and transformation in Google Cloud Dataflow for this purpose. However, when testing this feature, they find that the Cloud Dataflow job fails for the all streaming insert. What is the most likely cause of this problem?

A) They have not assigned the timestamp, which causes the job to fail

B) They have not set the triggers to accommodate the data coming in late, which causes the job to fail

C) They have not applied a global windowing function, which causes the job to fail when the pipeline is created

D) They have not applied a non-global windowing function, which causes the job to fail when the pipeline is created

A) They have not assigned the timestamp, which causes the job to fail

B) They have not set the triggers to accommodate the data coming in late, which causes the job to fail

C) They have not applied a global windowing function, which causes the job to fail when the pipeline is created

D) They have not applied a non-global windowing function, which causes the job to fail when the pipeline is created

Unlock Deck

Unlock for access to all 256 flashcards in this deck.

Unlock Deck

k this deck

36

You work for a manufacturing plant that batches application log files together into a single log file once a day at 2:00 AM. You have written a Google Cloud Dataflow job to process that log file. You need to make sure the log file in processed once per day as inexpensively as possible. What should you do?

A) Change the processing job to use Google Cloud Dataproc instead.

B) Manually start the Cloud Dataflow job each morning when you get into the office.

C) Create a cron job with Google App Engine Cron Service to run the Cloud Dataflow job.

D) Configure the Cloud Dataflow job as a streaming job so that it processes the log data immediately.

A) Change the processing job to use Google Cloud Dataproc instead.

B) Manually start the Cloud Dataflow job each morning when you get into the office.

C) Create a cron job with Google App Engine Cron Service to run the Cloud Dataflow job.

D) Configure the Cloud Dataflow job as a streaming job so that it processes the log data immediately.

Unlock Deck

Unlock for access to all 256 flashcards in this deck.

Unlock Deck

k this deck

37

Your infrastructure includes a set of YouTube channels. You have been tasked with creating a process for sending the YouTube channel data to Google Cloud for analysis. You want to design a solution that allows your world-wide marketing teams to perform ANSI SQL and other types of analysis on up-to-date YouTube channels log data. How should you set up the log data transfer into Google Cloud?

A) Use Storage Transfer Service to transfer the offsite backup files to a Cloud Storage Multi-Regional storage bucket as a final destination.

B) Use Storage Transfer Service to transfer the offsite backup files to a Cloud Storage Regional bucket as a final destination.

C) Use BigQuery Data Transfer Service to transfer the offsite backup files to a Cloud Storage Multi-Regional storage bucket as a final destination.

D) Use BigQuery Data Transfer Service to transfer the offsite backup files to a Cloud Storage Regional storage bucket as a final destination.

A) Use Storage Transfer Service to transfer the offsite backup files to a Cloud Storage Multi-Regional storage bucket as a final destination.

B) Use Storage Transfer Service to transfer the offsite backup files to a Cloud Storage Regional bucket as a final destination.

C) Use BigQuery Data Transfer Service to transfer the offsite backup files to a Cloud Storage Multi-Regional storage bucket as a final destination.

D) Use BigQuery Data Transfer Service to transfer the offsite backup files to a Cloud Storage Regional storage bucket as a final destination.

Unlock Deck

Unlock for access to all 256 flashcards in this deck.

Unlock Deck

k this deck

38

You are implementing security best practices on your data pipeline. Currently, you are manually executing jobs as the Project Owner. You want to automate these jobs by taking nightly batch files containing non-public information from Google Cloud Storage, processing them with a Spark Scala job on a Google Cloud Dataproc cluster, and depositing the results into Google BigQuery. How should you securely run this workload?

A) Restrict the Google Cloud Storage bucket so only you can see the files

B) Grant the Project Owner role to a service account, and run the job with it

C) Use a service account with the ability to read the batch files and to write to BigQuery

D) Use a user account with the Project Viewer role on the Cloud Dataproc cluster to read the batch files and write to BigQuery

A) Restrict the Google Cloud Storage bucket so only you can see the files

B) Grant the Project Owner role to a service account, and run the job with it

C) Use a service account with the ability to read the batch files and to write to BigQuery

D) Use a user account with the Project Viewer role on the Cloud Dataproc cluster to read the batch files and write to BigQuery

Unlock Deck

Unlock for access to all 256 flashcards in this deck.

Unlock Deck

k this deck

39

Your company has recently grown rapidly and now ingesting data at a significantly higher rate than it was previously. You manage the daily batch MapReduce analytics jobs in Apache Hadoop. However, the recent increase in data has meant the batch jobs are falling behind. You were asked to recommend ways the development team could increase the responsiveness of the analytics without increasing costs. What should you recommend they do?

A) Rewrite the job in Pig.

B) Rewrite the job in Apache Spark.

C) Increase the size of the Hadoop cluster.

D) Decrease the size of the Hadoop cluster but also rewrite the job in Hive.

A) Rewrite the job in Pig.

B) Rewrite the job in Apache Spark.

C) Increase the size of the Hadoop cluster.

D) Decrease the size of the Hadoop cluster but also rewrite the job in Hive.

Unlock Deck

Unlock for access to all 256 flashcards in this deck.

Unlock Deck

k this deck

40

You are selecting services to write and transform JSON messages from Cloud Pub/Sub to BigQuery for a data pipeline on Google Cloud. You want to minimize service costs. You also want to monitor and accommodate input data volume that will vary in size with minimal manual intervention. What should you do?

A) Use Cloud Dataproc to run your transformations. Monitor CPU utilization for the cluster. Resize the number of worker nodes in your cluster via the command line.

B) Use Cloud Dataproc to run your transformations. Use the diagnose command to generate an operational output archive. Locate the bottleneck and adjust cluster resources. Use Cloud Dataproc to run your transformations. Use the diagnose command to generate an operational output archive. Locate the bottleneck and adjust cluster resources.

C) Use Cloud Dataflow to run your transformations. Monitor the job system lag with Stackdriver. Use the default autoscaling setting for worker instances.

D) Use Cloud Dataflow to run your transformations. Monitor the total execution time for a sampling of jobs. Configure the job to use non-default Compute Engine machine types when needed.

A) Use Cloud Dataproc to run your transformations. Monitor CPU utilization for the cluster. Resize the number of worker nodes in your cluster via the command line.

B) Use Cloud Dataproc to run your transformations. Use the diagnose command to generate an operational output archive. Locate the bottleneck and adjust cluster resources. Use Cloud Dataproc to run your transformations. Use the diagnose command to generate an operational output archive. Locate the bottleneck and adjust cluster resources.

C) Use Cloud Dataflow to run your transformations. Monitor the job system lag with Stackdriver. Use the default autoscaling setting for worker instances.

D) Use Cloud Dataflow to run your transformations. Monitor the total execution time for a sampling of jobs. Configure the job to use non-default Compute Engine machine types when needed.

Unlock Deck

Unlock for access to all 256 flashcards in this deck.

Unlock Deck

k this deck

41

MJTelco Case Study Company Overview MJTelco is a startup that plans to build networks in rapidly growing, underserved markets around the world. The company has patents for innovative optical communications hardware. Based on these patents, they can create many reliable, high-speed backbone links with inexpensive hardware. Company Background Founded by experienced telecom executives, MJTelco uses technologies originally developed to overcome communications challenges in space. Fundamental to their operation, they need to create a distributed data infrastructure that drives real-time analysis and incorporates machine learning to continuously optimize their topologies. Because their hardware is inexpensive, they plan to overdeploy the network allowing them to account for the impact of dynamic regional politics on location availability and cost. Their management and operations teams are situated all around the globe creating many-to-many relationship between data consumers and provides in their system. After careful consideration, they decided public cloud is the perfect environment to support their needs. Solution Concept MJTelco is running a successful proof-of-concept (PoC) project in its labs. They have two primary needs: Scale and harden their PoC to support significantly more data flows generated when they ramp to more than 50,000 installations. Refine their machine-learning cycles to verify and improve the dynamic models they use to control topology definition. MJTelco will also use three separate operating environments - development/test, staging, and production - to meet the needs of running experiments, deploying new features, and serving production customers. Business Requirements Scale up their production environment with minimal cost, instantiating resources when and where needed in an unpredictable, distributed telecom user community. Ensure security of their proprietary data to protect their leading-edge machine learning and analysis. Provide reliable and timely access to data for analysis from distributed research workers Maintain isolated environments that support rapid iteration of their machine-learning models without affecting their customers. Technical Requirements Ensure secure and efficient transport and storage of telemetry data Rapidly scale instances to support between 10,000 and 100,000 data providers with multiple flows each. Allow analysis and presentation against data tables tracking up to 2 years of data storing approximately 100m records/day Support rapid iteration of monitoring infrastructure focused on awareness of data pipeline problems both in telemetry flows and in production learning cycles. CEO Statement Our business model relies on our patents, analytics and dynamic machine learning. Our inexpensive hardware is organized to be highly reliable, which gives us cost advantages. We need to quickly stabilize our large distributed data pipelines to meet our reliability and capacity commitments. CTO Statement Our public cloud services must operate as advertised. We need resources that scale and keep our data secure. We also need environments in which our data scientists can carefully study and quickly adapt our models. Because we rely on automation to process our data, we also need our development and test environments to work as we iterate. CFO Statement The project is too large for us to maintain the hardware and software required for the data and analysis. Also, we cannot afford to staff an operations team to monitor so many data feeds, so we will rely on automation and infrastructure. Google Cloud's machine learning will allow our quantitative researchers to work on our high-value problems instead of problems with our data pipelines. You create a new report for your large team in Google Data Studio 360. The report uses Google BigQuery as its data source. It is company policy to ensure employees can view only the data associated with their region, so you create and populate a table for each region. You need to enforce the regional access policy to the data. Which two actions should you take? (Choose two.)

A) Ensure all the tables are included in global dataset.

B) Ensure each table is included in a dataset for a region.

C) Adjust the settings for each table to allow a related region-based security group view access.

D) Adjust the settings for each view to allow a related region-based security group view access.

E) Adjust the settings for each dataset to allow a related region-based security group view access.

A) Ensure all the tables are included in global dataset.

B) Ensure each table is included in a dataset for a region.

C) Adjust the settings for each table to allow a related region-based security group view access.

D) Adjust the settings for each view to allow a related region-based security group view access.

E) Adjust the settings for each dataset to allow a related region-based security group view access.

Unlock Deck

Unlock for access to all 256 flashcards in this deck.

Unlock Deck

k this deck

42

Your company has hired a new data scientist who wants to perform complicated analyses across very large datasets stored in Google Cloud Storage and in a Cassandra cluster on Google Compute Engine. The scientist primarily wants to create labelled data sets for machine learning projects, along with some visualization tasks. She reports that her laptop is not powerful enough to perform her tasks and it is slowing her down. You want to help her perform her tasks. What should you do?

A) Run a local version of Jupiter on the laptop.

B) Grant the user access to Google Cloud Shell.

C) Host a visualization tool on a VM on Google Compute Engine.

D) Deploy Google Cloud Datalab to a virtual machine (VM) on Google Compute Engine.

A) Run a local version of Jupiter on the laptop.

B) Grant the user access to Google Cloud Shell.

C) Host a visualization tool on a VM on Google Compute Engine.

D) Deploy Google Cloud Datalab to a virtual machine (VM) on Google Compute Engine.

Unlock Deck

Unlock for access to all 256 flashcards in this deck.

Unlock Deck

k this deck

43

Your company's on-premises Apache Hadoop servers are approaching end-of-life, and IT has decided to migrate the cluster to Google Cloud Dataproc. A like-for-like migration of the cluster would require 50 TB of Google Persistent Disk per node. The CIO is concerned about the cost of using that much block storage. You want to minimize the storage cost of the migration. What should you do?

A) Put the data into Google Cloud Storage.

B) Use preemptible virtual machines (VMs) for the Cloud Dataproc cluster.

C) Tune the Cloud Dataproc cluster so that there is just enough disk for all data.

D) Migrate some of the cold data into Google Cloud Storage, and keep only the hot data in Persistent Disk.

A) Put the data into Google Cloud Storage.

B) Use preemptible virtual machines (VMs) for the Cloud Dataproc cluster.

C) Tune the Cloud Dataproc cluster so that there is just enough disk for all data.

D) Migrate some of the cold data into Google Cloud Storage, and keep only the hot data in Persistent Disk.

Unlock Deck

Unlock for access to all 256 flashcards in this deck.

Unlock Deck

k this deck

44

Government regulations in your industry mandate that you have to maintain an auditable record of access to certain types of data. Assuming that all expiring logs will be archived correctly, where should you store data that is subject to that mandate?

A) Encrypted on Cloud Storage with user-supplied encryption keys. A separate decryption key will be given to each authorized user.

B) In a BigQuery dataset that is viewable only by authorized personnel, with the Data Access log used to provide the auditability.

C) In Cloud SQL, with separate database user names to each user. The Cloud SQL Admin activity logs will be used to provide the auditability.

D) In a bucket on Cloud Storage that is accessible only by an AppEngine service that collects user information and logs the access before providing a link to the bucket.

A) Encrypted on Cloud Storage with user-supplied encryption keys. A separate decryption key will be given to each authorized user.

B) In a BigQuery dataset that is viewable only by authorized personnel, with the Data Access log used to provide the auditability.

C) In Cloud SQL, with separate database user names to each user. The Cloud SQL Admin activity logs will be used to provide the auditability.

D) In a bucket on Cloud Storage that is accessible only by an AppEngine service that collects user information and logs the access before providing a link to the bucket.

Unlock Deck

Unlock for access to all 256 flashcards in this deck.

Unlock Deck

k this deck

45

Your neural network model is taking days to train. You want to increase the training speed. What can you do?

A) Subsample your test dataset.

B) Subsample your training dataset.

C) Increase the number of input features to your model.

D) Increase the number of layers in your neural network.

A) Subsample your test dataset.

B) Subsample your training dataset.

C) Increase the number of input features to your model.

D) Increase the number of layers in your neural network.

Unlock Deck

Unlock for access to all 256 flashcards in this deck.

Unlock Deck

k this deck

46