Deck 19: Professional Machine Learning Engineer

Question

Question

Question

Question

Question

Question

Question

Question

Question

Question

Question

Question

Question

Question

Question

Question

Question

Question

Question

Question

Question

Question

Question

Question

Question

Question

Question

Question

Question

Question

Question

Question

Question

Question

Question

Unlock Deck

Sign up to unlock the cards in this deck!

Unlock Deck

Unlock Deck

1/35

Play

Full screen (f)

Deck 19: Professional Machine Learning Engineer

1

You have trained a model on a dataset that required computationally expensive preprocessing operations. You need to execute the same preprocessing at prediction time. You deployed the model on AI Platform for high-throughput online prediction. Which architecture should you use?

A) Validate the accuracy of the model that you trained on preprocessed data. Create a new model that uses the raw data and is available in real time. Deploy the new model onto AI Platform for online prediction.

B) Send incoming prediction requests to a Pub/Sub topic. Transform the incoming data using a Dataflow job. Submit a prediction request to AI Platform using the transformed data. Write the predictions to an outbound Pub/Sub queue.

C) Stream incoming prediction request data into Cloud Spanner. Create a view to abstract your preprocessing logic. Query the view every second for new records.

D) Set up a Cloud Function that is triggered when messages are published to the Pub/Sub topic. Implement your preprocessing logic in the Cloud Function.

A) Validate the accuracy of the model that you trained on preprocessed data. Create a new model that uses the raw data and is available in real time. Deploy the new model onto AI Platform for online prediction.

B) Send incoming prediction requests to a Pub/Sub topic. Transform the incoming data using a Dataflow job. Submit a prediction request to AI Platform using the transformed data. Write the predictions to an outbound Pub/Sub queue.

C) Stream incoming prediction request data into Cloud Spanner. Create a view to abstract your preprocessing logic. Query the view every second for new records.

D) Set up a Cloud Function that is triggered when messages are published to the Pub/Sub topic. Implement your preprocessing logic in the Cloud Function.

Set up a Cloud Function that is triggered when messages are published to the Pub/Sub topic. Implement your preprocessing logic in the Cloud Function.

2

Your team trained and tested a DNN regression model with good results. Six months after deployment, the model is performing poorly due to a change in the distribution of the input data. How should you address the input differences in production?

A) Create alerts to monitor for skew, and retrain the model.

B) Perform feature selection on the model, and retrain the model with fewer features.

C) Retrain the model, and select an L2 regularization parameter with a hyperparameter tuning service.

D) Perform feature selection on the model, and retrain the model on a monthly basis with fewer features.

A) Create alerts to monitor for skew, and retrain the model.

B) Perform feature selection on the model, and retrain the model with fewer features.

C) Retrain the model, and select an L2 regularization parameter with a hyperparameter tuning service.

D) Perform feature selection on the model, and retrain the model on a monthly basis with fewer features.

Retrain the model, and select an L2 regularization parameter with a hyperparameter tuning service.

3

You have trained a text classification model in TensorFlow using AI Platform. You want to use the trained model for batch predictions on text data stored in BigQuery while minimizing computational overhead. What should you do?

A) Export the model to BigQuery ML.

B) Deploy and version the model on AI Platform.

C) Use Dataflow with the SavedModel to read the data from BigQuery.

D) Submit a batch prediction job on AI Platform that points to the model location in Cloud Storage.

A) Export the model to BigQuery ML.

B) Deploy and version the model on AI Platform.

C) Use Dataflow with the SavedModel to read the data from BigQuery.

D) Submit a batch prediction job on AI Platform that points to the model location in Cloud Storage.

Export the model to BigQuery ML.

4

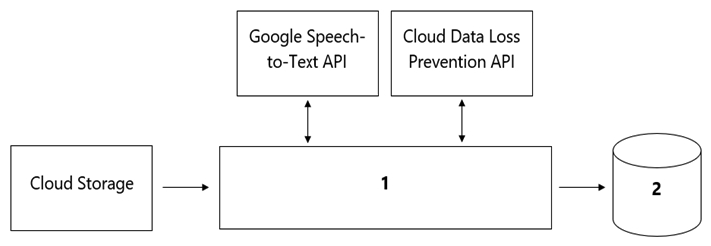

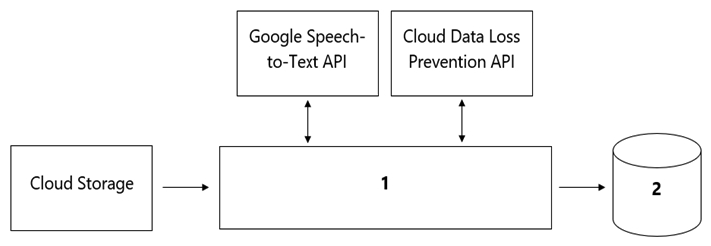

Your organization's call center has asked you to develop a model that analyzes customer sentiments in each call. The call center receives over one million calls daily, and data is stored in Cloud Storage. The data collected must not leave the region in which the call originated, and no Personally Identifiable Information (PII) can be stored or analyzed. The data science team has a third-party tool for visualization and access which requires a SQL ANSI-2011 compliant interface. You need to select components for data processing and for analytics. How should the data pipeline be designed?

A) 1= Dataflow, 2= BigQuery

B) 1 = Pub/Sub, 2= Datastore

C) 1 = Dataflow, 2 = Cloud SQL

D) 1 = Cloud Function, 2= Cloud SQL

A) 1= Dataflow, 2= BigQuery

B) 1 = Pub/Sub, 2= Datastore

C) 1 = Dataflow, 2 = Cloud SQL

D) 1 = Cloud Function, 2= Cloud SQL

Unlock Deck

Unlock for access to all 35 flashcards in this deck.

Unlock Deck

k this deck

5

You are training a Resnet model on AI Platform using TPUs to visually categorize types of defects in automobile engines. You capture the training profile using the Cloud TPU profiler plugin and observe that it is highly input-bound. You want to reduce the bottleneck and speed up your model training process. Which modifications should you make to the tf.data dataset? (Choose two.)

A) Use the interleave option for reading data.

B) Reduce the value of the repeat parameter.

C) Increase the buffer size for the shuttle option.

D) Set the prefetch option equal to the training batch size.

E) Decrease the batch size argument in your transformation.

A) Use the interleave option for reading data.

B) Reduce the value of the repeat parameter.

C) Increase the buffer size for the shuttle option.

D) Set the prefetch option equal to the training batch size.

E) Decrease the batch size argument in your transformation.

Unlock Deck

Unlock for access to all 35 flashcards in this deck.

Unlock Deck

k this deck

6

You work for a social media company. You need to detect whether posted images contain cars. Each training example is a member of exactly one class. You have trained an object detection neural network and deployed the model version to AI Platform Prediction for evaluation. Before deployment, you created an evaluation job and attached it to the AI Platform Prediction model version. You notice that the precision is lower than your business requirements allow. How should you adjust the model's final layer softmax threshold to increase precision?

A) Increase the recall.

B) Decrease the recall.

C) Increase the number of false positives.

D) Decrease the number of false negatives.

A) Increase the recall.

B) Decrease the recall.

C) Increase the number of false positives.

D) Decrease the number of false negatives.

Unlock Deck

Unlock for access to all 35 flashcards in this deck.

Unlock Deck

k this deck

7

You have trained a deep neural network model on Google Cloud. The model has low loss on the training data, but is performing worse on the validation data. You want the model to be resilient to overfitting. Which strategy should you use when retraining the model?

A) Apply a dropout parameter of 0.2, and decrease the learning rate by a factor of 10.

B) Apply a L2 regularization parameter of 0.4, and decrease the learning rate by a factor of 10.

C) Run a hyperparameter tuning job on AI Platform to optimize for the L2 regularization and dropout parameters.

D) Run a hyperparameter tuning job on AI Platform to optimize for the learning rate, and increase the number of neurons by a factor of 2.

A) Apply a dropout parameter of 0.2, and decrease the learning rate by a factor of 10.

B) Apply a L2 regularization parameter of 0.4, and decrease the learning rate by a factor of 10.

C) Run a hyperparameter tuning job on AI Platform to optimize for the L2 regularization and dropout parameters.

D) Run a hyperparameter tuning job on AI Platform to optimize for the learning rate, and increase the number of neurons by a factor of 2.

Unlock Deck

Unlock for access to all 35 flashcards in this deck.

Unlock Deck

k this deck

8

You have written unit tests for a Kubeflow Pipeline that require custom libraries. You want to automate the execution of unit tests with each new push to your development branch in Cloud Source Repositories. What should you do?

A) Write a script that sequentially performs the push to your development branch and executes the unit tests on Cloud Run.

B) Using Cloud Build, set an automated trigger to execute the unit tests when changes are pushed to your development branch.

C) Set up a Cloud Logging sink to a Pub/Sub topic that captures interactions with Cloud Source Repositories. Configure a Pub/Sub trigger for Cloud Run, and execute the unit tests on Cloud Run.

D) Set up a Cloud Logging sink to a Pub/Sub topic that captures interactions with Cloud Source Repositories. Execute the unit tests using a Cloud Function that is triggered when messages are sent to the Pub/Sub topic.

A) Write a script that sequentially performs the push to your development branch and executes the unit tests on Cloud Run.

B) Using Cloud Build, set an automated trigger to execute the unit tests when changes are pushed to your development branch.

C) Set up a Cloud Logging sink to a Pub/Sub topic that captures interactions with Cloud Source Repositories. Configure a Pub/Sub trigger for Cloud Run, and execute the unit tests on Cloud Run.

D) Set up a Cloud Logging sink to a Pub/Sub topic that captures interactions with Cloud Source Repositories. Execute the unit tests using a Cloud Function that is triggered when messages are sent to the Pub/Sub topic.

Unlock Deck

Unlock for access to all 35 flashcards in this deck.

Unlock Deck

k this deck

9

You developed an ML model with AI Platform, and you want to move it to production. You serve a few thousand queries per second and are experiencing latency issues. Incoming requests are served by a load balancer that distributes them across multiple Kubeflow CPU-only pods running on Google Kubernetes Engine (GKE). Your goal is to improve the serving latency without changing the underlying infrastructure. What should you do?

A) Significantly increase the max_batch_size TensorFlow Serving parameter. Significantly increase the max_batch_size TensorFlow Serving parameter.

B) Switch to the tensorflow-model-server-universal version of TensorFlow Serving.

C) Significantly increase the max_enqueued_batches TensorFlow Serving parameter. max_enqueued_batches

D) Recompile TensorFlow Serving using the source to support CPU-specific optimizations. Instruct GKE to choose an appropriate baseline minimum CPU platform for serving nodes.

A) Significantly increase the max_batch_size TensorFlow Serving parameter. Significantly increase the max_batch_size TensorFlow Serving parameter.

B) Switch to the tensorflow-model-server-universal version of TensorFlow Serving.

C) Significantly increase the max_enqueued_batches TensorFlow Serving parameter. max_enqueued_batches

D) Recompile TensorFlow Serving using the source to support CPU-specific optimizations. Instruct GKE to choose an appropriate baseline minimum CPU platform for serving nodes.

Unlock Deck

Unlock for access to all 35 flashcards in this deck.

Unlock Deck

k this deck

10

You have been asked to develop an input pipeline for an ML training model that processes images from disparate sources at a low latency. You discover that your input data does not fit in memory. How should you create a dataset following Google-recommended best practices?

A) Create a tf.data.Dataset.prefetch transformation. Create a tf.data.Dataset.prefetch transformation.

B) Convert the images to tf.Tensor objects, and then run Dataset.from_tensor_slices() . Convert the images to tf.Tensor objects, and then run Dataset.from_tensor_slices() .

C) Convert the images to tf.Tensor objects, and then run tf.data.Dataset.from_tensors() . tf.data.Dataset.from_tensors()

D) Convert the images into TFRecords, store the images in Cloud Storage, and then use the tf.data API to read the images for training. Convert the images into TFRecords, store the images in Cloud Storage, and then use the tf.data API to read the images for training.

A) Create a tf.data.Dataset.prefetch transformation. Create a tf.data.Dataset.prefetch transformation.

B) Convert the images to tf.Tensor objects, and then run Dataset.from_tensor_slices() . Convert the images to tf.Tensor objects, and then run Dataset.from_tensor_slices() .

C) Convert the images to tf.Tensor objects, and then run tf.data.Dataset.from_tensors() . tf.data.Dataset.from_tensors()

D) Convert the images into TFRecords, store the images in Cloud Storage, and then use the tf.data API to read the images for training. Convert the images into TFRecords, store the images in Cloud Storage, and then use the tf.data API to read the images for training.

Unlock Deck

Unlock for access to all 35 flashcards in this deck.

Unlock Deck

k this deck

11

You are building an ML model to detect anomalies in real-time sensor data. You will use Pub/Sub to handle incoming requests. You want to store the results for analytics and visualization. How should you configure the pipeline?

A) 1 = Dataflow, 2 = AI Platform, 3 = BigQuery

B) 1 = DataProc, 2 = AutoML, 3 = Cloud Bigtable

C) 1 = BigQuery, 2 = AutoML, 3 = Cloud Functions

D) 1 = BigQuery, 2 = AI Platform, 3 = Cloud Storage

A) 1 = Dataflow, 2 = AI Platform, 3 = BigQuery

B) 1 = DataProc, 2 = AutoML, 3 = Cloud Bigtable

C) 1 = BigQuery, 2 = AutoML, 3 = Cloud Functions

D) 1 = BigQuery, 2 = AI Platform, 3 = Cloud Storage

Unlock Deck

Unlock for access to all 35 flashcards in this deck.

Unlock Deck

k this deck

12

You work for a large hotel chain and have been asked to assist the marketing team in gathering predictions for a targeted marketing strategy. You need to make predictions about user lifetime value (LTV) over the next 20 days so that marketing can be adjusted accordingly. The customer dataset is in BigQuery, and you are preparing the tabular data for training with AutoML Tables. This data has a time signal that is spread across multiple columns. How should you ensure that AutoML fits the best model to your data?

A) Manually combine all columns that contain a time signal into an array. AIlow AutoML to interpret this array appropriately. Choose an automatic data split across the training, validation, and testing sets.

B) Submit the data for training without performing any manual transformations. AIlow AutoML to handle the appropriate transformations. Choose an automatic data split across the training, validation, and testing sets.

C) Submit the data for training without performing any manual transformations, and indicate an appropriate column as the Time column. AIlow AutoML to split your data based on the time signal provided, and reserve the more recent data for the validation and testing sets.

D) Submit the data for training without performing any manual transformations. Use the columns that have a time signal to manually split your data. Ensure that the data in your validation set is from 30 days after the data in your training set and that the data in your testing sets from 30 days after your validation set.

A) Manually combine all columns that contain a time signal into an array. AIlow AutoML to interpret this array appropriately. Choose an automatic data split across the training, validation, and testing sets.

B) Submit the data for training without performing any manual transformations. AIlow AutoML to handle the appropriate transformations. Choose an automatic data split across the training, validation, and testing sets.

C) Submit the data for training without performing any manual transformations, and indicate an appropriate column as the Time column. AIlow AutoML to split your data based on the time signal provided, and reserve the more recent data for the validation and testing sets.

D) Submit the data for training without performing any manual transformations. Use the columns that have a time signal to manually split your data. Ensure that the data in your validation set is from 30 days after the data in your training set and that the data in your testing sets from 30 days after your validation set.

Unlock Deck

Unlock for access to all 35 flashcards in this deck.

Unlock Deck

k this deck

13

You are developing a Kubeflow pipeline on Google Kubernetes Engine. The first step in the pipeline is to issue a query against BigQuery. You plan to use the results of that query as the input to the next step in your pipeline. You want to achieve this in the easiest way possible. What should you do?

A) Use the BigQuery console to execute your query, and then save the query results into a new BigQuery table.

B) Write a Python script that uses the BigQuery API to execute queries against BigQuery. Execute this script as the first step in your Kubeflow pipeline.

C) Use the Kubeflow Pipelines domain-specific language to create a custom component that uses the Python BigQuery client library to execute queries.

D) Locate the Kubeflow Pipelines repository on GitHub. Find the BigQuery Query Component, copy that component's URL, and use it to load the component into your pipeline. Use the component to execute queries against BigQuery.

A) Use the BigQuery console to execute your query, and then save the query results into a new BigQuery table.

B) Write a Python script that uses the BigQuery API to execute queries against BigQuery. Execute this script as the first step in your Kubeflow pipeline.

C) Use the Kubeflow Pipelines domain-specific language to create a custom component that uses the Python BigQuery client library to execute queries.

D) Locate the Kubeflow Pipelines repository on GitHub. Find the BigQuery Query Component, copy that component's URL, and use it to load the component into your pipeline. Use the component to execute queries against BigQuery.

Unlock Deck

Unlock for access to all 35 flashcards in this deck.

Unlock Deck

k this deck

14

You have a demand forecasting pipeline in production that uses Dataflow to preprocess raw data prior to model training and prediction. During preprocessing, you employ Z-score normalization on data stored in BigQuery and write it back to BigQuery. New training data is added every week. You want to make the process more efficient by minimizing computation time and manual intervention. What should you do?

A) Normalize the data using Google Kubernetes Engine.

B) Translate the normalization algorithm into SQL for use with BigQuery.

C) Use the normalizer_fn argument in TensorFlow's Feature Column API. Use the normalizer_fn argument in TensorFlow's Feature Column API.

D) Normalize the data with Apache Spark using the Dataproc connector for BigQuery.

A) Normalize the data using Google Kubernetes Engine.

B) Translate the normalization algorithm into SQL for use with BigQuery.

C) Use the normalizer_fn argument in TensorFlow's Feature Column API. Use the normalizer_fn argument in TensorFlow's Feature Column API.

D) Normalize the data with Apache Spark using the Dataproc connector for BigQuery.

Unlock Deck

Unlock for access to all 35 flashcards in this deck.

Unlock Deck

k this deck

15

You need to train a computer vision model that predicts the type of government ID present in a given image using a GPU-powered virtual machine on Compute Engine. You use the following parameters: Optimizer: SGD Image shape = 224×224 Batch size = 64 Epochs = 10 Verbose =2 During training you encounter the following error: ResourceExhaustedError: Out Of Memory (OOM) when allocating tensor . What should you do?

A) Change the optimizer.

B) Reduce the batch size.

C) Change the learning rate.

D) Reduce the image shape.

A) Change the optimizer.

B) Reduce the batch size.

C) Change the learning rate.

D) Reduce the image shape.

Unlock Deck

Unlock for access to all 35 flashcards in this deck.

Unlock Deck

k this deck

16

You are developing models to classify customer support emails. You created models with TensorFlow Estimators using small datasets on your on-premises system, but you now need to train the models using large datasets to ensure high performance. You will port your models to Google Cloud and want to minimize code refactoring and infrastructure overhead for easier migration from on-prem to cloud. What should you do?

A) Use AI Platform for distributed training.

B) Create a cluster on Dataproc for training.

C) Create a Managed Instance Group with autoscaling.

D) Use Kubeflow Pipelines to train on a Google Kubernetes Engine cluster.

A) Use AI Platform for distributed training.

B) Create a cluster on Dataproc for training.

C) Create a Managed Instance Group with autoscaling.

D) Use Kubeflow Pipelines to train on a Google Kubernetes Engine cluster.

Unlock Deck

Unlock for access to all 35 flashcards in this deck.

Unlock Deck

k this deck

17

Your team needs to build a model that predicts whether images contain a driver's license, passport, or credit card. The data engineering team already built the pipeline and generated a dataset composed of 10,000 images with driver's licenses, 1,000 images with passports, and 1,000 images with credit cards. You now have to train a model with the following label map: ['drivers_license', 'passport', 'credit_card']. Which loss function should you use?

A) Categorical hinge

B) Binary cross-entropy

C) Categorical cross-entropy

D) Sparse categorical cross-entropy

A) Categorical hinge

B) Binary cross-entropy

C) Categorical cross-entropy

D) Sparse categorical cross-entropy

Unlock Deck

Unlock for access to all 35 flashcards in this deck.

Unlock Deck

k this deck

18

You need to design a customized deep neural network in Keras that will predict customer purchases based on their purchase history. You want to explore model performance using multiple model architectures, store training data, and be able to compare the evaluation metrics in the same dashboard. What should you do?

A) Create multiple models using AutoML Tables.

B) Automate multiple training runs using Cloud Composer.

C) Run multiple training jobs on AI Platform with similar job names.

D) Create an experiment in Kubeflow Pipelines to organize multiple runs.

A) Create multiple models using AutoML Tables.

B) Automate multiple training runs using Cloud Composer.

C) Run multiple training jobs on AI Platform with similar job names.

D) Create an experiment in Kubeflow Pipelines to organize multiple runs.

Unlock Deck

Unlock for access to all 35 flashcards in this deck.

Unlock Deck

k this deck

19

You are responsible for building a unified analytics environment across a variety of on-premises data marts. Your company is experiencing data quality and security challenges when integrating data across the servers, caused by the use of a wide range of disconnected tools and temporary solutions. You need a fully managed, cloud-native data integration service that will lower the total cost of work and reduce repetitive work. Some members on your team prefer a codeless interface for building Extract, Transform, Load (ETL) process. Which service should you use?

A) Dataflow

B) Dataprep

C) Apache Flink

D) Cloud Data Fusion

A) Dataflow

B) Dataprep

C) Apache Flink

D) Cloud Data Fusion

Unlock Deck

Unlock for access to all 35 flashcards in this deck.

Unlock Deck

k this deck

20

You built and manage a production system that is responsible for predicting sales numbers. Model accuracy is crucial, because the production model is required to keep up with market changes. Since being deployed to production, the model hasn't changed; however the accuracy of the model has steadily deteriorated. What issue is most likely causing the steady decline in model accuracy?

A) Poor data quality

B) Lack of model retraining

C) Too few layers in the model for capturing information

D) Incorrect data split ratio during model training, evaluation, validation, and test

A) Poor data quality

B) Lack of model retraining

C) Too few layers in the model for capturing information

D) Incorrect data split ratio during model training, evaluation, validation, and test

Unlock Deck

Unlock for access to all 35 flashcards in this deck.

Unlock Deck

k this deck

21

You are training a TensorFlow model on a structured dataset with 100 billion records stored in several CSV files. You need to improve the input/output execution performance. What should you do?

A) Load the data into BigQuery, and read the data from BigQuery.

B) Load the data into Cloud Bigtable, and read the data from Bigtable.

C) Convert the CSV files into shards of TFRecords, and store the data in Cloud Storage.

D) Convert the CSV files into shards of TFRecords, and store the data in the Hadoop Distributed File System (HDFS).

A) Load the data into BigQuery, and read the data from BigQuery.

B) Load the data into Cloud Bigtable, and read the data from Bigtable.

C) Convert the CSV files into shards of TFRecords, and store the data in Cloud Storage.

D) Convert the CSV files into shards of TFRecords, and store the data in the Hadoop Distributed File System (HDFS).

Unlock Deck

Unlock for access to all 35 flashcards in this deck.

Unlock Deck

k this deck

22

You started working on a classification problem with time series data and achieved an area under the receiver operating characteristic curve (AUC ROC) value of 99% for training data after just a few experiments. You haven't explored using any sophisticated algorithms or spent any time on hyperparameter tuning. What should your next step be to identify and fix the problem?

A) Address the model overfitting by using a less complex algorithm.

B) Address data leakage by applying nested cross-validation during model training.

C) Address data leakage by removing features highly correlated with the target value.

D) Address the model overfitting by tuning the hyperparameters to reduce the AUC ROC value.

A) Address the model overfitting by using a less complex algorithm.

B) Address data leakage by applying nested cross-validation during model training.

C) Address data leakage by removing features highly correlated with the target value.

D) Address the model overfitting by tuning the hyperparameters to reduce the AUC ROC value.

Unlock Deck

Unlock for access to all 35 flashcards in this deck.

Unlock Deck

k this deck

23

You have a functioning end-to-end ML pipeline that involves tuning the hyperparameters of your ML model using AI Platform, and then using the best-tuned parameters for training. Hypertuning is taking longer than expected and is delaying the downstream processes. You want to speed up the tuning job without significantly compromising its effectiveness. Which actions should you take? (Choose two.)

A) Decrease the number of parallel trials.

B) Decrease the range of floating-point values.

C) Set the early stopping parameter to TRUE.

D) Change the search algorithm from Bayesian search to random search.

E) Decrease the maximum number of trials during subsequent training phases.

A) Decrease the number of parallel trials.

B) Decrease the range of floating-point values.

C) Set the early stopping parameter to TRUE.

D) Change the search algorithm from Bayesian search to random search.

E) Decrease the maximum number of trials during subsequent training phases.

Unlock Deck

Unlock for access to all 35 flashcards in this deck.

Unlock Deck

k this deck

24

You work for a large technology company that wants to modernize their contact center. You have been asked to develop a solution to classify incoming calls by product so that requests can be more quickly routed to the correct support team. You have already transcribed the calls using the Speech-to-Text API. You want to minimize data preprocessing and development time. How should you build the model?

A) Use the AI Platform Training built-in algorithms to create a custom model.

B) Use AutoMlL Natural Language to extract custom entities for classification.

C) Use the Cloud Natural Language API to extract custom entities for classification.

D) Build a custom model to identify the product keywords from the transcribed calls, and then run the keywords through a classification algorithm.

A) Use the AI Platform Training built-in algorithms to create a custom model.

B) Use AutoMlL Natural Language to extract custom entities for classification.

C) Use the Cloud Natural Language API to extract custom entities for classification.

D) Build a custom model to identify the product keywords from the transcribed calls, and then run the keywords through a classification algorithm.

Unlock Deck

Unlock for access to all 35 flashcards in this deck.

Unlock Deck

k this deck

25

You are an ML engineer at a global car manufacture. You need to build an ML model to predict car sales in different cities around the world. Which features or feature crosses should you use to train city-specific relationships between car type and number of sales?

A) Thee individual features: binned latitude, binned longitude, and one-hot encoded car type.

B) One feature obtained as an element-wise product between latitude, longitude, and car type.

C) One feature obtained as an element-wise product between binned latitude, binned longitude, and one-hot encoded car type.

D) Two feature crosses as an element-wise product: the first between binned latitude and one-hot encoded car type, and the second between binned longitude and one-hot encoded car type.

A) Thee individual features: binned latitude, binned longitude, and one-hot encoded car type.

B) One feature obtained as an element-wise product between latitude, longitude, and car type.

C) One feature obtained as an element-wise product between binned latitude, binned longitude, and one-hot encoded car type.

D) Two feature crosses as an element-wise product: the first between binned latitude and one-hot encoded car type, and the second between binned longitude and one-hot encoded car type.

Unlock Deck

Unlock for access to all 35 flashcards in this deck.

Unlock Deck

k this deck

26

You work for a bank and are building a random forest model for fraud detection. You have a dataset that includes transactions, of which 1% are identified as fraudulent. Which data transformation strategy would likely improve the performance of your classifier?

A) Write your data in TFRecords.

B) Z-normalize all the numeric features.

C) Oversample the fraudulent transaction 10 times.

D) Use one-hot encoding on all categorical features.

A) Write your data in TFRecords.

B) Z-normalize all the numeric features.

C) Oversample the fraudulent transaction 10 times.

D) Use one-hot encoding on all categorical features.

Unlock Deck

Unlock for access to all 35 flashcards in this deck.

Unlock Deck

k this deck

27

You work for an advertising company and want to understand the effectiveness of your company's latest advertising campaign. You have streamed 500 MB of campaign data into BigQuery. You want to query the table, and then manipulate the results of that query with a pandas dataframe in an AI Platform notebook. What should you do?

A) Use AI Platform Notebooks' BigQuery cell magic to query the data, and ingest the results as a pandas dataframe.

B) Export your table as a CSV file from BigQuery to Google Drive, and use the Google Drive API to ingest the file into your notebook instance.

C) Download your table from BigQuery as a local CSV file, and upload it to your AI Platform notebook instance. Use pandas.read_csv to ingest he file as a pandas dataframe. Download your table from BigQuery as a local CSV file, and upload it to your AI Platform notebook instance. Use pandas.read_csv to ingest he file as a pandas dataframe.

D) From a bash cell in your AI Platform notebook, use the bq extract command to export the table as a CSV file to Cloud Storage, and then use gsutil cp to copy the data into the notebook. Use pandas.read_csv to ingest the file as a pandas dataframe. From a bash cell in your AI Platform notebook, use the bq extract command to export the table as a CSV file to Cloud Storage, and then use gsutil cp to copy the data into the notebook. Use to ingest the file as a pandas dataframe.

A) Use AI Platform Notebooks' BigQuery cell magic to query the data, and ingest the results as a pandas dataframe.

B) Export your table as a CSV file from BigQuery to Google Drive, and use the Google Drive API to ingest the file into your notebook instance.

C) Download your table from BigQuery as a local CSV file, and upload it to your AI Platform notebook instance. Use pandas.read_csv to ingest he file as a pandas dataframe. Download your table from BigQuery as a local CSV file, and upload it to your AI Platform notebook instance. Use pandas.read_csv to ingest he file as a pandas dataframe.

D) From a bash cell in your AI Platform notebook, use the bq extract command to export the table as a CSV file to Cloud Storage, and then use gsutil cp to copy the data into the notebook. Use pandas.read_csv to ingest the file as a pandas dataframe. From a bash cell in your AI Platform notebook, use the bq extract command to export the table as a CSV file to Cloud Storage, and then use gsutil cp to copy the data into the notebook. Use to ingest the file as a pandas dataframe.

Unlock Deck

Unlock for access to all 35 flashcards in this deck.

Unlock Deck

k this deck

28

Your team is building a convolutional neural network (CNN)-based architecture from scratch. The preliminary experiments running on your on-premises CPU-only infrastructure were encouraging, but have slow convergence. You have been asked to speed up model training to reduce time-to-market. You want to experiment with virtual machines (VMs) on Google Cloud to leverage more powerful hardware. Your code does not include any manual device placement and has not been wrapped in Estimator model-level abstraction. Which environment should you train your model on?

A) AVM on Compute Engine and 1 TPU with all dependencies installed manually.

B) AVM on Compute Engine and 8 GPUs with all dependencies installed manually.

C) A Deep Learning VM with an n1-standard-2 machine and 1 GPU with all libraries pre-installed.

D) A Deep Learning VM with more powerful CPU e2-highcpu-16 machines with all libraries pre-installed.

A) AVM on Compute Engine and 1 TPU with all dependencies installed manually.

B) AVM on Compute Engine and 8 GPUs with all dependencies installed manually.

C) A Deep Learning VM with an n1-standard-2 machine and 1 GPU with all libraries pre-installed.

D) A Deep Learning VM with more powerful CPU e2-highcpu-16 machines with all libraries pre-installed.

Unlock Deck

Unlock for access to all 35 flashcards in this deck.

Unlock Deck

k this deck

29

Your team has been tasked with creating an ML solution in Google Cloud to classify support requests for one of your platforms. You analyzed the requirements and decided to use TensorFlow to build the classifier so that you have full control of the model's code, serving, and deployment. You will use Kubeflow pipelines for the ML platform. To save time, you want to build on existing resources and use managed services instead of building a completely new model. How should you build the classifier?

A) Use the Natural Language API to classify support requests.

B) Use AutoML Natural Language to build the support requests classifier.

C) Use an established text classification model on AI Platform to perform transfer learning.

D) Use an established text classification model on AI Platform as-is to classify support requests.

A) Use the Natural Language API to classify support requests.

B) Use AutoML Natural Language to build the support requests classifier.

C) Use an established text classification model on AI Platform to perform transfer learning.

D) Use an established text classification model on AI Platform as-is to classify support requests.

Unlock Deck

Unlock for access to all 35 flashcards in this deck.

Unlock Deck

k this deck

30

You recently joined a machine learning team that will soon release a new project. As a lead on the project, you are asked to determine the production readiness of the ML components. The team has already tested features and data, model development, and infrastructure. Which additional readiness check should you recommend to the team?

A) Ensure that training is reproducible.

B) Ensure that all hyperparameters are tuned.

C) Ensure that model performance is monitored.

D) Ensure that feature expectations are captured in the schema.

A) Ensure that training is reproducible.

B) Ensure that all hyperparameters are tuned.

C) Ensure that model performance is monitored.

D) Ensure that feature expectations are captured in the schema.

Unlock Deck

Unlock for access to all 35 flashcards in this deck.

Unlock Deck

k this deck

31

You recently joined an enterprise-scale company that has thousands of datasets. You know that there are accurate descriptions for each table in BigQuery, and you are searching for the proper BigQuery table to use for a model you are building on AI Platform. How should you find the data that you need?

A) Use Data Catalog to search the BigQuery datasets by using keywords in the table description.

B) Tag each of your model and version resources on AI Platform with the name of the BigQuery table that was used for training.

C) Maintain a lookup table in BigQuery that maps the table descriptions to the table ID. Query the lookup table to find the correct table ID for the data that you need.

D) Execute a query in BigQuery to retrieve all the existing table names in your project using the INFORMATION_SCHEMA metadata tables that are native to BigQuery. Use the result o find the table that you need.

A) Use Data Catalog to search the BigQuery datasets by using keywords in the table description.

B) Tag each of your model and version resources on AI Platform with the name of the BigQuery table that was used for training.

C) Maintain a lookup table in BigQuery that maps the table descriptions to the table ID. Query the lookup table to find the correct table ID for the data that you need.

D) Execute a query in BigQuery to retrieve all the existing table names in your project using the INFORMATION_SCHEMA metadata tables that are native to BigQuery. Use the result o find the table that you need.

Unlock Deck

Unlock for access to all 35 flashcards in this deck.

Unlock Deck

k this deck

32

Your company manages a video sharing website where users can watch and upload videos. You need to create an ML model to predict which newly uploaded videos will be the most popular so that those videos can be prioritized on your company's website. Which result should you use to determine whether the model is successful?

A) The model predicts videos as popular if the user who uploads them has over 10,000 likes.

B) The model predicts 97.5% of the most popular clickbait videos measured by number of clicks.

C) The model predicts 95% of the most popular videos measured by watch time within 30 days of being uploaded.

D) The Pearson correlation coefficient between the log-transformed number of views after 7 days and 30 days after publication is equal to 0.

A) The model predicts videos as popular if the user who uploads them has over 10,000 likes.

B) The model predicts 97.5% of the most popular clickbait videos measured by number of clicks.

C) The model predicts 95% of the most popular videos measured by watch time within 30 days of being uploaded.

D) The Pearson correlation coefficient between the log-transformed number of views after 7 days and 30 days after publication is equal to 0.

Unlock Deck

Unlock for access to all 35 flashcards in this deck.

Unlock Deck

k this deck

33

Your data science team needs to rapidly experiment with various features, model architectures, and hyperparameters. They need to track the accuracy metrics for various experiments and use an API to query the metrics over time. What should they use to track and report their experiments while minimizing manual effort?

A) Use Kubeflow Pipelines to execute the experiments. Export the metrics file, and query the results using the Kubeflow Pipelines API.

B) Use AI Platform Training to execute the experiments. Write the accuracy metrics to BigQuery, and query the results using the BigQuery API.

C) Use AI Platform Training to execute the experiments. Write the accuracy metrics to Cloud Monitoring, and query the results using the Monitoring API.

D) Use AI Platform Notebooks to execute the experiments. Collect the results in a shared Google Sheets file, and query the results using the Google Sheets API.

A) Use Kubeflow Pipelines to execute the experiments. Export the metrics file, and query the results using the Kubeflow Pipelines API.

B) Use AI Platform Training to execute the experiments. Write the accuracy metrics to BigQuery, and query the results using the BigQuery API.

C) Use AI Platform Training to execute the experiments. Write the accuracy metrics to Cloud Monitoring, and query the results using the Monitoring API.

D) Use AI Platform Notebooks to execute the experiments. Collect the results in a shared Google Sheets file, and query the results using the Google Sheets API.

Unlock Deck

Unlock for access to all 35 flashcards in this deck.

Unlock Deck

k this deck

34

Your team is working on an NLP research project to predict political affiliation of authors based on articles they have written. You have a large training dataset that is structured like this: ![<strong>Your team is working on an NLP research project to predict political affiliation of authors based on articles they have written. You have a large training dataset that is structured like this: You followed the standard 80%-10%-10% data distribution across the training, testing, and evaluation subsets. How should you distribute the training examples across the train-test-eval subsets while maintaining the 80-10-10 proportion?</strong> A) Distribute texts randomly across the train-test-eval subsets: Train set: [TextA1, TextB2, ...] Test set: [TextA2, TextC1, TextD2, ...] Eval set: [TextB1, TextC2, TextD1, ...] B) Distribute authors randomly across the train-test-eval subsets: (*) Train set: [TextA1, TextA2, TextD1, TextD2, ...] Test set: [TextB1, TextB2, ...] Eval set: [TexC1,TextC2 ...] C) Distribute sentences randomly across the train-test-eval subsets: Train set: [SentenceA11, SentenceA21, SentenceB11, SentenceB21, SentenceC11, SentenceD21 ...] Test set: [SentenceA12, SentenceA22, SentenceB12, SentenceC22, SentenceC12, SentenceD22 ...] Eval set: [SentenceA13, SentenceA23, SentenceB13, SentenceC23, SentenceC13, SentenceD31 ...] D) Distribute paragraphs of texts (i.e., chunks of consecutive sentences) across the train-test-eval subsets: Train set: [SentenceA11, SentenceA12, SentenceD11, SentenceD12 ...] Test set: [SentenceA13, SentenceB13, SentenceB21, SentenceD23, SentenceC12, SentenceD13 ...] Eval set: [SentenceA11, SentenceA22, SentenceB13, SentenceD22, SentenceC23, SentenceD11 ...]](https://storage.examlex.com/C1428/11ec58c7_4d8b_db27_a4e0_753df2218a18_C1428_00.jpg) You followed the standard 80%-10%-10% data distribution across the training, testing, and evaluation subsets. How should you distribute the training examples across the train-test-eval subsets while maintaining the 80-10-10 proportion?

You followed the standard 80%-10%-10% data distribution across the training, testing, and evaluation subsets. How should you distribute the training examples across the train-test-eval subsets while maintaining the 80-10-10 proportion?

A) Distribute texts randomly across the train-test-eval subsets: Train set: [TextA1, TextB2, ...]

Test set: [TextA2, TextC1, TextD2, ...]

Eval set: [TextB1, TextC2, TextD1, ...]

B) Distribute authors randomly across the train-test-eval subsets: (*) Train set: [TextA1, TextA2, TextD1, TextD2, ...] Test set: [TextB1, TextB2, ...]

Eval set: [TexC1,TextC2 ...]

C) Distribute sentences randomly across the train-test-eval subsets: Train set: [SentenceA11, SentenceA21, SentenceB11, SentenceB21, SentenceC11, SentenceD21 ...] Test set: [SentenceA12, SentenceA22, SentenceB12, SentenceC22, SentenceC12, SentenceD22 ...]

Eval set: [SentenceA13, SentenceA23, SentenceB13, SentenceC23, SentenceC13, SentenceD31 ...]

D) Distribute paragraphs of texts (i.e., chunks of consecutive sentences) across the train-test-eval subsets: Train set: [SentenceA11, SentenceA12, SentenceD11, SentenceD12 ...] Test set: [SentenceA13, SentenceB13, SentenceB21, SentenceD23, SentenceC12, SentenceD13 ...]

Eval set: [SentenceA11, SentenceA22, SentenceB13, SentenceD22, SentenceC23, SentenceD11 ...]

![<strong>Your team is working on an NLP research project to predict political affiliation of authors based on articles they have written. You have a large training dataset that is structured like this: You followed the standard 80%-10%-10% data distribution across the training, testing, and evaluation subsets. How should you distribute the training examples across the train-test-eval subsets while maintaining the 80-10-10 proportion?</strong> A) Distribute texts randomly across the train-test-eval subsets: Train set: [TextA1, TextB2, ...] Test set: [TextA2, TextC1, TextD2, ...] Eval set: [TextB1, TextC2, TextD1, ...] B) Distribute authors randomly across the train-test-eval subsets: (*) Train set: [TextA1, TextA2, TextD1, TextD2, ...] Test set: [TextB1, TextB2, ...] Eval set: [TexC1,TextC2 ...] C) Distribute sentences randomly across the train-test-eval subsets: Train set: [SentenceA11, SentenceA21, SentenceB11, SentenceB21, SentenceC11, SentenceD21 ...] Test set: [SentenceA12, SentenceA22, SentenceB12, SentenceC22, SentenceC12, SentenceD22 ...] Eval set: [SentenceA13, SentenceA23, SentenceB13, SentenceC23, SentenceC13, SentenceD31 ...] D) Distribute paragraphs of texts (i.e., chunks of consecutive sentences) across the train-test-eval subsets: Train set: [SentenceA11, SentenceA12, SentenceD11, SentenceD12 ...] Test set: [SentenceA13, SentenceB13, SentenceB21, SentenceD23, SentenceC12, SentenceD13 ...] Eval set: [SentenceA11, SentenceA22, SentenceB13, SentenceD22, SentenceC23, SentenceD11 ...]](https://storage.examlex.com/C1428/11ec58c7_4d8b_db27_a4e0_753df2218a18_C1428_00.jpg) You followed the standard 80%-10%-10% data distribution across the training, testing, and evaluation subsets. How should you distribute the training examples across the train-test-eval subsets while maintaining the 80-10-10 proportion?

You followed the standard 80%-10%-10% data distribution across the training, testing, and evaluation subsets. How should you distribute the training examples across the train-test-eval subsets while maintaining the 80-10-10 proportion?A) Distribute texts randomly across the train-test-eval subsets: Train set: [TextA1, TextB2, ...]

Test set: [TextA2, TextC1, TextD2, ...]

Eval set: [TextB1, TextC2, TextD1, ...]

B) Distribute authors randomly across the train-test-eval subsets: (*) Train set: [TextA1, TextA2, TextD1, TextD2, ...] Test set: [TextB1, TextB2, ...]

Eval set: [TexC1,TextC2 ...]

C) Distribute sentences randomly across the train-test-eval subsets: Train set: [SentenceA11, SentenceA21, SentenceB11, SentenceB21, SentenceC11, SentenceD21 ...] Test set: [SentenceA12, SentenceA22, SentenceB12, SentenceC22, SentenceC12, SentenceD22 ...]

Eval set: [SentenceA13, SentenceA23, SentenceB13, SentenceC23, SentenceC13, SentenceD31 ...]

D) Distribute paragraphs of texts (i.e., chunks of consecutive sentences) across the train-test-eval subsets: Train set: [SentenceA11, SentenceA12, SentenceD11, SentenceD12 ...] Test set: [SentenceA13, SentenceB13, SentenceB21, SentenceD23, SentenceC12, SentenceD13 ...]

Eval set: [SentenceA11, SentenceA22, SentenceB13, SentenceD22, SentenceC23, SentenceD11 ...]

Unlock Deck

Unlock for access to all 35 flashcards in this deck.

Unlock Deck

k this deck

35

You work on a growing team of more than 50 data scientists who all use AI Platform. You are designing a strategy to organize your jobs, models, and versions in a clean and scalable way. Which strategy should you choose?

A) Set up restrictive IAM permissions on the AI Platform notebooks so that only a single user or group can access a given instance.

B) Separate each data scientist's work into a different project to ensure that the jobs, models, and versions created by each data scientist are accessible only to that user.

C) Use labels to organize resources into descriptive categories. Apply a label to each created resource so that users can filter the results by label when viewing or monitoring the resources.

D) Set up a BigQuery sink for Cloud Logging logs that is appropriately filtered to capture information about AI Platform resource usage. In BigQuery, create a SQL view that maps users to the resources they are using

A) Set up restrictive IAM permissions on the AI Platform notebooks so that only a single user or group can access a given instance.

B) Separate each data scientist's work into a different project to ensure that the jobs, models, and versions created by each data scientist are accessible only to that user.

C) Use labels to organize resources into descriptive categories. Apply a label to each created resource so that users can filter the results by label when viewing or monitoring the resources.

D) Set up a BigQuery sink for Cloud Logging logs that is appropriately filtered to capture information about AI Platform resource usage. In BigQuery, create a SQL view that maps users to the resources they are using

Unlock Deck

Unlock for access to all 35 flashcards in this deck.

Unlock Deck

k this deck