Exam 19: Professional Machine Learning Engineer

You work on a growing team of more than 50 data scientists who all use AI Platform. You are designing a strategy to organize your jobs, models, and versions in a clean and scalable way. Which strategy should you choose?

(Multiple Choice)

Correct Answer: A

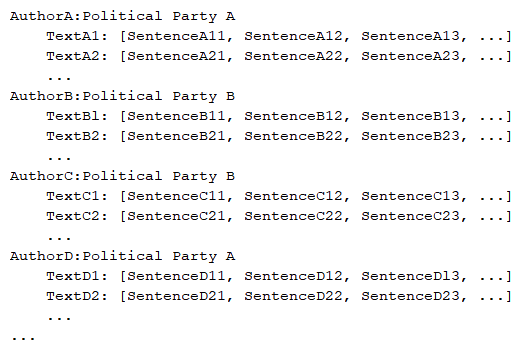

Your team is working on an NLP research project to predict political affiliation of authors based on articles they have written. You have a large training dataset that is structured like this:  You followed the standard 80%-10%-10% data distribution across the training, testing, and evaluation subsets. How should you distribute the training examples across the train-test-eval subsets while maintaining the 80-10-10 proportion?

(Multiple Choice)

Correct Answer: C
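
A likely concern in this question is leakage: if articles by the same author land in both the training and evaluation subsets, a model can score well by recognizing an author's writing style rather than by learning anything about political affiliation. The sketch below shows one common way to split at the author level instead of the article level. It assumes each example is an `(author_id, text)` pair, and the function names are hypothetical. Because whole authors are assigned, the 80/10/10 ratio holds over authors and only approximately over articles.

```python
import hashlib
from collections import defaultdict


def assign_split(author_id: str) -> str:
    """Deterministically map one author to "train", "test", or "eval".

    Hashing the author rather than the article keeps every article by the
    same author in a single subset.
    """
    digest = hashlib.sha256(author_id.encode("utf-8")).hexdigest()
    bucket = int(digest, 16) % 100  # near-uniform bucket in [0, 100)
    if bucket < 80:
        return "train"
    if bucket < 90:
        return "test"
    return "eval"


def split_by_author(articles):
    """Group (author_id, text) pairs into train/test/eval lists.

    The (author_id, text) schema is an assumption for illustration.
    """
    splits = defaultdict(list)
    for author_id, text in articles:
        splits[assign_split(author_id)].append((author_id, text))
    return dict(splits)
```

Hashing the author ID, rather than shuffling randomly, makes the assignment reproducible across reruns without storing a lookup table.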

Your company manages a video sharing website where users can watch and upload videos. You need to create an ML model to predict which newly uploaded videos will be the most popular so that those videos can be prioritized on your company's website. Which result should you use to determine whether the model is successful?

(Multiple Choice)

Correct Answer: C

You work for a large hotel chain and have been asked to assist the marketing team in gathering predictions for a targeted marketing strategy. You need to make predictions about user lifetime value (LTV) over the next 20 days so that marketing can be adjusted accordingly. The customer dataset is in BigQuery, and you are preparing the tabular data for training with AutoML Tables. This data has a time signal that is spread across multiple columns. How should you ensure that AutoML fits the best model to your data?

(Multiple Choice)

You are developing a Kubeflow pipeline on Google Kubernetes Engine. The first step in the pipeline is to issue a query against BigQuery. You plan to use the results of that query as the input to the next step in your pipeline. You want to achieve this in the easiest way possible. What should you do?

(Multiple Choice)

You are responsible for building a unified analytics environment across a variety of on-premises data marts. Your company is experiencing data quality and security challenges when integrating data across the servers, caused by the use of a wide range of disconnected tools and temporary solutions. You need a fully managed, cloud-native data integration service that will lower the total cost of work and reduce repetitive work. Some members of your team prefer a codeless interface for building Extract, Transform, Load (ETL) processes. Which service should you use?

(Multiple Choice)

You have a demand forecasting pipeline in production that uses Dataflow to preprocess raw data prior to model training and prediction. During preprocessing, you employ Z-score normalization on data stored in BigQuery and write it back to BigQuery. New training data is added every week. You want to make the process more efficient by minimizing computation time and manual intervention. What should you do?

(Multiple Choice)
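
For reference, Z-score normalization rescales each value as z = (x - μ) / σ, where μ and σ are the mean and standard deviation of the column. The following is a minimal, standard-library-only sketch of the formula, independent of Dataflow and BigQuery; the function names are hypothetical. It fits the statistics separately from applying them, so the same μ and σ can be applied consistently to rows added later.

```python
import statistics


def fit_zscore(values):
    """Return (mean, population standard deviation) computed from training values."""
    return statistics.fmean(values), statistics.pstdev(values)


def apply_zscore(values, mean, std):
    """Apply z = (x - mean) / std; map a constant column (std == 0) to zeros."""
    if std == 0:
        return [0.0 for _ in values]
    return [(x - mean) / std for x in values]
```

For example, `fit_zscore([2, 4, 4, 4, 5, 5, 7, 9])` gives a mean of 5.0 and a standard deviation of 2.0, so `apply_zscore([9], 5.0, 2.0)` returns `[2.0]`.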

You work for a large technology company that wants to modernize their contact center. You have been asked to develop a solution to classify incoming calls by product so that requests can be more quickly routed to the correct support team. You have already transcribed the calls using the Speech-to-Text API. You want to minimize data preprocessing and development time. How should you build the model?

(Multiple Choice)

You are training a ResNet model on AI Platform using TPUs to visually categorize types of defects in automobile engines. You capture the training profile using the Cloud TPU profiler plugin and observe that it is highly input-bound. You want to reduce the bottleneck and speed up your model training process. Which modifications should you make to the tf.data dataset? (Choose two.)

(Multiple Choice)

Your team needs to build a model that predicts whether images contain a driver's license, passport, or credit card. The data engineering team already built the pipeline and generated a dataset composed of 10,000 images with driver's licenses, 1,000 images with passports, and 1,000 images with credit cards. You now have to train a model with the following label map: ['drivers_license', 'passport', 'credit_card']. Which loss function should you use?

(Multiple Choice)
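
With three mutually exclusive classes, a multi-class cross-entropy over one-hot labels is the usual formulation, and the counts in the question (10,000 / 1,000 / 1,000) also make the data imbalanced. The answer options are not shown on this page, so the sketch below is an illustration rather than the keyed answer. Using only the Python standard library, it computes categorical cross-entropy for a single example and shows one optional way to derive inverse-frequency class weights from those counts. The helper names are hypothetical.

```python
import math

# Label map and per-class counts taken from the question.
LABELS = ["drivers_license", "passport", "credit_card"]
COUNTS = {"drivers_license": 10_000, "passport": 1_000, "credit_card": 1_000}


def one_hot(label):
    """Encode a class name as a one-hot vector ordered like LABELS."""
    return [1.0 if name == label else 0.0 for name in LABELS]


def categorical_cross_entropy(y_true, y_pred, eps=1e-12):
    """Loss for one example: -sum(y_true * log(y_pred)), y_pred being probabilities."""
    return -sum(t * math.log(max(p, eps)) for t, p in zip(y_true, y_pred))


def inverse_frequency_weights(counts):
    """Class weight = total / (num_classes * class_count), so rarer classes weigh more."""
    total = sum(counts.values())
    num_classes = len(counts)
    return {label: total / (num_classes * n) for label, n in counts.items()}
```

With these counts the weights come out to 0.4 for `drivers_license` and 4.0 each for `passport` and `credit_card`. Keras `Model.fit` accepts a `class_weight` dictionary keyed by class index, so the names would first be mapped to their positions in `LABELS`.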

You recently joined a machine learning team that will soon release a new project. As a lead on the project, you are asked to determine the production readiness of the ML components. The team has already tested features and data, model development, and infrastructure. Which additional readiness check should you recommend to the team?

(Multiple Choice)

You are training a TensorFlow model on a structured dataset with 100 billion records stored in several CSV files. You need to improve the input/output execution performance. What should you do?

(Multiple Choice)

You are an ML engineer at a global car manufacturer. You need to build an ML model to predict car sales in different cities around the world. Which features or feature crosses should you use to train city-specific relationships between car type and number of sales?

(Multiple Choice)
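
A feature cross combines two or more features into one synthetic feature, so that a linear or wide model can learn a separate weight for each combination, such as each pairing of location and car type. The sketch below illustrates the idea under stated assumptions. It bins latitude and longitude into grid cells as a stand-in for a city, using an assumed 1-degree cell size, and hashes the cell together with the car type into a fixed number of buckets. `NUM_BUCKETS`, the cell size, and the function names are hypothetical.

```python
import hashlib
import math

NUM_BUCKETS = 10_000  # hypothetical hash-space size for crossed ids


def lat_lon_bins(lat, lon, step=1.0):
    """Label the grid cell containing (lat, lon); step is the cell size in degrees."""
    return f"{math.floor(lat / step)}:{math.floor(lon / step)}"


def cross_feature(*parts, num_buckets=NUM_BUCKETS):
    """Hash the combination of categorical values into one crossed-feature id."""
    key = "_x_".join(parts)
    digest = hashlib.sha256(key.encode("utf-8")).hexdigest()
    return int(digest, 16) % num_buckets
```

For example, `cross_feature(lat_lon_bins(48.85, 2.35), "suv")` yields the same id for every SUV sale in that grid cell, so the model can learn a weight specific to that location and car type. Hash collisions are possible, and a larger `NUM_BUCKETS` makes them rarer.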

You developed an ML model with AI Platform, and you want to move it to production. You serve a few thousand queries per second and are experiencing latency issues. Incoming requests are served by a load balancer that distributes them across multiple Kubeflow CPU-only pods running on Google Kubernetes Engine (GKE). Your goal is to improve the serving latency without changing the underlying infrastructure. What should you do?

(Multiple Choice)

You are building an ML model to detect anomalies in real-time sensor data. You will use Pub/Sub to handle incoming requests. You want to store the results for analytics and visualization. How should you configure the pipeline?

(Multiple Choice)

You have written unit tests for a Kubeflow Pipeline that require custom libraries. You want to automate the execution of unit tests with each new push to your development branch in Cloud Source Repositories. What should you do?

(Multiple Choice)

Your team trained and tested a DNN regression model with good results. Six months after deployment, the model is performing poorly due to a change in the distribution of the input data. How should you address the input differences in production?

(Multiple Choice)

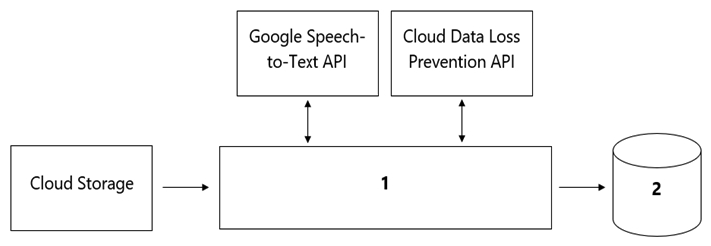

Your organization's call center has asked you to develop a model that analyzes customer sentiments in each call. The call center receives over one million calls daily, and data is stored in Cloud Storage. The data collected must not leave the region in which the call originated, and no Personally Identifiable Information (PII) can be stored or analyzed. The data science team has a third-party tool for visualization and access which requires an ANSI SQL:2011-compliant interface. You need to select components for data processing and for analytics. How should the data pipeline be designed?

(Multiple Choice)

You built and manage a production system that is responsible for predicting sales numbers. Model accuracy is crucial, because the production model is required to keep up with market changes. Since being deployed to production, the model hasn't changed; however, the accuracy of the model has steadily deteriorated. What issue is most likely causing the steady decline in model accuracy?

(Multiple Choice)

Your data science team needs to rapidly experiment with various features, model architectures, and hyperparameters. They need to track the accuracy metrics for various experiments and use an API to query the metrics over time. What should they use to track and report their experiments while minimizing manual effort?

(Multiple Choice)

Showing 1 - 20 of 35
