Deck 6: Data Mining

1

Jaccard's coefficient is different from the matching coefficient in that the:

A) former measures overlap while the latter measures dissimilarity.

B) former does not count matching zero entries while the latter does.

C) former deals with categorical variables while the latter deals with continuous variables.

D) former is affected by the scale used to measure variables while the latter is not.

Answer: B) former does not count matching zero entries while the latter does.
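
A small sketch may make the distinction concrete. It assumes two observations described by binary (0/1) categorical variables; the vectors `u` and `v` are hypothetical. The matching coefficient counts every agreement, including 0-0 matches, while Jaccard's coefficient drops the 0-0 matches from both the numerator and the denominator.

```python
def matching_coefficient(u, v):
    """Fraction of variables on which u and v agree (0-0 and 1-1 matches both count)."""
    matches = sum(1 for a, b in zip(u, v) if a == b)
    return matches / len(u)


def jaccard_coefficient(u, v):
    """Fraction of 1-1 matches among variables where u or v is 1 (0-0 matches are ignored)."""
    both_ones = sum(1 for a, b in zip(u, v) if a == 1 and b == 1)
    at_least_one = sum(1 for a, b in zip(u, v) if a == 1 or b == 1)
    # Undefined when neither observation has any 1s; returning 0.0 here is an assumption.
    return both_ones / at_least_one if at_least_one else 0.0


# Hypothetical observations: 1 = attribute present, 0 = absent
u = [1, 0, 0, 0, 1, 0]
v = [1, 0, 0, 0, 0, 1]
print(round(matching_coefficient(u, v), 4))  # 0.6667 (4 of 6 positions agree)
print(round(jaccard_coefficient(u, v), 4))   # 0.3333 (1 shared 1 among 3 positions with a 1)
```

Because `u` and `v` share three 0-0 entries, the matching coefficient rates them as fairly similar, whereas Jaccard's coefficient, which ignores those shared absences, does not.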

2

_____ is a measure of calculating dissimilarity between clusters by considering only the two most dissimilar observations in the two clusters.

A) Single linkage

B) Complete linkage

C) Average linkage

D) Average group linkage

Answer: B) Complete linkage
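
The four linkage measures named in this deck differ only in which pairwise distances they summarize. The sketch below assumes Euclidean distance between observations and represents each cluster as a list of numeric tuples; the two clusters are hypothetical. Following the deck's wording, average group linkage is computed as the distance between the cluster centroids.

```python
import math
from itertools import product


def single_linkage(c1, c2):
    """Distance between the two closest observations, one from each cluster."""
    return min(math.dist(a, b) for a, b in product(c1, c2))


def complete_linkage(c1, c2):
    """Distance between the two most dissimilar observations, one from each cluster."""
    return max(math.dist(a, b) for a, b in product(c1, c2))


def average_linkage(c1, c2):
    """Average distance over every pair of observations drawn from the two clusters."""
    return sum(math.dist(a, b) for a, b in product(c1, c2)) / (len(c1) * len(c2))


def centroid(cluster):
    return tuple(sum(coords) / len(cluster) for coords in zip(*cluster))


def average_group_linkage(c1, c2):
    """Distance between the two cluster centroids."""
    return math.dist(centroid(c1), centroid(c2))


cluster_a = [(0, 0), (0, 2)]  # hypothetical observations
cluster_b = [(3, 0), (5, 0)]
for name, measure in [("single", single_linkage), ("complete", complete_linkage),
                      ("average", average_linkage), ("average group", average_group_linkage)]:
    print(name, round(measure(cluster_a, cluster_b), 3))
```

For these points, single linkage returns 3.0 (the closest pair), complete linkage returns the square root of 29, about 5.385 (the farthest pair), and the two averaging measures fall in between.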

3

Which of the following reasons is responsible for the increase in the use of data-mining techniques in business?

A) The lack of methods to electronically track data

B) The dearth of information to analyze and interpret

C) The ability to electronically warehouse data

D) The ability to manually analyze all the data

Answer: C) The ability to electronically warehouse data

4

A sample is representative of the entire data population only if it:

A) includes all the observations in the original data repository.

B) can be used to draw the same conclusions as the database.

C) is drawn sequentially from the given database.

D) is small enough to be manipulated quickly.

5

The simplest measure of similarity between observations consisting solely of categorical variables is given by _____.

A) the Euclidean distance

B) the Ward's distance

C) matching coefficient

D) Jaccard's coefficient

6

Single linkage is a measure of calculating dissimilarity between clusters by:

A) considering only the two most dissimilar observations in the two clusters.

B) computing the average dissimilarity between every pair of observations between the two clusters.

C) considering only the two closest observations in the two clusters.

D) considering the distance between the cluster centroids.

7

The process of reducing the number of variables to consider in a data-mining approach without losing any crucial information is termed _____.

A) dimension reduction

B) data sampling

C) data reduction

D) aggregation

8

Which of the following methods is used by the analyst to decide if a particular variable needs to be retained in the sample during the sampling process?

A) Descriptive statistics and data visualization

B) Regression

C) Outlier analysis

D) Data testing

9

In which of the following data-mining process steps is the data manipulated to make it suitable for formal modeling?

A) Data sampling

B) Data preparation

C) Model construction

D) Model assessment

10

Which of the following is true of Euclidean distances?

A) It is used to measure dissimilarity between categorical variable observations.

B) It is not affected by the scale on which variables are measured.

C) It increases with the increase in similarity between variable values.

D) It is susceptible to distortions from outlier measurements.
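
Because Euclidean distance sums squared differences, a variable measured on a large scale (or an extreme outlier value) can dominate it. The sketch below uses three hypothetical customers described by age (years) and income (dollars) and compares raw distances with distances after z-score normalization, which is one common way to put variables on a comparable scale.

```python
import math
from statistics import mean, pstdev

# Hypothetical customers: (age in years, income in dollars)
customers = [(25, 40_000), (60, 41_000), (26, 90_000)]


def z_normalize(rows):
    """Rescale each column to mean 0 and (population) standard deviation 1."""
    columns = list(zip(*rows))
    stats = [(mean(col), pstdev(col)) for col in columns]
    return [tuple((x - m) / s for x, (m, s) in zip(row, stats)) for row in rows]


raw_01 = math.dist(customers[0], customers[1])  # very different ages, similar incomes
raw_02 = math.dist(customers[0], customers[2])  # similar ages, very different incomes
norm = z_normalize(customers)
norm_01 = math.dist(norm[0], norm[1])
norm_02 = math.dist(norm[0], norm[2])

print(f"raw:        d(0,1) = {raw_01:.1f}, d(0,2) = {raw_02:.1f}")
print(f"normalized: d(0,1) = {norm_01:.3f}, d(0,2) = {norm_02:.3f}")
```

On the raw scale the 35-year age gap barely registers next to income differences; after normalization the two pairs come out roughly equally far apart.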

11

_____ is a category of data-mining techniques in which an algorithm learns how to predict or classify an outcome variable of interest.

A) Supervised learning

B) Unsupervised learning

C) Dimension reduction

D) Data sampling

12

The data preparation technique used in market segmentation to divide consumers into different homogeneous groups is _____.

A) data visualization

B) cluster analysis

C) market analysis

D) supervised learning

13

Which of the following is true of unsupervised learning?

A) Its objective is to predict the outcome of a variable.

B) Its error tolerance is tightly controlled by accuracy measures.

C) Qualitative assessments are used to confirm the definite accuracy measures.

D) It detects patterns and relationships in the data.

14

Which of the following is true of hierarchical clustering?

A) All observations are put in a mega-cluster to begin with.

B) Each of the large clusters is broken down iteratively.

C) It is a bottom-up approach to clustering.

D) At the end of the process, observations in the same cluster have maximum distance.
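
Agglomerative (bottom-up) hierarchical clustering starts with every observation in its own cluster and repeatedly merges the two closest clusters until only one remains. The minimal sketch below uses single linkage on hypothetical one-dimensional observations and records each merge together with the distance at which it happens; those merge distances are the heights a dendrogram plots. It is an illustration only, not XLMiner's implementation.

```python
import math
from itertools import combinations


def agglomerate(points):
    """Return the merge sequence as (cluster_id, cluster_id, merge_distance) tuples."""
    clusters = {i: [p] for i, p in enumerate(points)}  # each observation starts alone
    merges = []
    next_id = len(points)  # ids for newly formed clusters
    while len(clusters) > 1:
        # Single linkage: cluster distance = closest pair of members
        (a, b), dist = min(
            (((i, j), min(math.dist(p, q) for p in clusters[i] for q in clusters[j]))
             for i, j in combinations(clusters, 2)),
            key=lambda pair_and_dist: pair_and_dist[1],
        )
        clusters[next_id] = clusters.pop(a) + clusters.pop(b)
        merges.append((a, b, dist))
        next_id += 1
    return merges


observations = [(0,), (1,), (5,), (6,), (20,)]  # hypothetical
for a, b, dist in agglomerate(observations):
    print(f"merge {a} and {b} at distance {dist}")
```

Clusters 5 and 6 here are the new clusters formed by the first two merges; the final merge, at distance 14, joins the isolated observation 20 to everything else.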

15

_____ is the process of estimating the value of a categorical outcome variable.

A) Sampling

B) Prediction

C) Classification

D) Validation

16

k-means clustering is the process of:

A) agglomerating observations into a series of nested groups based on a measure of similarity.

B) organizing observations into one of a number of groups based on a measure of similarity.

C) reducing the number of variables to consider in a data-mining approach.

D) estimating the value of a continuous outcome variable.

17

Observation refers to the:

A) estimated continuous outcome variable.

B) set of recorded values of variables associated with a single entity.

C) goal of predicting a categorical outcome based on a set of variables.

D) mean of all variable values associated with one particular entity.

18

The estimation of the value for a continuous outcome is done during _____.

A) classification

B) prediction

C) data preparation

D) data sampling

19

_____ methods do not attempt to predict an output value but are rather used to detect patterns and relationships in the data.

A) Supervised learning

B) Machine learning

C) Artificial intelligence

D) Unsupervised learning

20

Average linkage is a measure of calculating dissimilarity between clusters by:

A) considering only the two most dissimilar observations in the two clusters.

B) computing the average dissimilarity between every pair of observations between the two clusters.

C) considering only the two closest observations in the two clusters.

D) considering the distance between the cluster centroids.

21

A _____ refers to the number of times that a collection of items occurs together in a transaction data set.

A) test set

B) validation count

C) support count

D) training set

22

A tree diagram used to illustrate the sequence of nested clusters produced by hierarchical clustering is known as a _____.

A) dendrogram

B) scatter chart

C) decile-wise lift chart

D) cumulative lift tree

23

The lift ratio of an association rule with a confidence value of 0.43 and in which the consequent occurs in 6 out of 10 cases is:

A) 1.40

B) 0.54

C) 1.00

D) 0.72
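
The lift ratio compares a rule's confidence with the confidence expected if antecedent and consequent were independent, which is just the fraction of transactions containing the consequent: lift = confidence / (support of consequent / total transactions). A quick check with the numbers in this question:

```python
def lift_ratio(confidence, consequent_support, n_transactions):
    """Lift = confidence of the rule / (fraction of transactions containing the consequent)."""
    return confidence / (consequent_support / n_transactions)


lift = lift_ratio(confidence=0.43, consequent_support=6, n_transactions=10)
print(round(lift, 2))  # 0.43 / 0.6 = 0.7167, printed as 0.72
```

A lift below 1 means that knowing the antecedent occurred makes the consequent less likely than its baseline rate.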

24

The endpoint of a k-means clustering algorithm occurs when:

A) Euclidean distance between clusters is minimum.

B) Euclidean distance between observations in a cluster is maximum.

C) no further changes are observed in cluster structure and number.

D) all of the observations are encompassed within a single large cluster with mean k.
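
The sketch below is one common form of the k-means loop (often called Lloyd's algorithm): assign each observation to its nearest centroid, recompute each centroid as the mean of its cluster, and stop once an iteration leaves every assignment unchanged (or an iteration cap is reached). The data, `max_iter`, and `seed` are illustrative assumptions; XLMiner options such as multiple random starts are not modeled.

```python
import math
import random


def k_means(points, k, max_iter=50, seed=0):
    rng = random.Random(seed)
    centroids = rng.sample(points, k)  # initial centroids: k randomly chosen observations
    assignment = None
    for _ in range(max_iter):
        # Assignment step: each observation joins the cluster with the nearest centroid
        new_assignment = [min(range(k), key=lambda j: math.dist(p, centroids[j])) for p in points]
        if new_assignment == assignment:
            break  # no observation changed cluster, so the algorithm has converged
        assignment = new_assignment
        # Update step: move each centroid to the mean of its members
        for j in range(k):
            members = [p for p, a in zip(points, assignment) if a == j]
            if members:  # keep the previous centroid if a cluster ends up empty
                centroids[j] = tuple(sum(c) / len(members) for c in zip(*members))
    return assignment, centroids


points = [(1,), (2,), (3,), (10,), (11,), (12,)]  # hypothetical observations
labels, centers = k_means(points, k=2)
print(labels, centers)
```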

25

A cluster's _____ can be measured by the difference between the distance value at which a cluster is originally formed and the distance value at which it is merged with another cluster in a dendrogram.

A) dimension

B) affordability

C) durability

D) span

26

Which of the following is a commonly used supervised learning method?

A) k-means clustering

B) k-nearest neighbors

C) hierarchical clustering

D) association rule development

27

Test set is the data set used to:

A) build the data mining model.

B) estimate accuracy of candidate models on unseen data.

C) estimate accuracy of final model on unseen data.

D) show counts of actual versus predicted class values.

28

_____ can be used to partition observations in a manner to obtain clusters with the least amount of information loss due to the aggregation.

A) Single linkage

B) Ward's method

C) Average group linkage

D) Dendrogram
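
Ward's method measures the "information loss" of a merge as the increase in the within-cluster sum of squared distances to the cluster centroid, and at each step it merges the pair of clusters whose merge increases that sum the least. The sketch computes that increase for two hypothetical clusters twice: directly, and with the equivalent closed form n1*n2/(n1 + n2) * ||centroid1 - centroid2||^2.

```python
import math


def centroid(cluster):
    return tuple(sum(c) / len(cluster) for c in zip(*cluster))


def sse(cluster):
    """Within-cluster sum of squared Euclidean distances to the centroid."""
    center = centroid(cluster)
    return sum(math.dist(p, center) ** 2 for p in cluster)


def ward_merge_cost(c1, c2):
    """Increase in total within-cluster SSE if c1 and c2 are merged."""
    return sse(c1 + c2) - sse(c1) - sse(c2)


def ward_merge_cost_closed_form(c1, c2):
    n1, n2 = len(c1), len(c2)
    return n1 * n2 / (n1 + n2) * math.dist(centroid(c1), centroid(c2)) ** 2


c1 = [(0, 0), (2, 0)]  # hypothetical clusters
c2 = [(10, 0)]
print(ward_merge_cost(c1, c2), ward_merge_cost_closed_form(c1, c2))
```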

29

_____ refers to the scenario in which the analyst builds a model that does a great job of explaining the sample of data on which it is based but fails to accurately predict outside the sample data.

A) Underfitting

B) Overfitting

C) Oversampling

D) Undersampling

30

An analysis of items frequently co-occurring in transactions is known as _____.

A) market segmentation

B) market basket analysis

C) regression analysis

D) cluster analysis

31

Separate error rates with respect to the false negative and false positive cases are computed to take into account the:

A) asymmetric costs in misclassification.

B) symmetric weights of these two cases.

C) distortions due to outliers.

D) effect of sampling error.

32

The impurity of a group of observations is based on the variance of the outcome value for the observations in the group for _____.

A) regression trees

B) time-series plots

C) classification trees

D) cumulative lift charts

33

One minus the overall error rate is often referred to as the _____ of the model.

A) sensitivity

B) accuracy

C) specificity

D) cutoff value
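
The error measures used in this deck can all be read off a 2x2 classification confusion matrix. The sketch below assumes class 1 is the "positive" class (for example, loan default) and uses hypothetical counts. Reporting the false negative and false positive rates separately matters when one kind of misclassification is costlier than the other.

```python
def classification_metrics(tp, fn, fp, tn):
    """tp/fn: actual class 1 predicted as 1/0; fp/tn: actual class 0 predicted as 1/0."""
    n = tp + fn + fp + tn
    overall_error = (fn + fp) / n
    return {
        "overall error rate": overall_error,
        "accuracy": 1 - overall_error,
        "false negative rate": fn / (tp + fn),  # share of actual class 1 missed
        "false positive rate": fp / (fp + tn),  # share of actual class 0 flagged as class 1
        "sensitivity": tp / (tp + fn),          # = 1 - false negative rate
        "specificity": tn / (fp + tn),          # = 1 - false positive rate
    }


# Hypothetical confusion matrix for 200 test observations
for name, value in classification_metrics(tp=40, fn=10, fp=20, tn=130).items():
    print(f"{name}: {value:.3f}")
```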

34

In the k-nearest neighbors method, when the value of k is set to 1:

A) the classification or prediction of a new observation is based solely on the single most similar observation from the training set.

B) the new observation's class is naïvely assigned to the most common class in the training set.

C) the new observation's prediction is used to estimate the anticipated error rate on future data over the entire training set.

D) the classification or prediction of a new observation is subject to the smallest possible classification error.
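
A minimal k-nearest neighbors classifier shows why k = 1 relies entirely on the single closest training observation. The training data and the new observation are hypothetical, distances are Euclidean, and ties in the vote are not handled specially.

```python
import math
from collections import Counter


def knn_classify(training, new_point, k):
    """training: list of (feature tuple, class label); majority vote of the k nearest."""
    nearest = sorted(training, key=lambda obs: math.dist(obs[0], new_point))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]


# Hypothetical training observations
training = [((1, 1), "A"), ((1, 2), "A"), ((2, 1), "A"), ((6, 6), "B"), ((2.2, 2.2), "B")]
new_obs = (2, 2)
print(knn_classify(training, new_obs, k=1), knn_classify(training, new_obs, k=3))
```

With k = 1 the lone nearby class B point decides the outcome; with k = 3 the two class A neighbors outvote it. A very small k tends to track noise in the training set, which is one route to overfitting.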

35

_____ is a measure of the heterogeneity of observations in a classification tree.

A) Sensitivity

B) Specificity

C) Accuracy

D) Impurity
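
Two common impurity measures are sketched below: the Gini index for the categorical outcome in a classification tree, and the variance of the outcome for a regression tree. Which impurity measure a given package uses (Gini, entropy, or another) is an assumption here, and the labels and values are hypothetical.

```python
from collections import Counter
from statistics import pvariance


def gini_impurity(labels):
    """0 for a pure group; larger values mean a more mixed (heterogeneous) group."""
    n = len(labels)
    return 1 - sum((count / n) ** 2 for count in Counter(labels).values())


def variance_impurity(values):
    """Regression-tree impurity: variance of the continuous outcome within the group."""
    return pvariance(values)


print(gini_impurity(["default"] * 6))                             # pure group
print(gini_impurity(["default", "repaid", "default", "repaid"]))  # evenly mixed
print(variance_impurity([2, 4, 4, 4, 5, 5, 7, 9]))
```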

36

_____ is a generalization of linear regression for predicting a categorical outcome variable.

A) Multiple linear regression

B) Logistic regression

C) Discriminant analysis

D) Cluster analysis
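
Logistic regression keeps the linear form of regression but models the log odds of the class 1 outcome: ln(p / (1 - p)) = b0 + b1x1 + ... + bqxq, so p = 1 / (1 + e^-(b0 + b1x1 + ... + bqxq)). The sketch below turns that equation into a probability and applies a cutoff. The coefficients are made up for illustration, and treating a probability exactly at the cutoff as class 1 is an assumption.

```python
import math


def logistic_probability(intercept, coefficients, x):
    """Estimated probability of class 1 given input values x."""
    log_odds = intercept + sum(b * xi for b, xi in zip(coefficients, x))
    return 1 / (1 + math.exp(-log_odds))


def classify(probability, cutoff=0.5):
    return 1 if probability >= cutoff else 0


# Hypothetical fitted model: log odds = -2 + 0.05 * Age
for age in (20, 40, 60):
    p = logistic_probability(-2.0, [0.05], [age])
    print(age, round(p, 3), classify(p))
```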

37

In which of the following scenarios would it be appropriate to use hierarchical clustering?

A) When the number of observations in the dataset is relatively high

B) When it is not necessary to know the nesting of clusters

C) When the number of clusters is known beforehand

D) When binary or ordinal data needs to be clustered

38

_____ is a measure of calculating dissimilarity between clusters by considering the distance between the cluster centroids.

A) Single linkage

B) Complete linkage

C) Average linkage

D) Average group linkage

39

An observation classified as part of a group with a characteristic when it actually does not have the characteristic is termed a(n) _____.

A) false negative

B) false positive

C) residual

D) outlier

40

A _____ classifies a categorical outcome variable by splitting observations into groups via a sequence of hierarchical rules.

A) regression tree

B) scatter chart

C) classification tree

D) classification confusion matrix

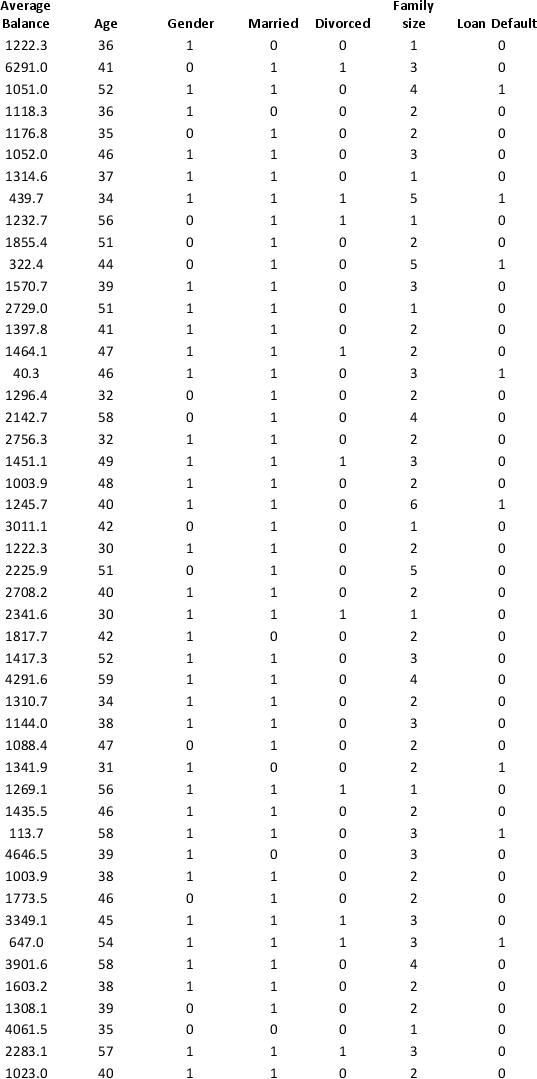

41

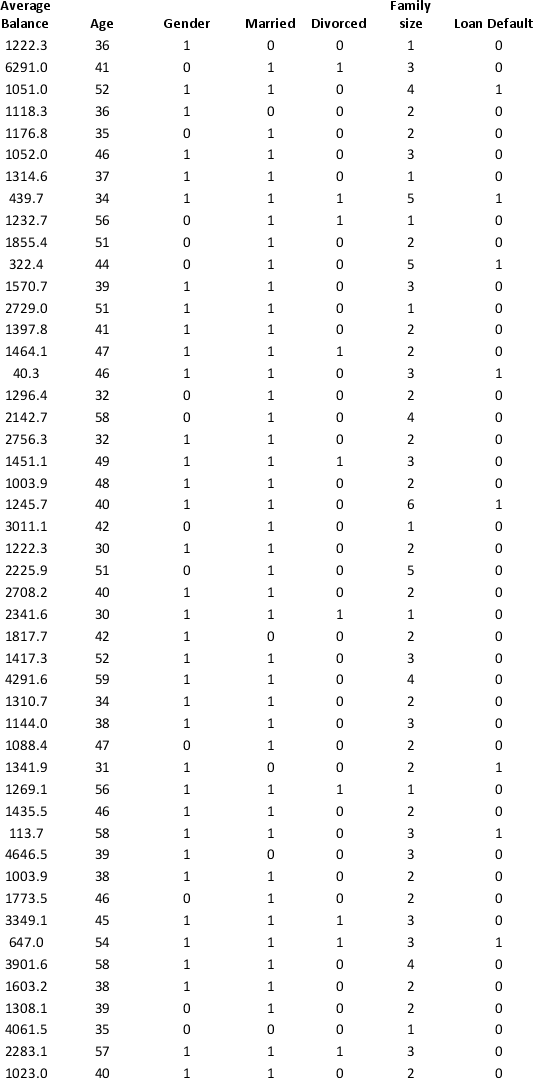

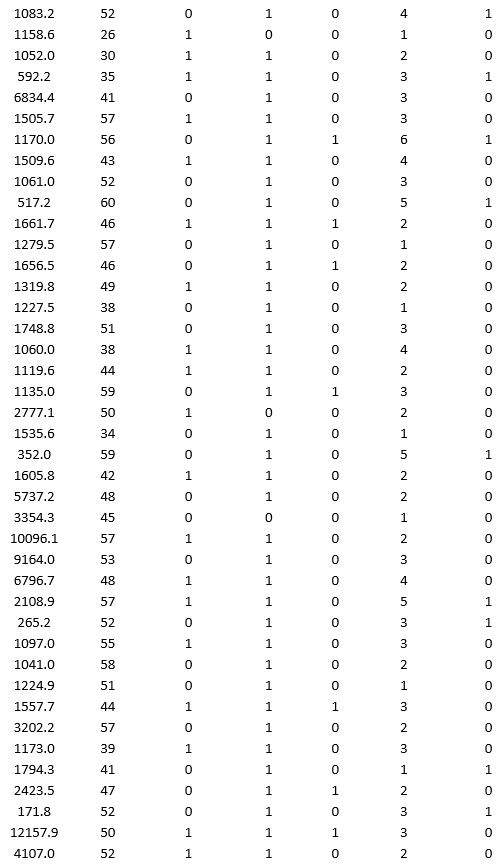

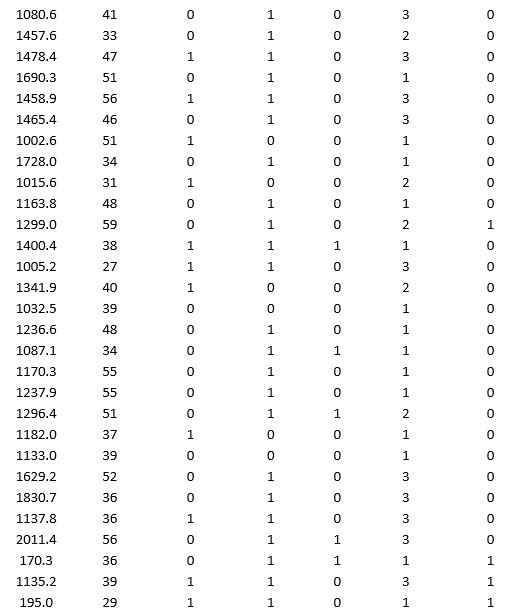

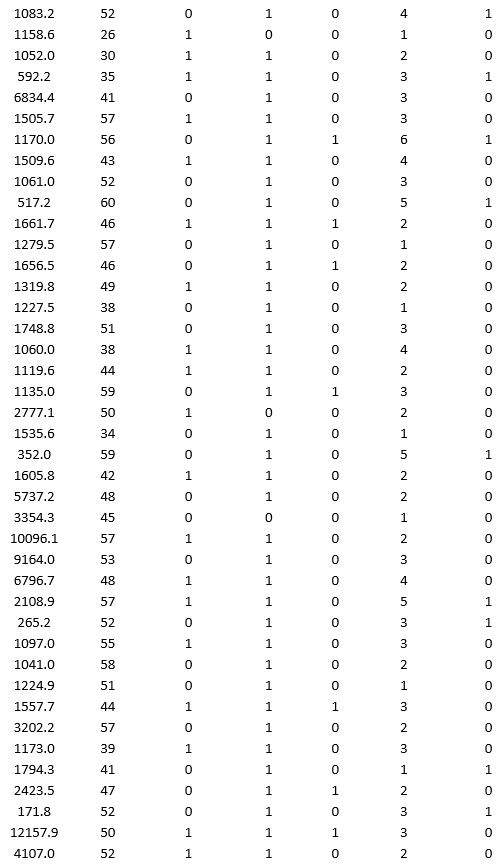

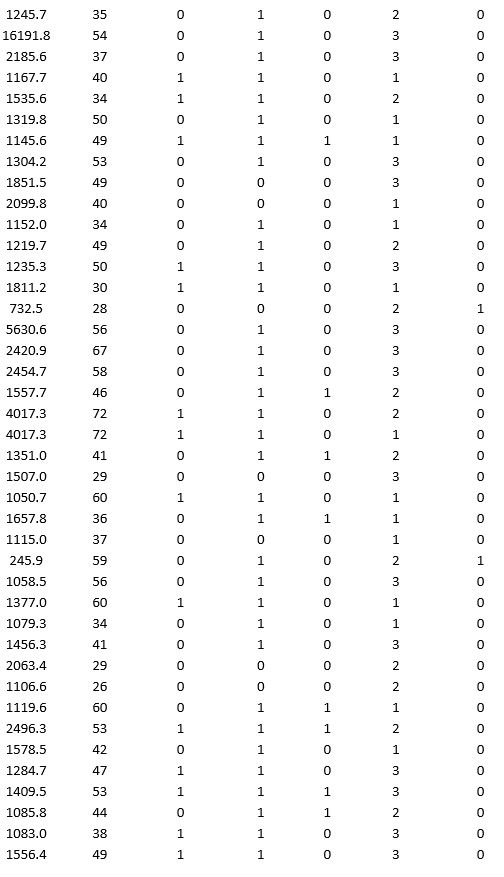

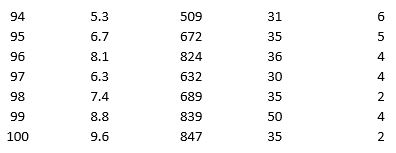

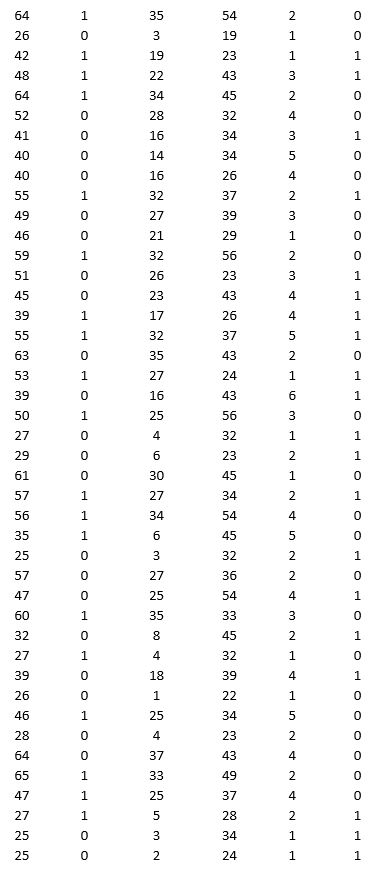

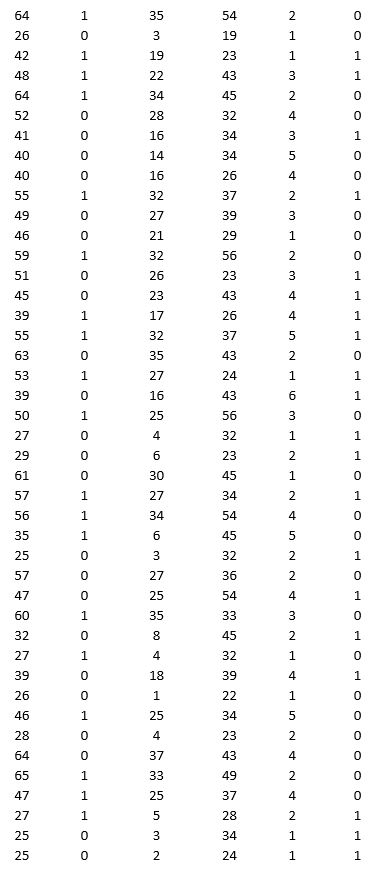

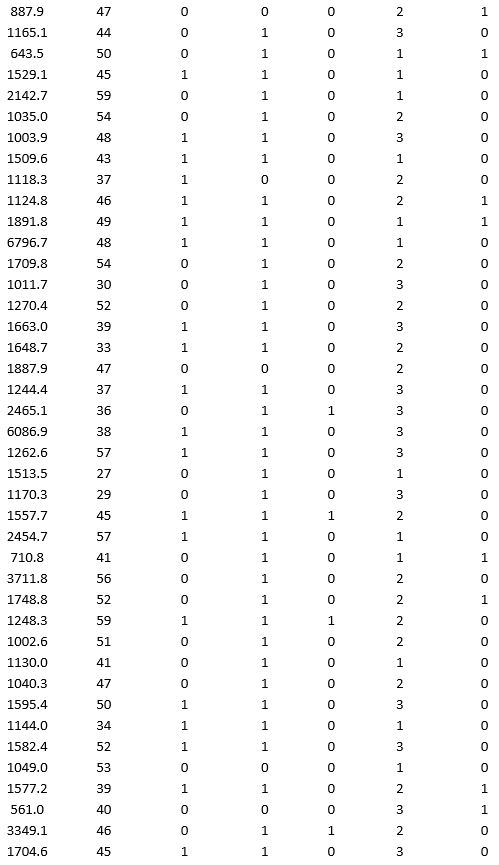

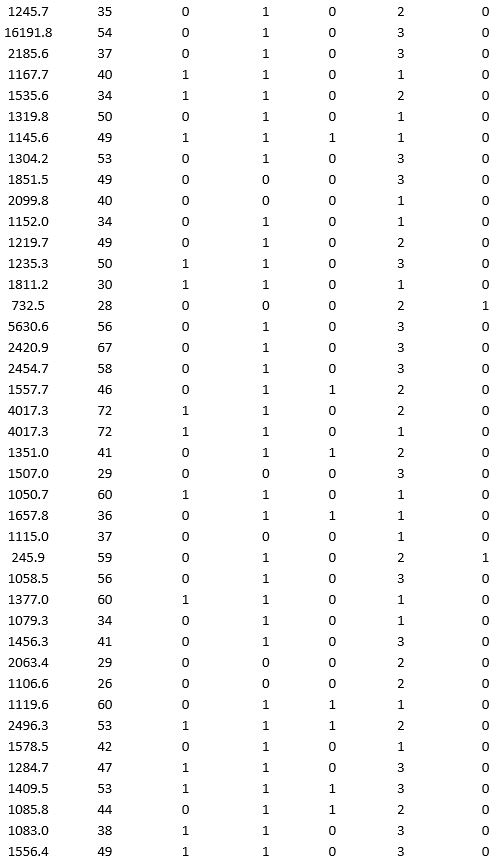

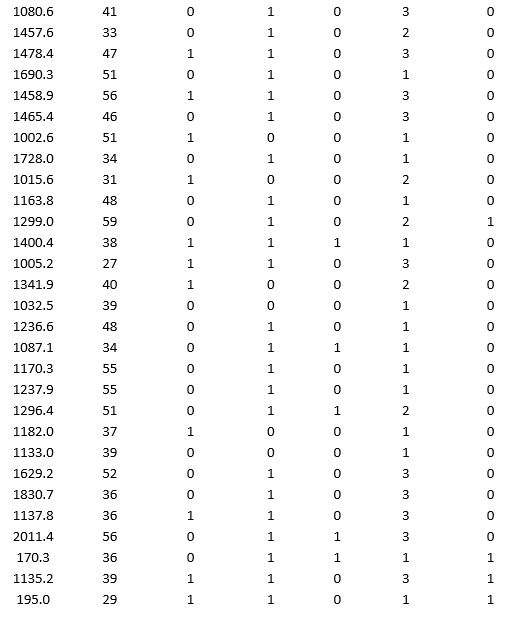

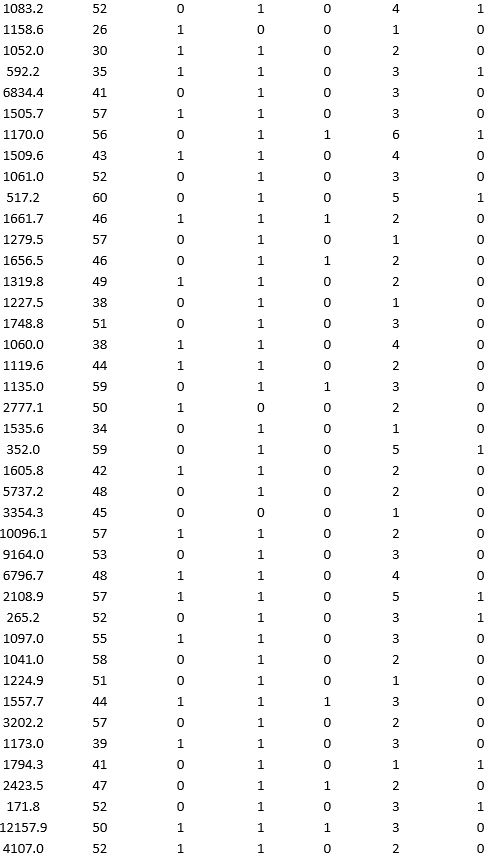

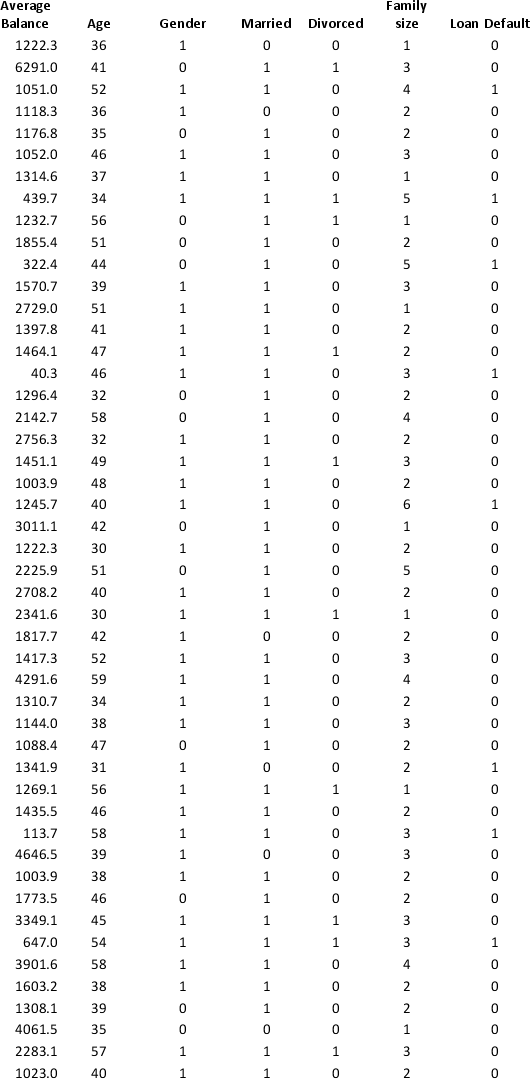

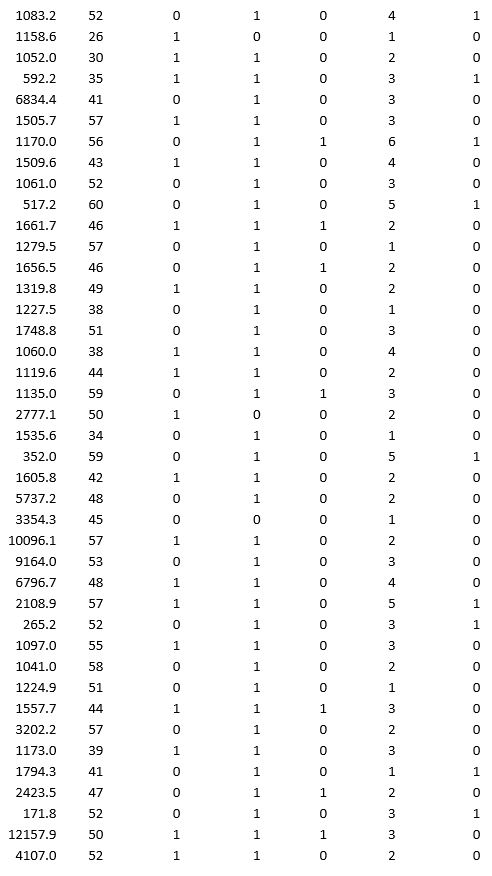

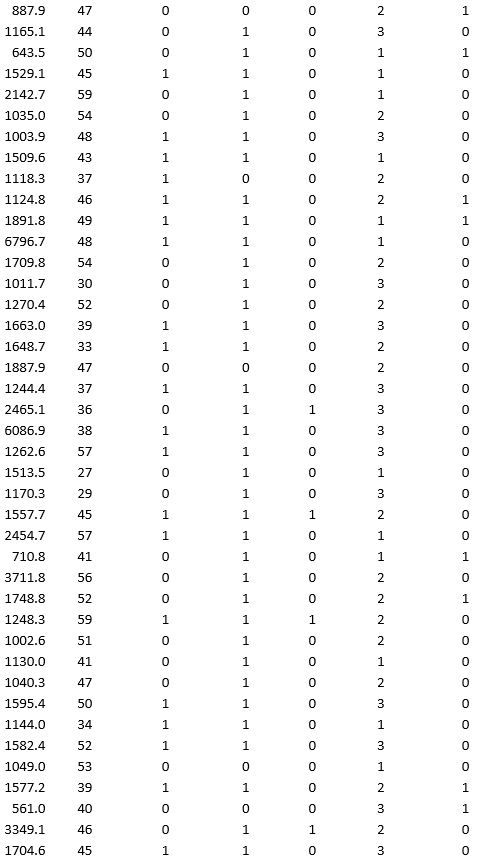

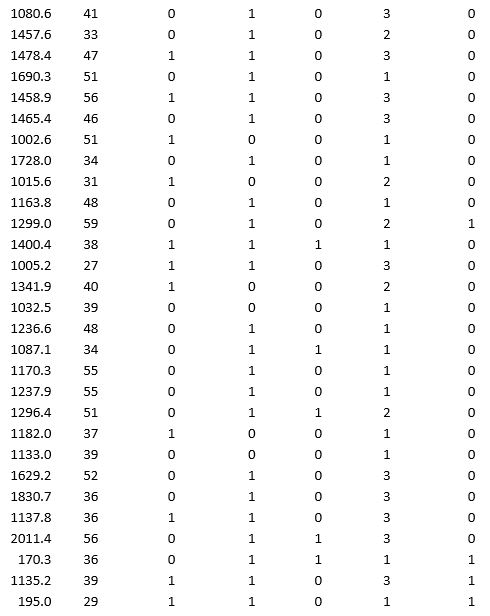

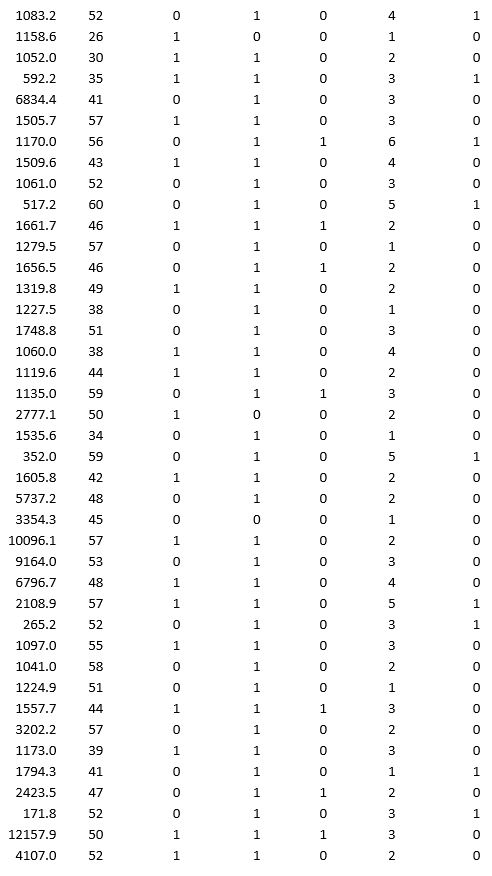

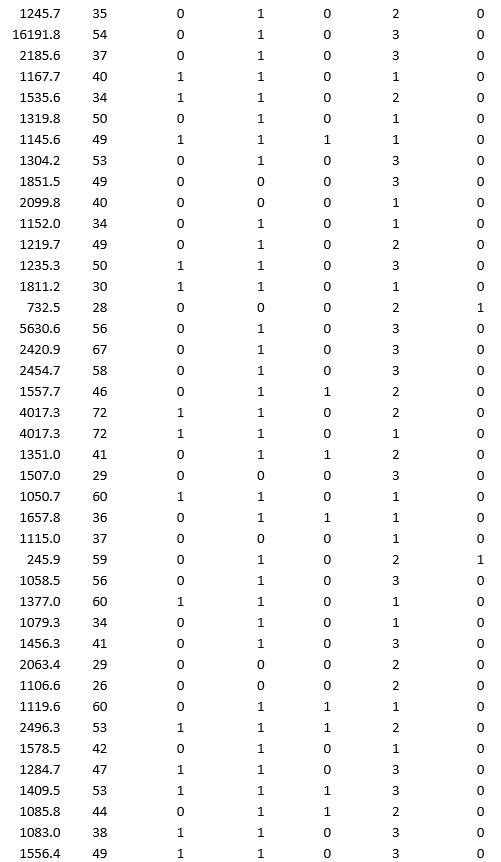

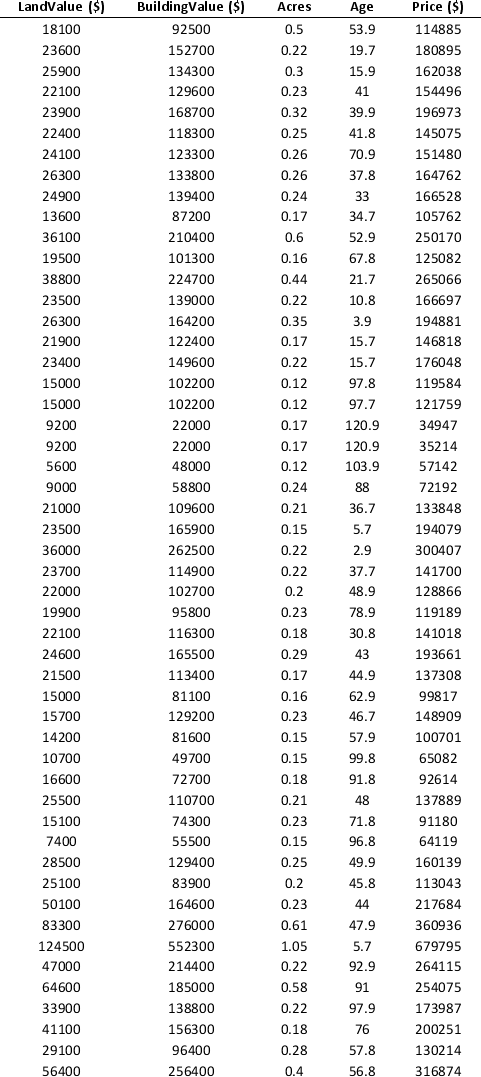

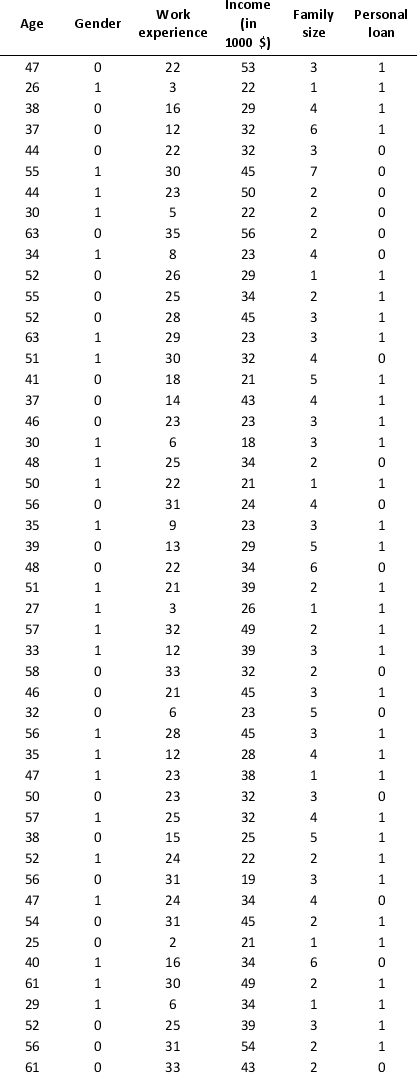

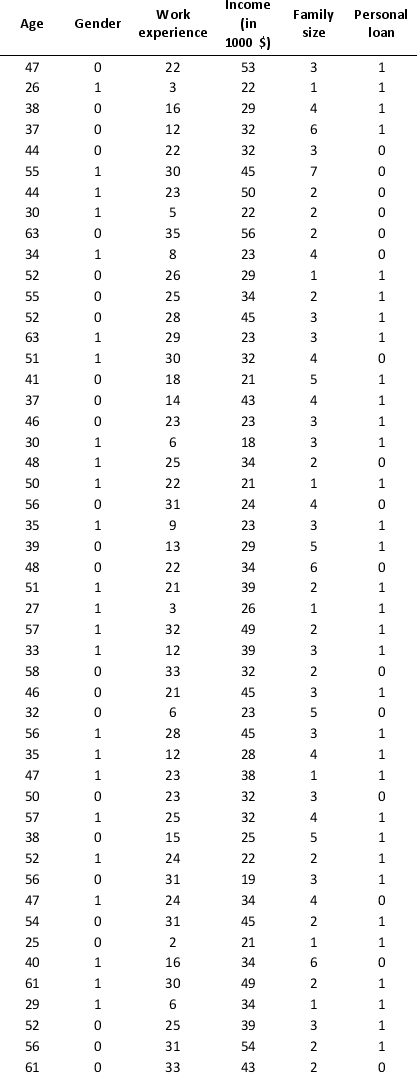

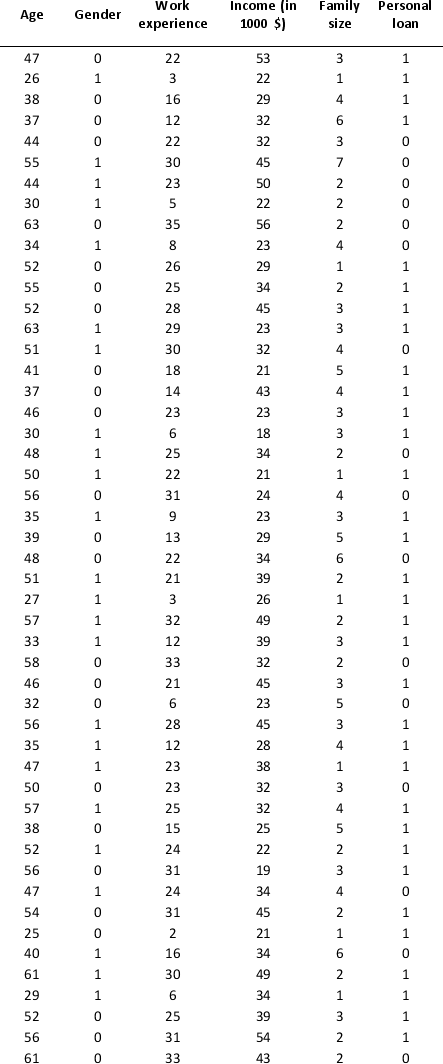

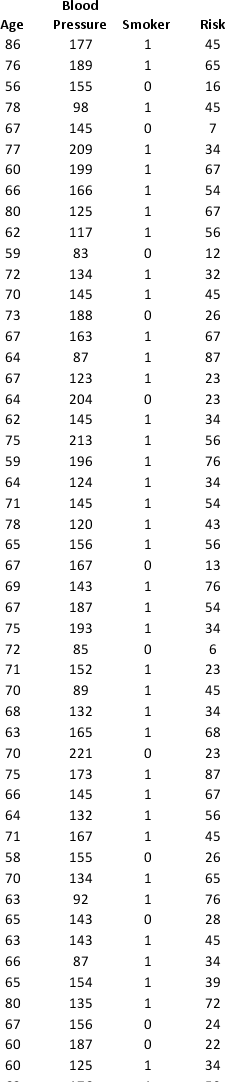

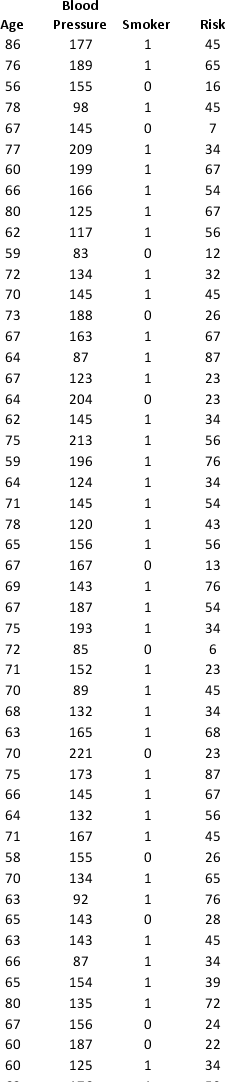

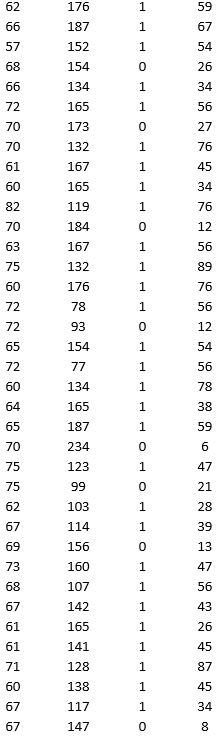

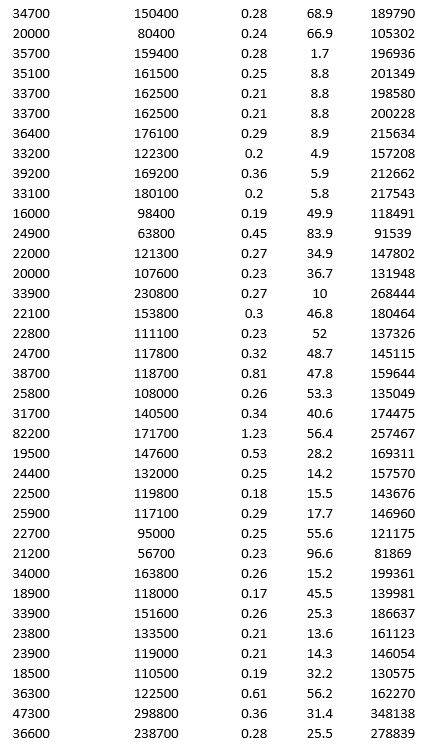

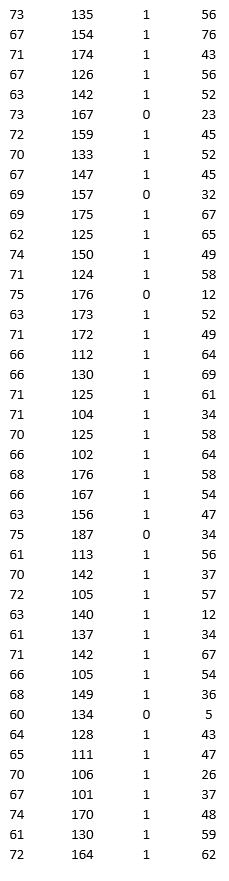

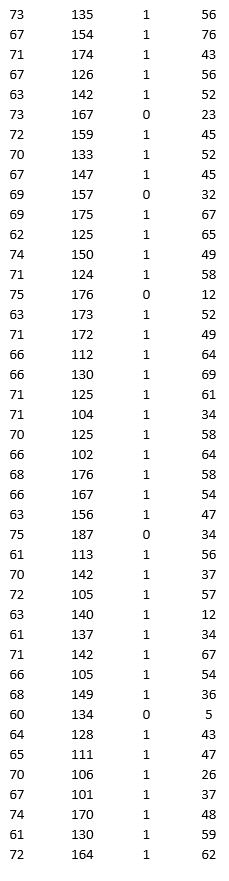

A bank wants to better understand the characteristics of customers who are likely to default on a loan. To analyze this, data from a random sample of 200 customers are provided in the accompanying data table.

In XLMiner's Partition with Oversampling procedure, partition the data so that successes (Loan default) make up 50 percent of the training set and 40 percent of the validation data are set aside as test data. Construct a logistic regression model using Loan default as the output variable and all the other variables as input variables. Perform an exhaustive-search best subset selection with the number of best subsets equal to 2. Generate lift charts for both the validation data and test data.

a. From the generated set of logistic regression models, select one that is a good fit. Express the model as a mathematical equation relating the output variable to the input variables. Do the relationships suggested by the model make sense? Try to explain them.

b. Using the default cutoff value of 0.5 for your logistic regression model, what is the overall error rate on the test data?

c. Examine the decile-wise lift chart for your model on the test data. What is the first decile lift? Interpret this value.
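
The first-decile lift compares the success rate among the 10 percent of observations with the highest predicted probabilities to the overall success rate. The sketch assumes 20 hypothetical test observations (so each decile holds 2) and ignores any tie-handling or rounding choices a tool such as XLMiner may make.

```python
def decile_lift(probabilities, actuals, decile=1):
    """Success rate within one decile (ranked by predicted probability) / overall success rate."""
    ranked = [actual for _, actual in
              sorted(zip(probabilities, actuals), key=lambda pair: pair[0], reverse=True)]
    size = len(ranked) // 10  # assumes the number of observations is a multiple of 10
    group = ranked[(decile - 1) * size: decile * size]
    overall_rate = sum(actuals) / len(actuals)
    return (sum(group) / len(group)) / overall_rate


# Hypothetical test data: predicted probability of success and actual outcome (1 = success)
probs = [0.95, 0.90, 0.85, 0.80, 0.75, 0.70, 0.65, 0.60, 0.55, 0.50,
         0.45, 0.40, 0.35, 0.30, 0.25, 0.20, 0.15, 0.10, 0.05, 0.01]
actual = [1, 1, 0, 1, 0, 0, 0, 0, 1, 0,
          0, 0, 0, 0, 0, 0, 0, 0, 0, 0]
print(decile_lift(probs, actual, decile=1))
```

A first-decile lift of 5, as in this made-up data, would read: targeting the top 10 percent of cases ranked by the model finds successes at five times the rate of picking cases at random.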

42

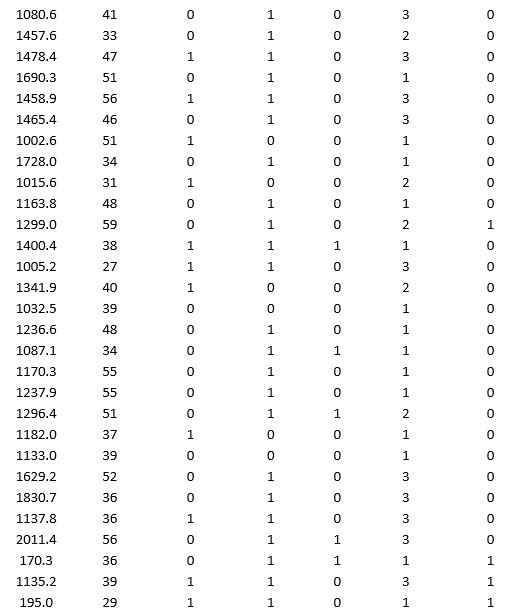

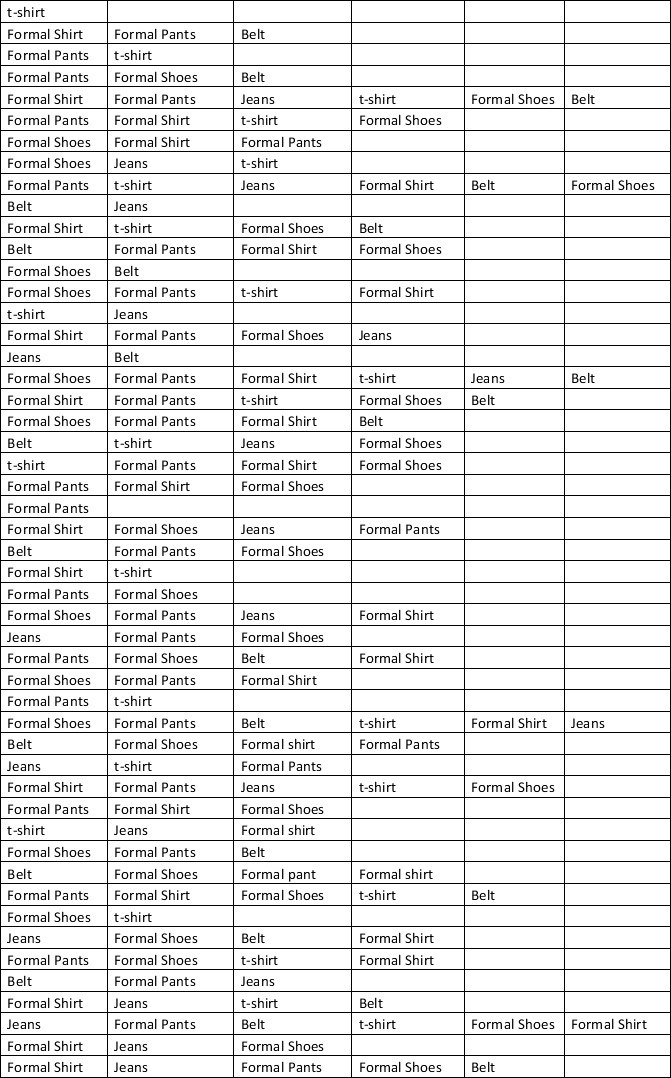

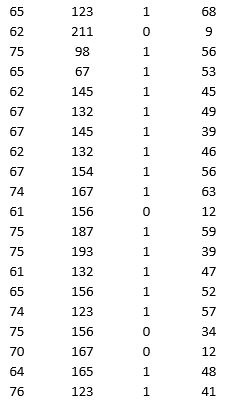

A retailer is interested in analyzing the shopping patterns of male customers for six items: Shirts, Pants, Jeans, T-shirts, Shoes, and Belts. A sample of 50 male customers is selected, and the data are provided in the accompanying table.

a. Using a minimum support of 20 transactions and a minimum confidence of 50 percent, use XLMiner to generate a list of association rules. How many rules satisfy this criterion?

b. Using the list of rules from part a, consider the rule with the largest lift ratio. Interpret what this rule is saying about the relationship between the antecedent item set and consequent item set.

c. Interpret the support count of the item set composed of all the items involved in the rule with the largest lift ratio.

d. Interpret the confidence of the rule with the largest lift ratio.

e. Interpret the lift ratio of the rule with the largest lift ratio.
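
Support, confidence, and lift can be computed directly from a list of transactions. The sketch below is a simplified illustration, not XLMiner's procedure: it only considers rules with one item on each side, and the six transactions and the thresholds used in the call are hypothetical (the question itself uses a minimum support of 20 transactions and 50 percent confidence).

```python
from itertools import combinations


def pairwise_rules(transactions, min_support, min_confidence):
    """Return (antecedent, consequent, support count, confidence, lift), largest lift first."""
    n = len(transactions)

    def support_count(itemset):
        return sum(1 for t in transactions if itemset <= t)

    rules = []
    for a, b in combinations(sorted(set().union(*transactions)), 2):
        both = support_count({a, b})  # transactions containing both items
        if both < min_support:
            continue
        for antecedent, consequent in ((a, b), (b, a)):
            confidence = both / support_count({antecedent})
            if confidence >= min_confidence:
                lift = confidence / (support_count({consequent}) / n)
                rules.append((antecedent, consequent, both, confidence, lift))
    return sorted(rules, key=lambda rule: rule[4], reverse=True)


transactions = [  # hypothetical shopping baskets
    {"Shirts", "Pants"}, {"Shirts", "Pants", "Belts"}, {"Jeans", "Shoes"},
    {"Shirts", "Belts"}, {"Jeans", "Shoes", "Belts"}, {"Shirts", "Pants"},
]
for rule in pairwise_rules(transactions, min_support=2, min_confidence=0.5):
    print("{} -> {}: support {}, confidence {:.2f}, lift {:.2f}".format(*rule))
```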

43

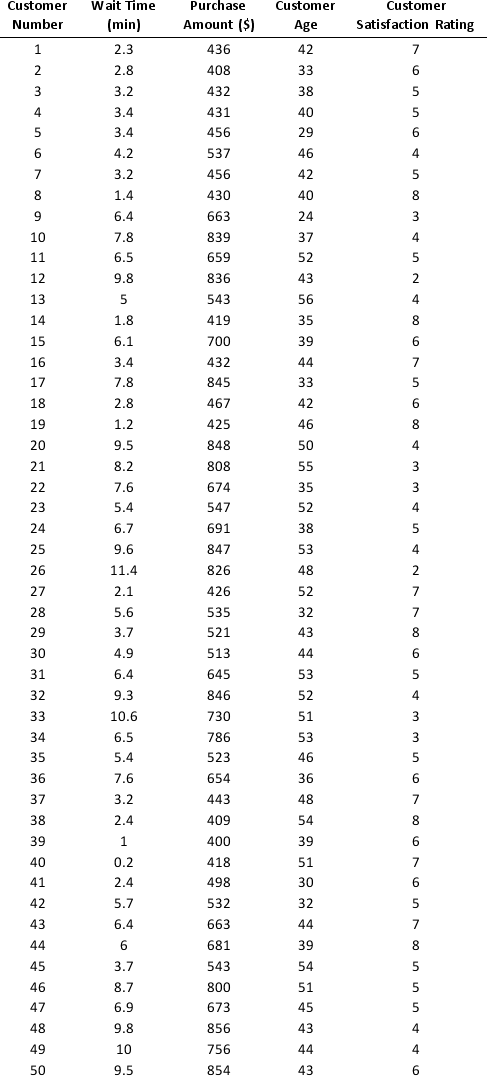

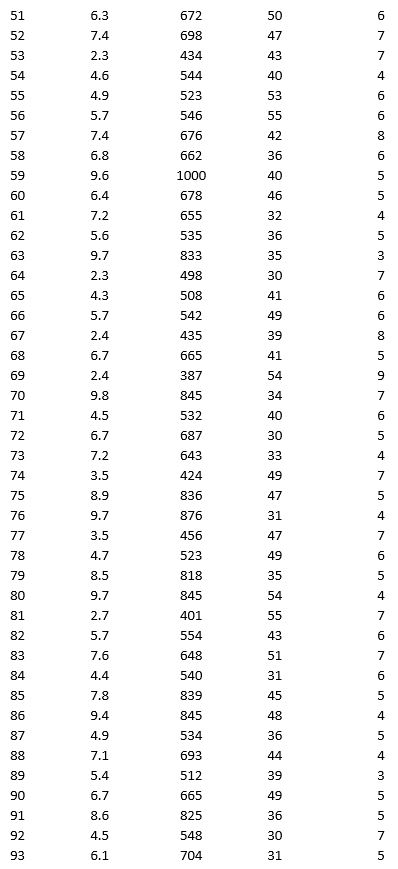

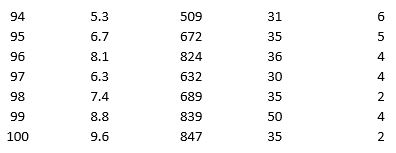

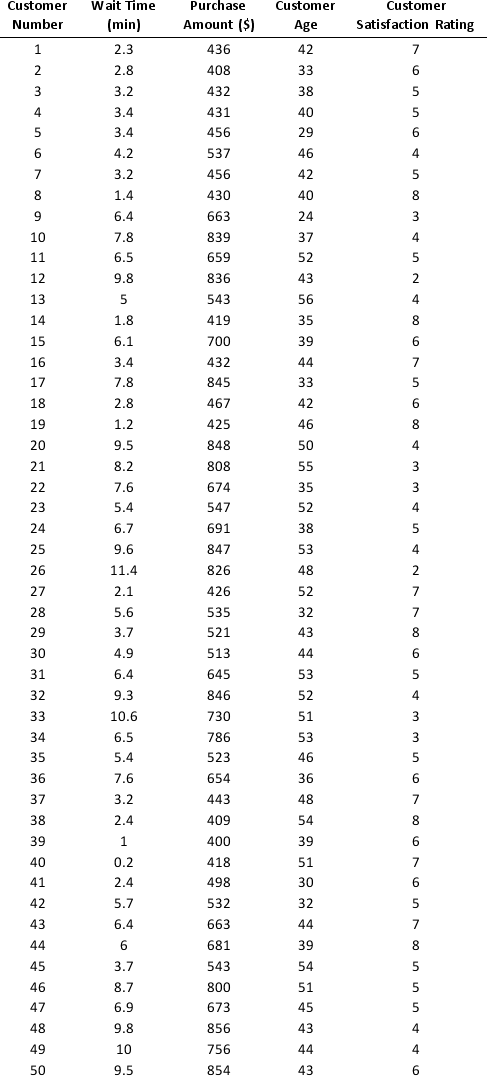

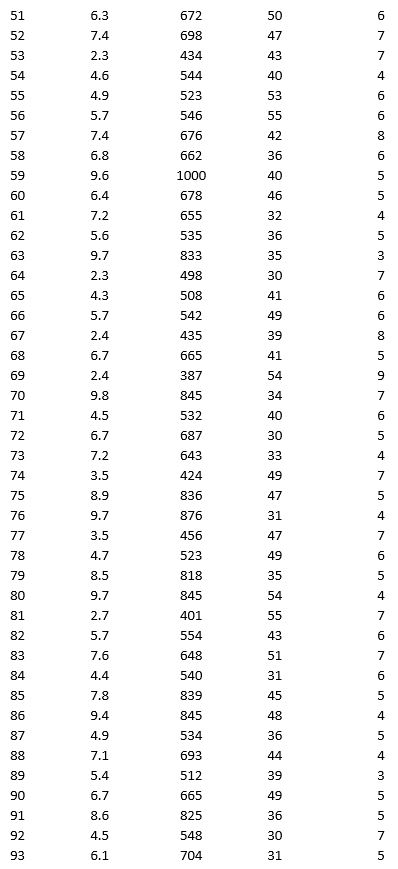

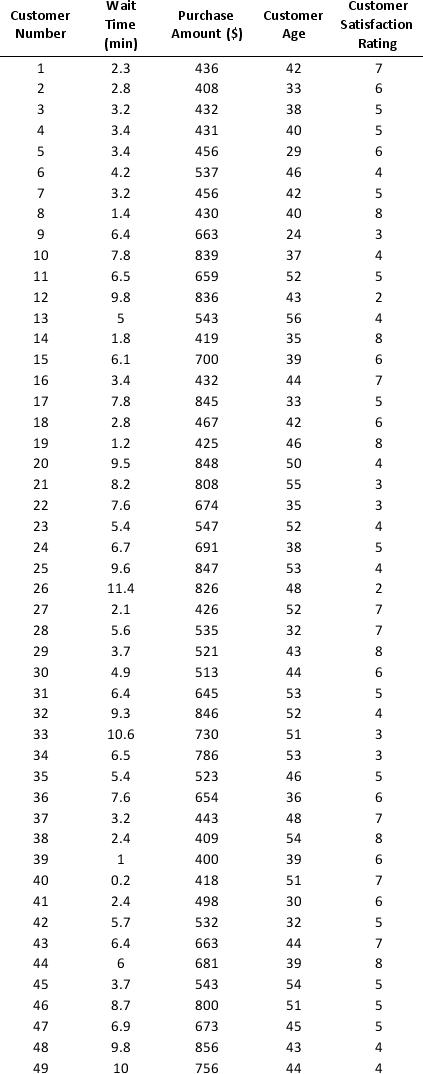

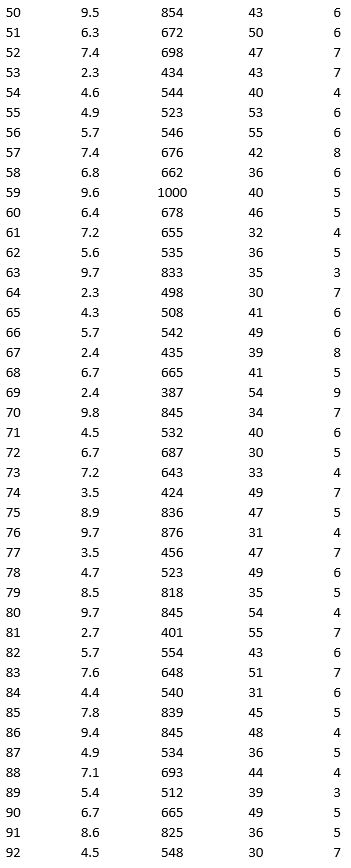

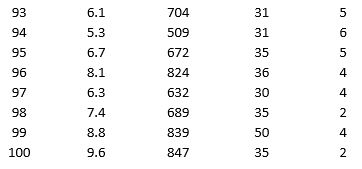

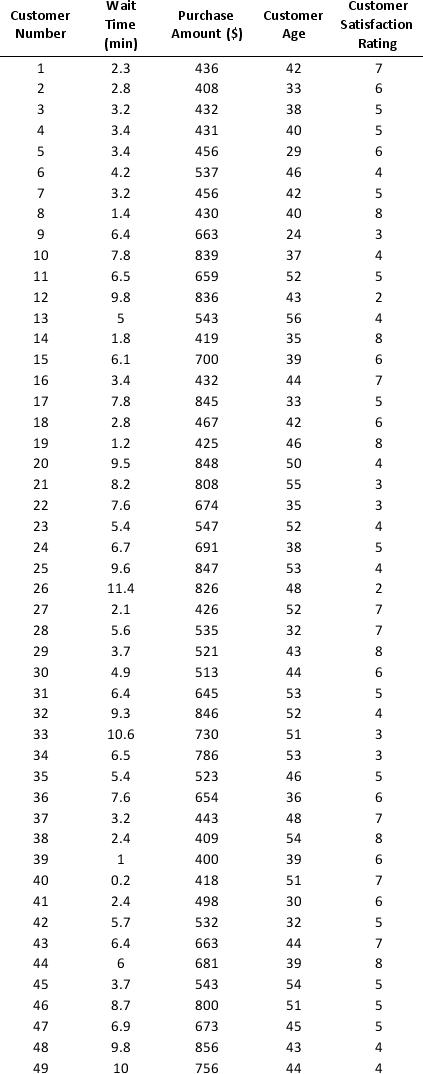

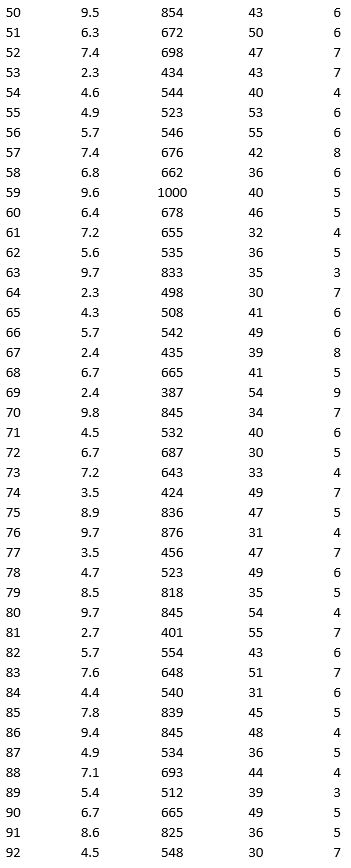

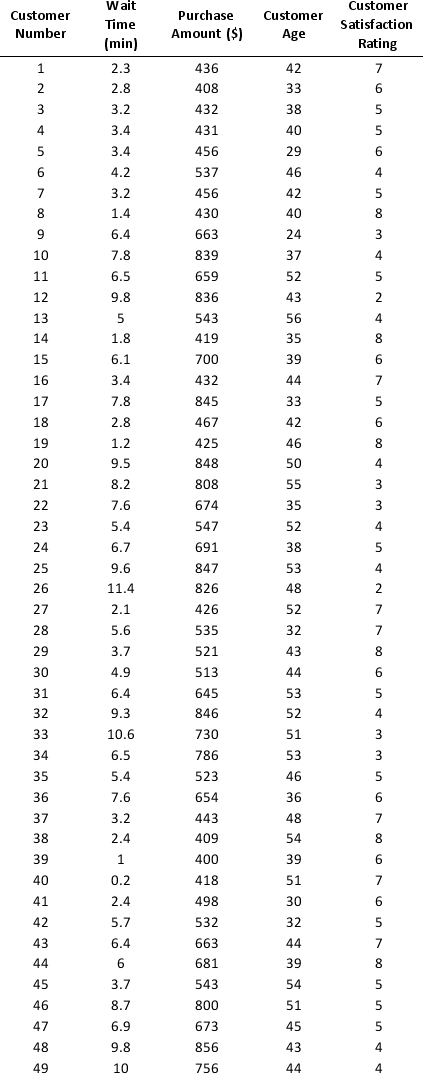

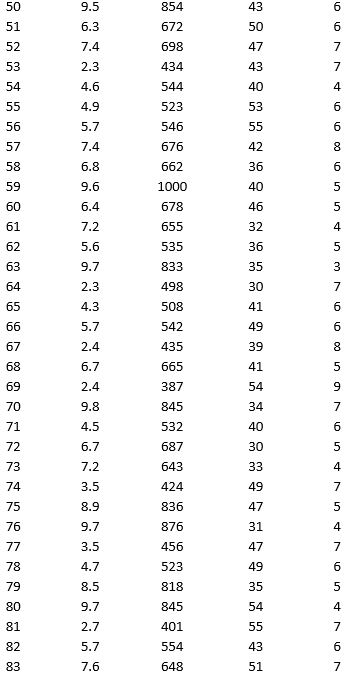

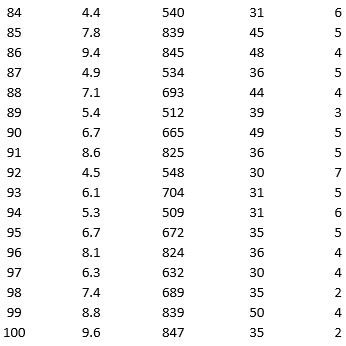

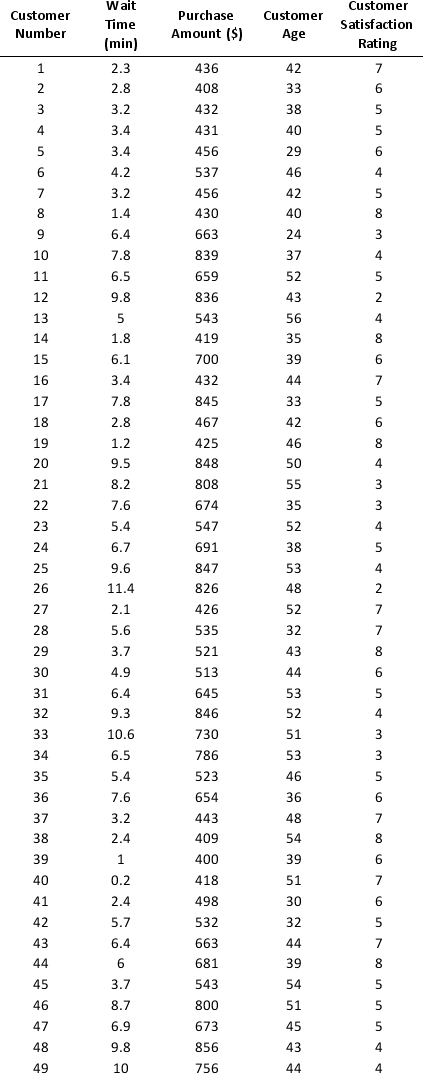

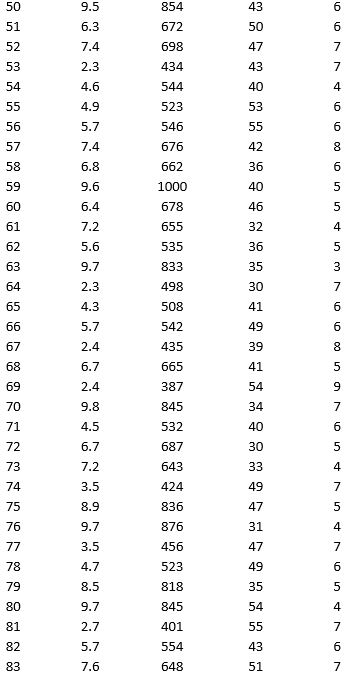

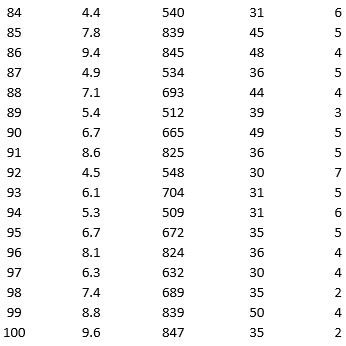

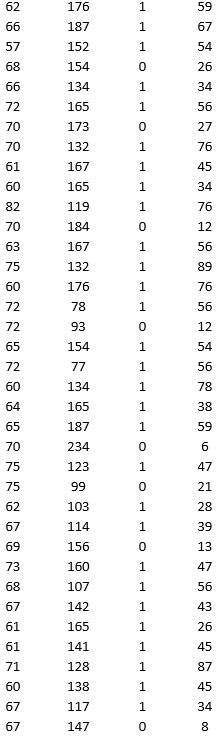

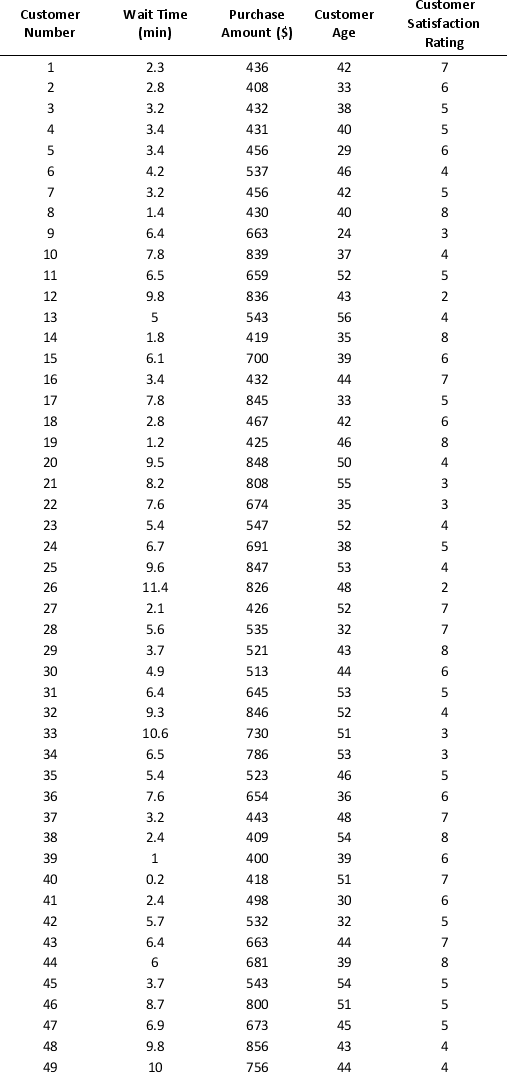

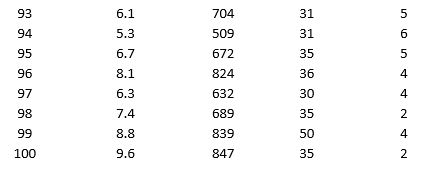

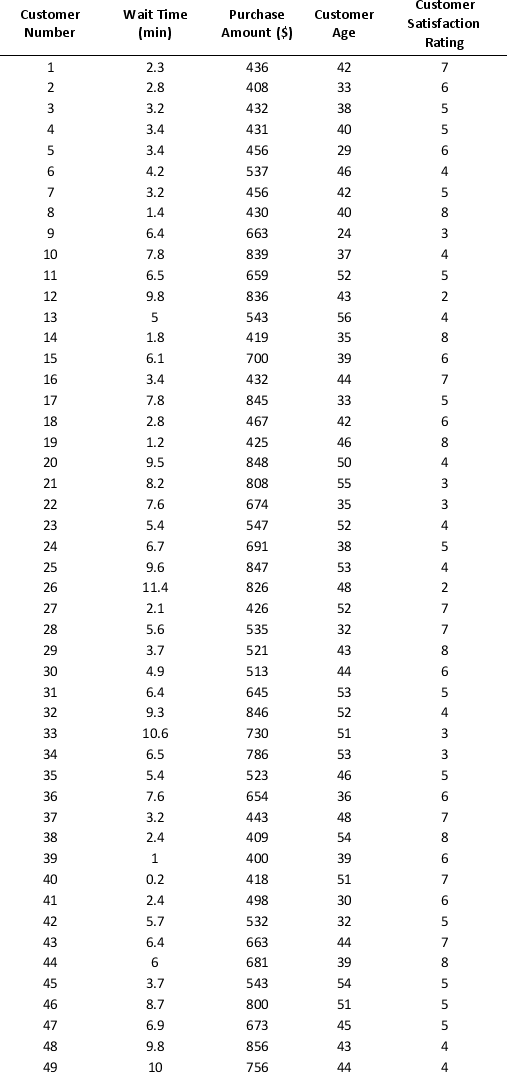

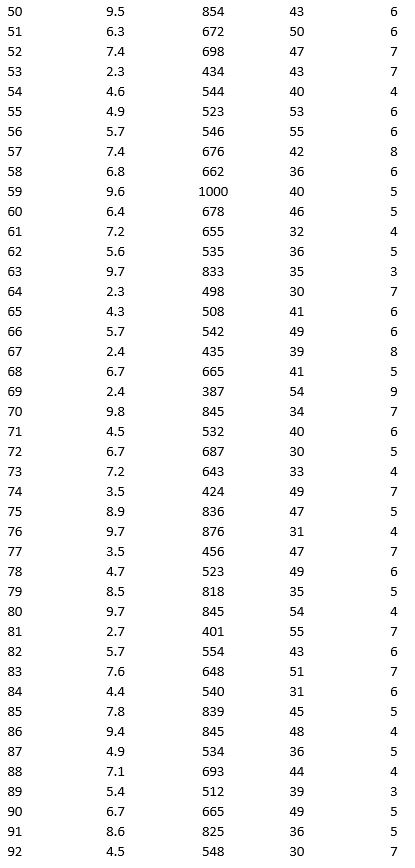

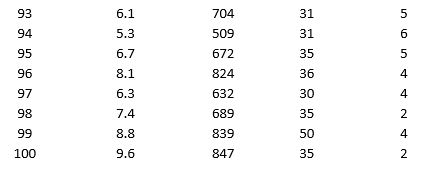

As part of the quarterly reviews, the manager of a retail store analyzes the quality of customer service based on periodic customer satisfaction ratings (on a scale of 1 to 10, with 1 = Poor and 10 = Excellent). To assess service quality, including customers' waiting times at checkout, the manager collected the following data on 100 customers who visited the store.

For the above data, apply k-means clustering using Wait time (min) as the variable with k = 3. Be sure to Normalize input data, and specify 50 iterations and 10 random starts in Step 2 of the XLMiner k-Means Clustering procedure. Then create one distinct data set for each of the three resulting clusters for waiting time.

a. For the observations composing the cluster which has the low waiting time, apply hierarchical clustering with Ward's method to form two clusters using Purchase Amount, Customer Age, and Customer Satisfaction Rating as variables. Be sure to Normalize input data in Step 2 of the XLMiner Hierarchical Clustering procedure. Using a PivotTable on the data in HC_Clusters, report the characteristics of each cluster.

b. For the observations composing the cluster which has the medium waiting time, apply hierarchical clustering with Ward's method to form three clusters using Purchase Amount, Customer Age, and Customer Satisfaction Rating as variables. Be sure to Normalize input data in Step 2 of the XLMiner Hierarchical Clustering procedure. Using a PivotTable on the data in HC_Clusters, report the characteristics of each cluster.

c. For the observations composing the cluster which has the high waiting time, apply hierarchical clustering with Ward's method to form two clusters using Purchase Amount, Customer Age, and Customer Satisfaction Rating as variables. Be sure to Normalize input data in Step 2 of the XLMiner Hierarchical Clustering procedure. Using a PivotTable on the data in HC_Clusters, report the characteristics of each cluster.

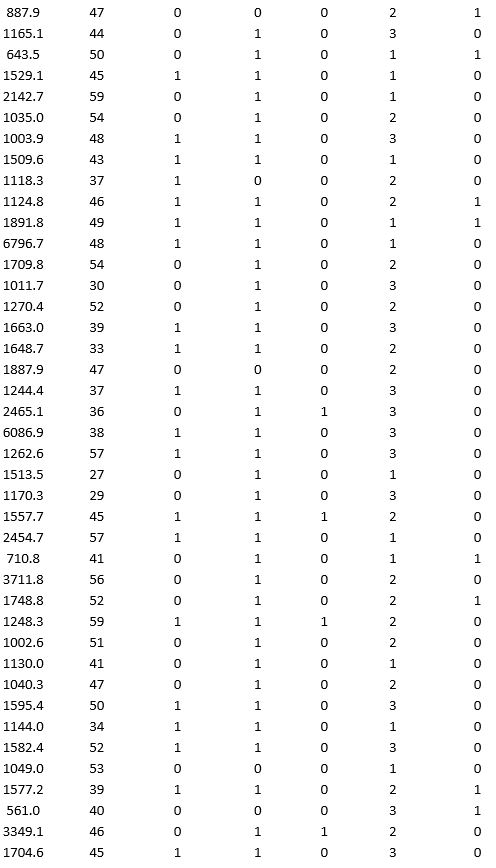

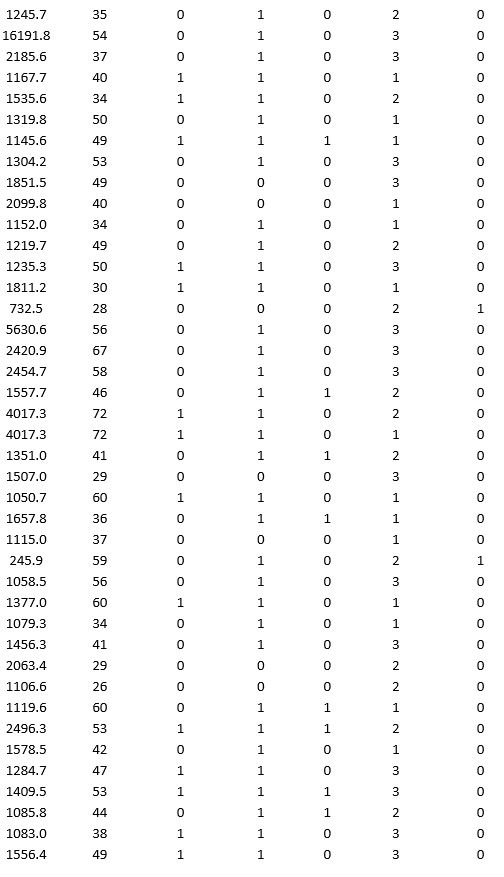

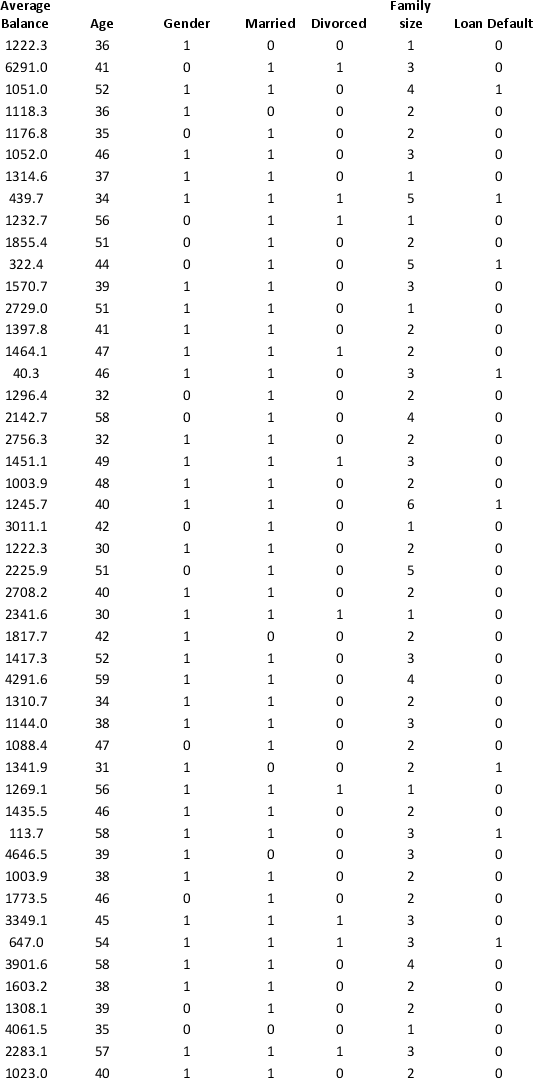

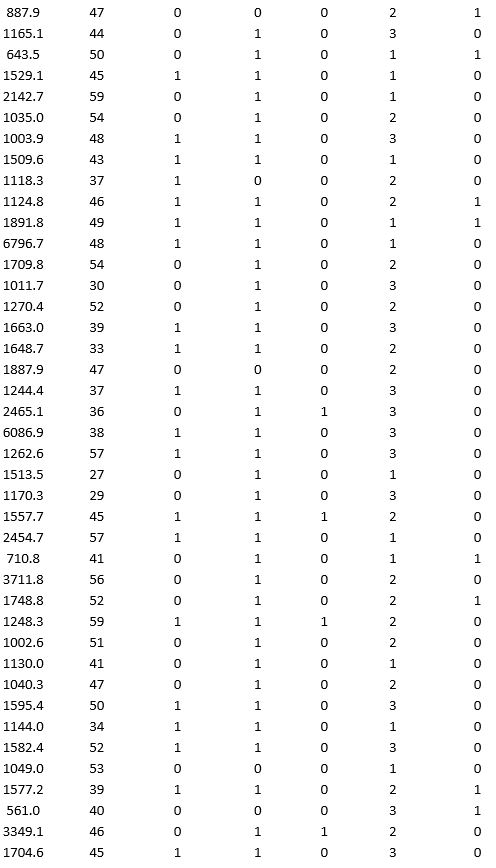

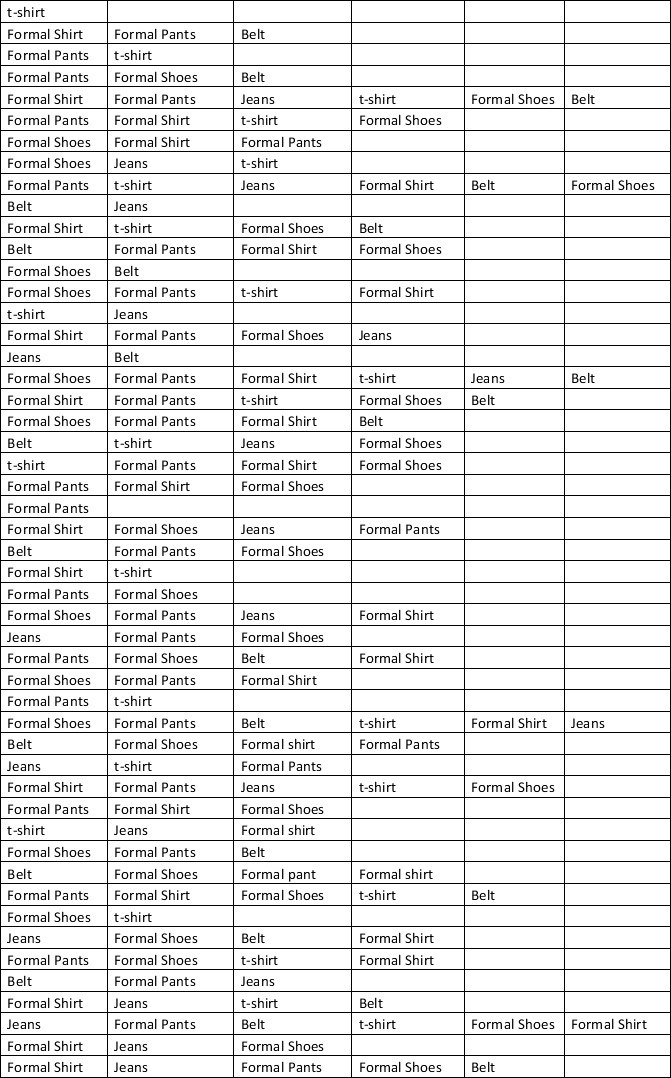

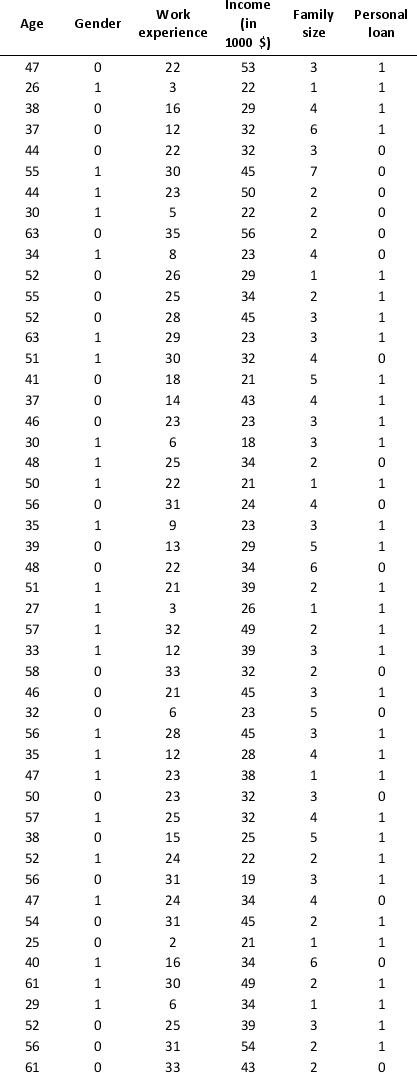

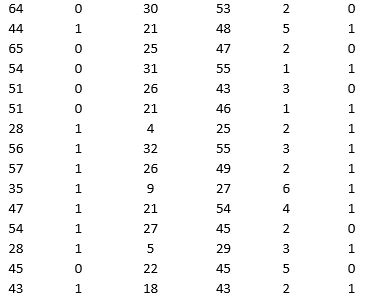

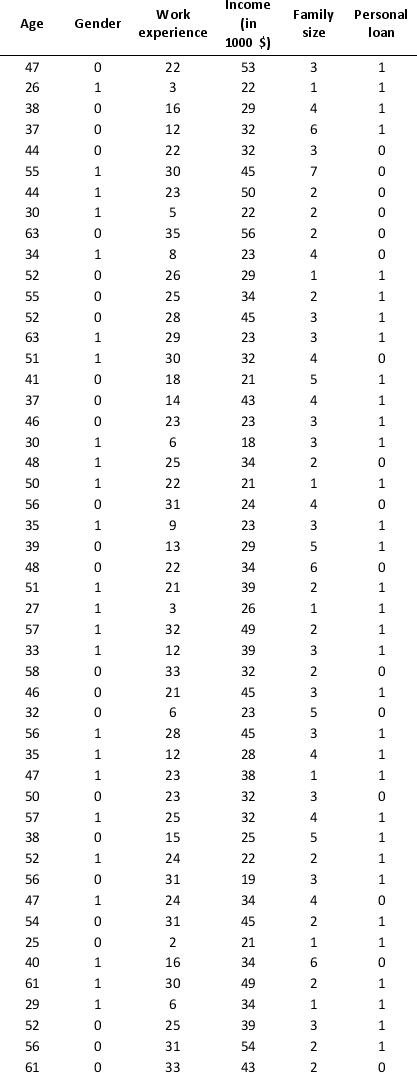

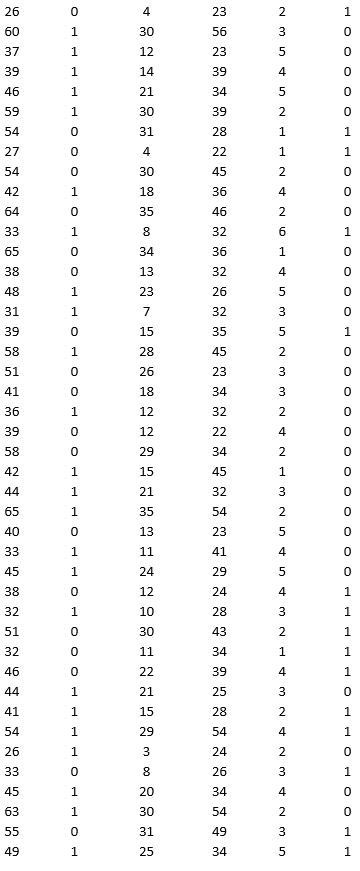

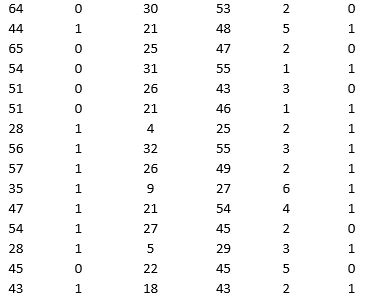

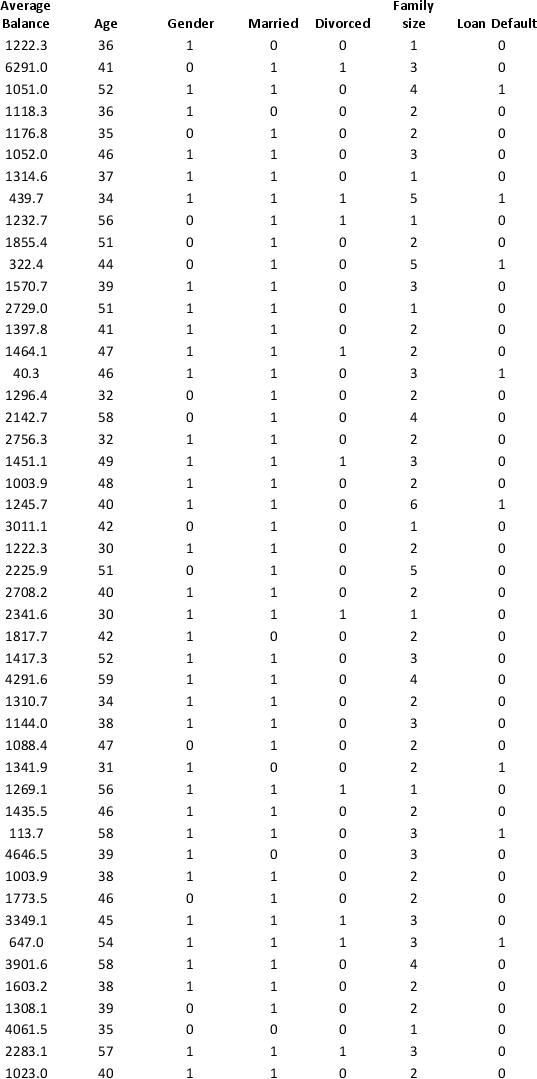

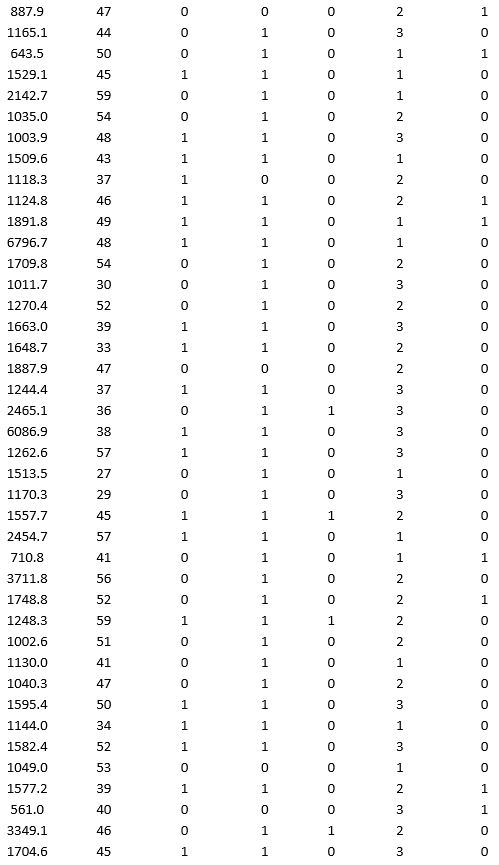

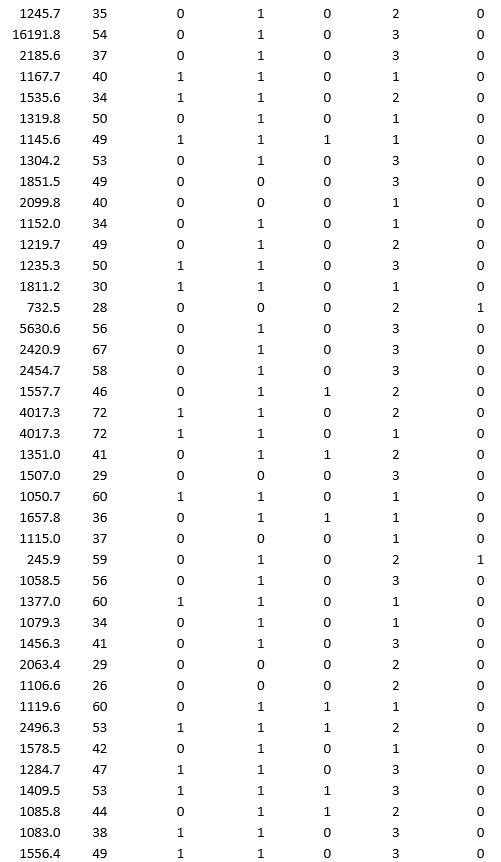

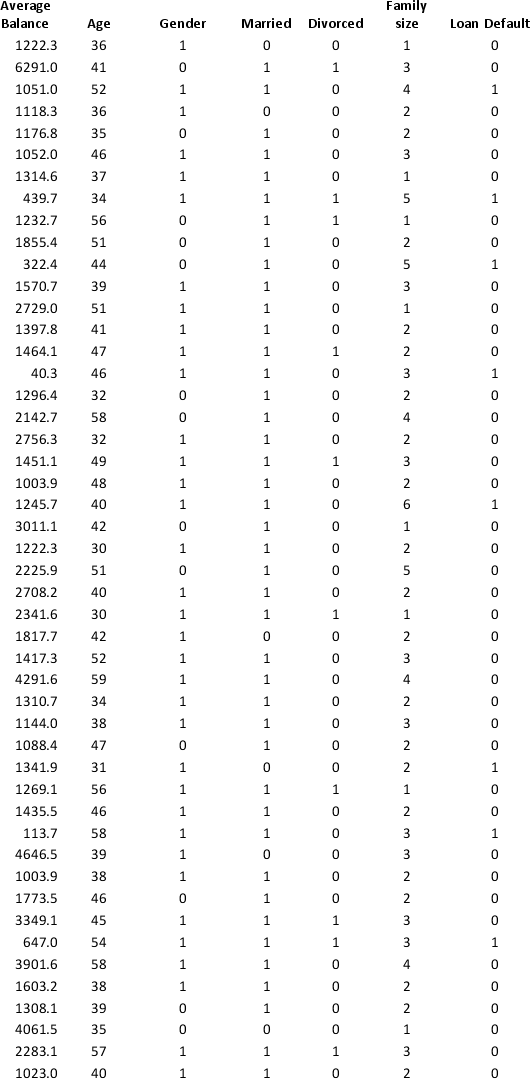

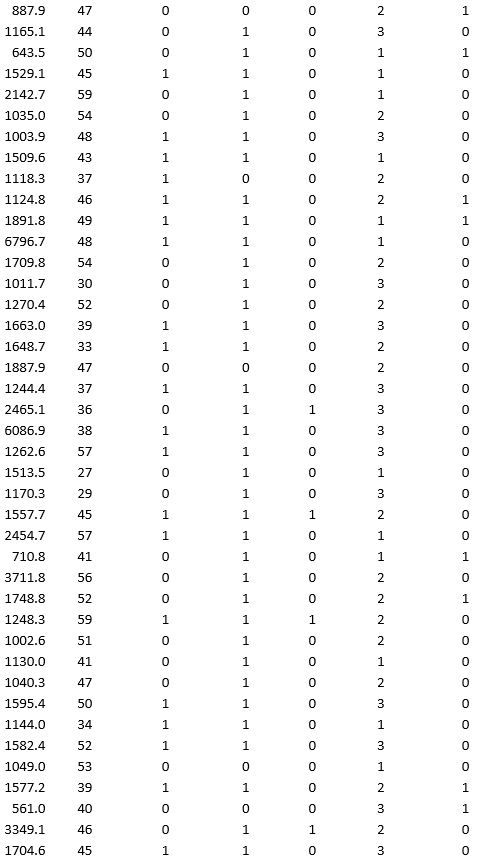

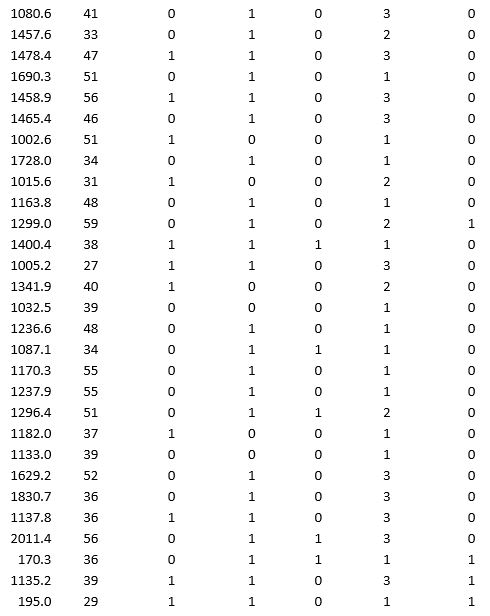

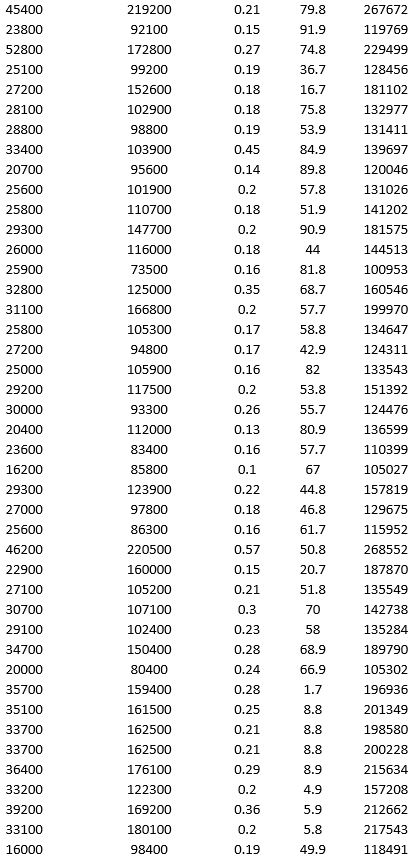

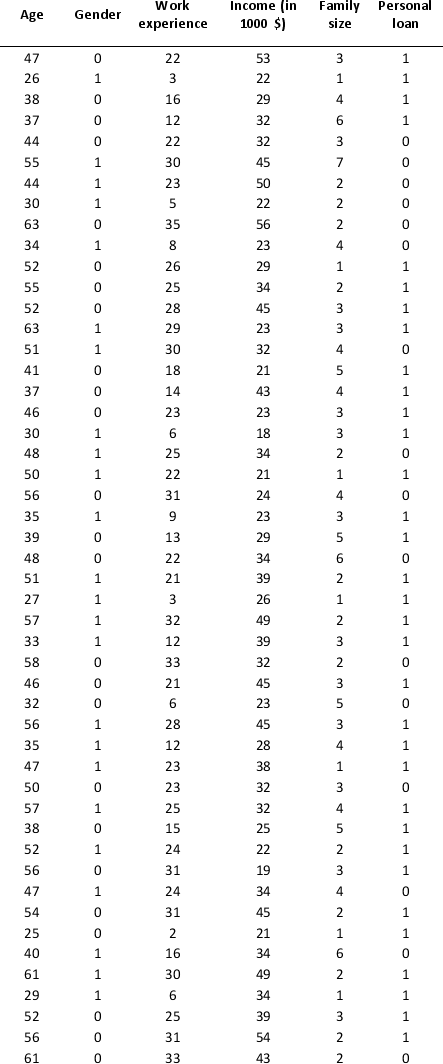

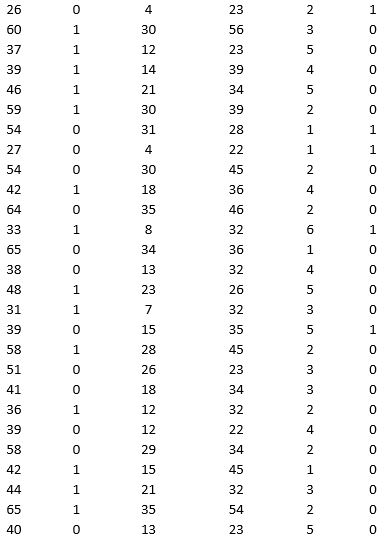

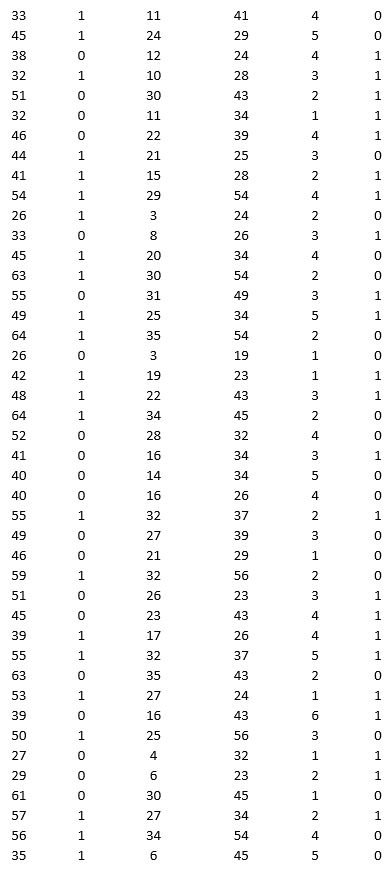

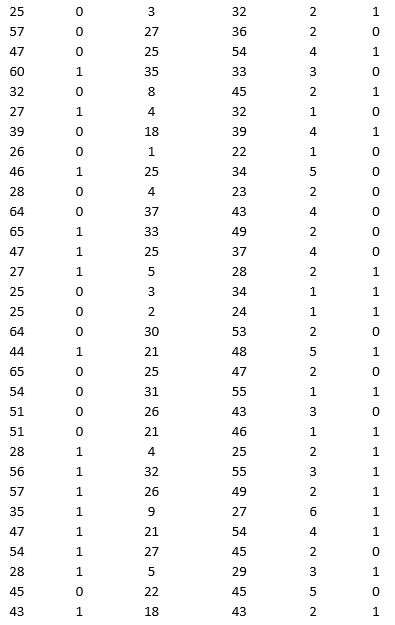

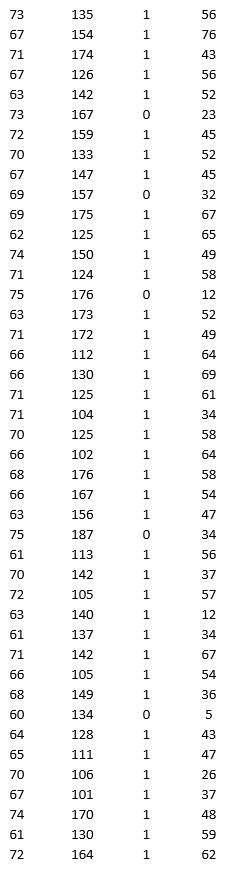

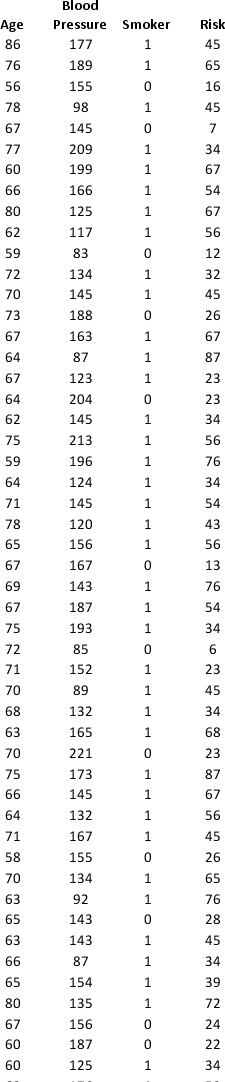

44

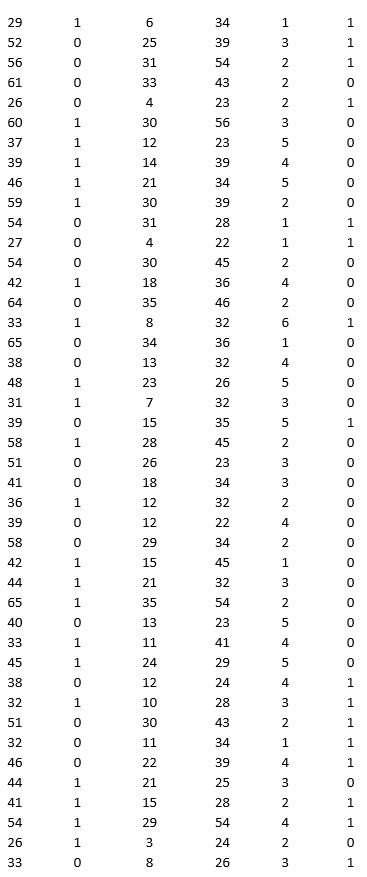

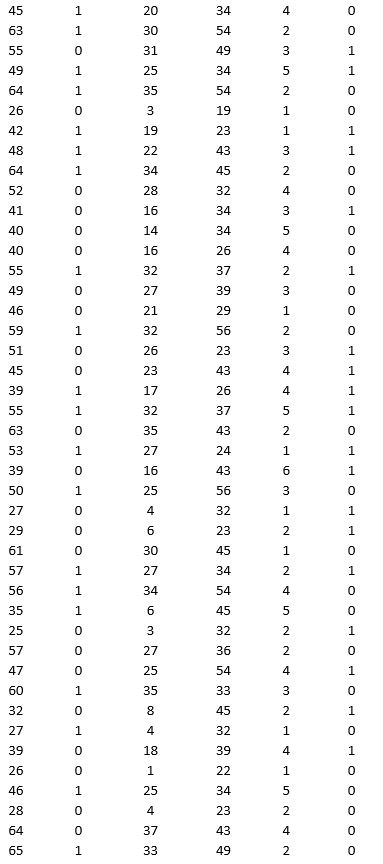

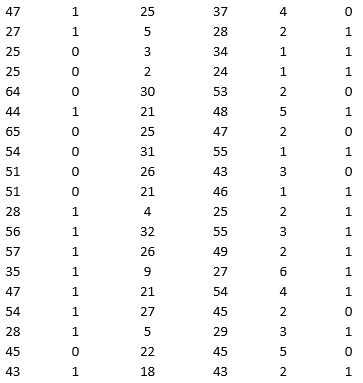

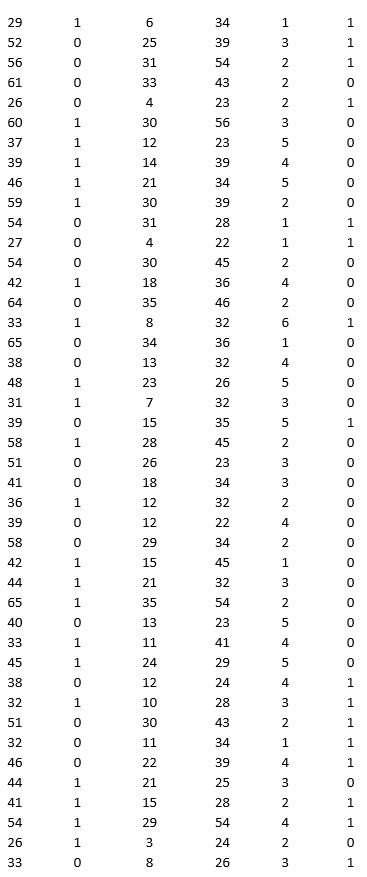

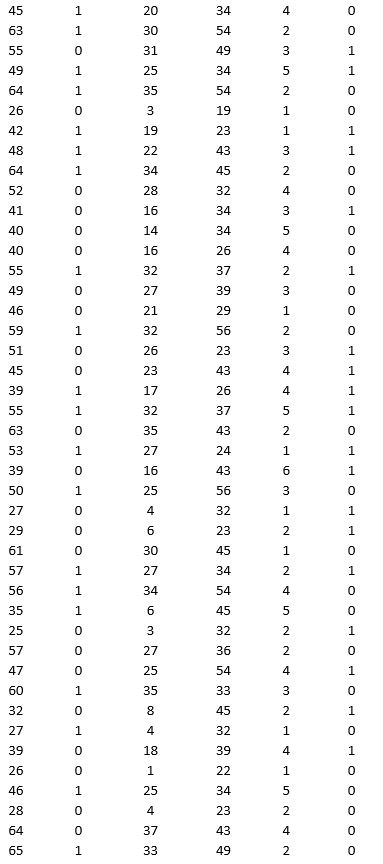

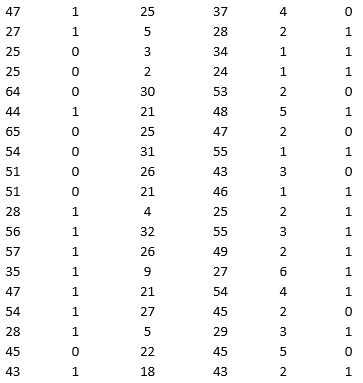

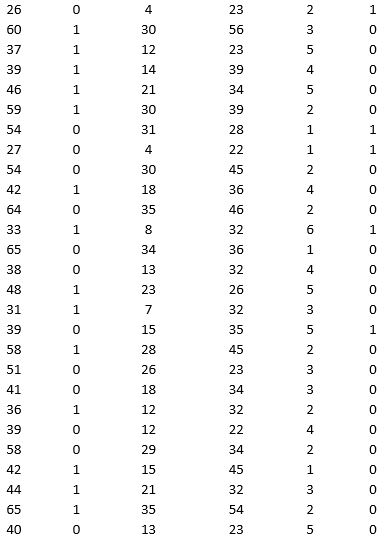

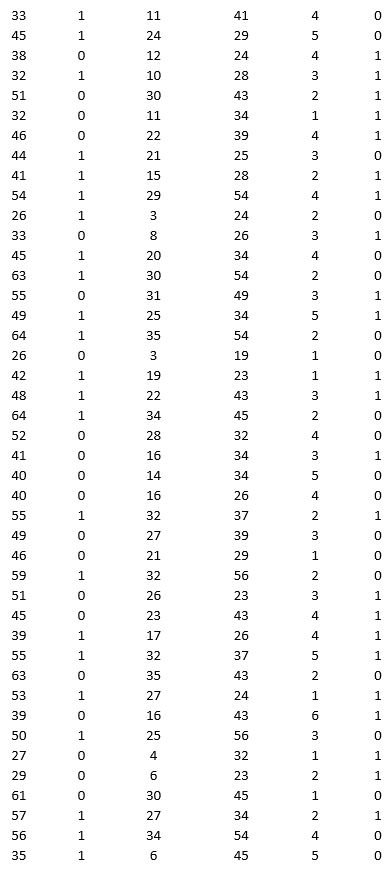

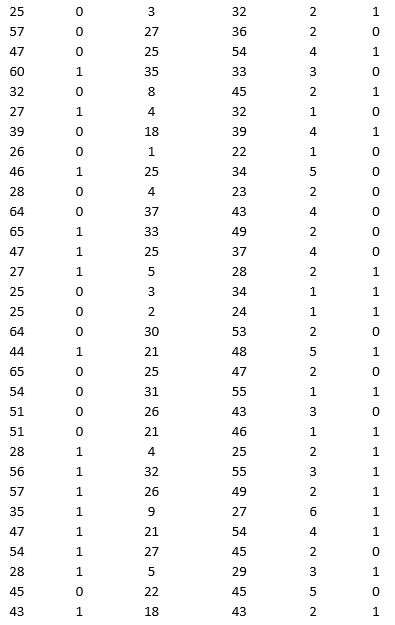

A bank is interested in identifying different attributes of its customers, and sample data for 150 customers are provided in the accompanying table. In the data table, the dummy variable Gender is coded 0 = Male and 1 = Female, and the dummy variable Personal loan is coded 0 = the customer has not taken a personal loan and 1 = the customer has taken a personal loan.

Partition the data into training (50 percent), validation (30 percent), and test (20 percent) sets. Use logistic regression to classify observations as Personal loan taken (or not taken) using Age, Gender, Work experience, Income (in 1000 $), and Family size as input variables and Personal loan as the output variable. Perform an exhaustive-search best subset selection with the number of best subsets equal to 2.

a. From the generated set of logistic regression models, select one that you believe is a good fit. Express the model as a mathematical equation relating the output variable to the input variables.

b. Increases in which variables increase the probability that a customer has taken a personal loan? Increases in which variables decrease that probability?

c. Using the default cutoff value of 0.5 for your logistic regression model, what is the overall error rate on the test data?


45

A bank wants to better understand the characteristics of customers who are likely to default on a loan. To analyze this, data from a random sample of 200 customers are given below:

In XLMiner's Partition with Oversampling procedure, partition the data so that the training set contains 50 percent successes (Loan default), and set aside 40 percent of the validation data as test data. Fit a classification tree using Loan Default as the output variable and all the other variables as input variables. In Step 2 of XLMiner's Classification Tree procedure, be sure to Normalize input data, and set the Minimum #records in a terminal node to 1. In Step 3 of XLMiner's Classification Tree procedure, set the maximum number of levels to 7. Generate the Full tree, Best pruned tree, and Minimum error tree. Generate lift charts for both the validation data and test data.

a. Why is partitioning with oversampling advised in this case?

b. Interpret the set of rules implied by the best pruned tree that characterize loan defaulters.

c. For the default cutoff value of 0.5, what are the overall error rate, Class 1 error rate, and Class 0 error rate of the best pruned tree on the test data?

d. Examine the decile-wise lift chart for the best pruned tree on the test data. What is the first decile lift? Interpret this value.
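Oversampling matters when successes (here, defaults) are rare: a training set dominated by non-defaulters gives a classifier few default records to learn from. The sketch below is a simplified illustration, not XLMiner's exact algorithm. It assumes half of the success records go to training, adds just enough failures to make training 50 percent successes, and splits the remaining, unbalanced records into validation and test with 40 percent going to test. The function name, the seed, and the `is_success` callback are hypothetical.

```python
import random

def partition_with_oversampling(records, is_success, success_share=0.5,
                                test_share=0.4, seed=12345):
    """Simplified oversampling partition (NOT XLMiner's exact algorithm)."""
    rng = random.Random(seed)
    successes = [r for r in records if is_success(r)]
    failures = [r for r in records if not is_success(r)]
    rng.shuffle(successes)
    rng.shuffle(failures)
    # Assumption: half of all success records go to the training set.
    n_succ = len(successes) // 2
    # Add just enough failures that successes make up `success_share` of training.
    n_fail = min(len(failures), round(n_succ * (1 - success_share) / success_share))
    train = successes[:n_succ] + failures[:n_fail]
    # The rest is left unbalanced, so validation/test keep a realistic rare-success mix.
    rest = successes[n_succ:] + failures[n_fail:]
    rng.shuffle(rest)
    n_test = round(len(rest) * test_share)
    return train, rest[n_test:], rest[:n_test]  # training, validation, test
```

With 200 records of which 20 are successes, this sketch yields a 20-record training set with 10 successes, 108 validation records, and 72 test records.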


46

A bank wants to better understand the characteristics of customers who are likely to default on a loan. To analyze this, data from a random sample of 200 customers are given below:

In XLMiner's Partition with Oversampling procedure, partition the data so that the training set contains 50 percent successes (Loan default), and set aside 40 percent of the validation data as test data. Classify the data using k-nearest neighbors with up to k = 10. Use Loan default as the output variable and all the other variables as input variables. In Step 2 of XLMiner's k-nearest neighbors Classification procedure, be sure to Normalize input data and to Score on best k between 1 and specified value. Generate lift charts for both the validation data and test data.

a. For the cutoff probability value 0.5, what value of k minimizes the overall error rate on the validation data?

b. What is the overall error rate on the test data? Interpret this measure.

c. What are the Class 1 error rate and the Class 0 error rate on the test data?

d. Compute and interpret the sensitivity and specificity for the test data.

e. Examine the decile-wise lift chart on the test data. What is the first decile lift on the test data? Interpret this value.
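Parts c and d rest on the confusion matrix: the Class 1 error rate is the share of actual 1s predicted as 0, the Class 0 error rate is the share of actual 0s predicted as 1, sensitivity = 1 - Class 1 error rate, and specificity = 1 - Class 0 error rate. The sketch below also shows z-score normalization and a k-nearest-neighbors Class 1 probability (the share of the k nearest training records that are defaults), which is then compared with the 0.5 cutoff. It assumes Euclidean distance and population standard deviations; XLMiner's internals may differ, and all function names are hypothetical.

```python
import math
from statistics import mean, pstdev

def zscore_params(rows):
    """Column means and standard deviations used to normalize each input variable."""
    cols = list(zip(*rows))
    # pstdev (population SD) is an assumption; a constant column falls back to 1.0
    return [mean(c) for c in cols], [pstdev(c) or 1.0 for c in cols]

def normalize(row, means, sds):
    return [(v - m) / s for v, m, s in zip(row, means, sds)]

def knn_probability(train_x, train_y, x, k):
    """Share of the k nearest training records (Euclidean distance) that are Class 1."""
    nearest = sorted(range(len(train_x)), key=lambda i: math.dist(train_x[i], x))[:k]
    return sum(train_y[i] for i in nearest) / k

def error_summary(actual, predicted):
    """Overall, Class 1, and Class 0 error rates plus sensitivity and specificity.
    Assumes both classes appear in `actual`."""
    tp = sum(a == 1 and p == 1 for a, p in zip(actual, predicted))
    fn = sum(a == 1 and p == 0 for a, p in zip(actual, predicted))
    tn = sum(a == 0 and p == 0 for a, p in zip(actual, predicted))
    fp = sum(a == 0 and p == 1 for a, p in zip(actual, predicted))
    return {
        "overall_error": (fn + fp) / len(actual),
        "class1_error": fn / (tp + fn),
        "class0_error": fp / (tn + fp),
        "sensitivity": tp / (tp + fn),  # = 1 - Class 1 error rate
        "specificity": tn / (tn + fp),  # = 1 - Class 0 error rate
    }
```

A record is predicted as a defaulter when `knn_probability(...) >= 0.5`; the resulting 0/1 predictions feed `error_summary`.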


47

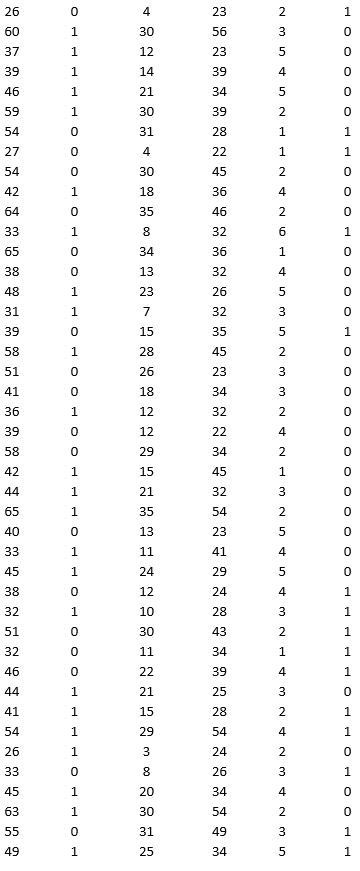

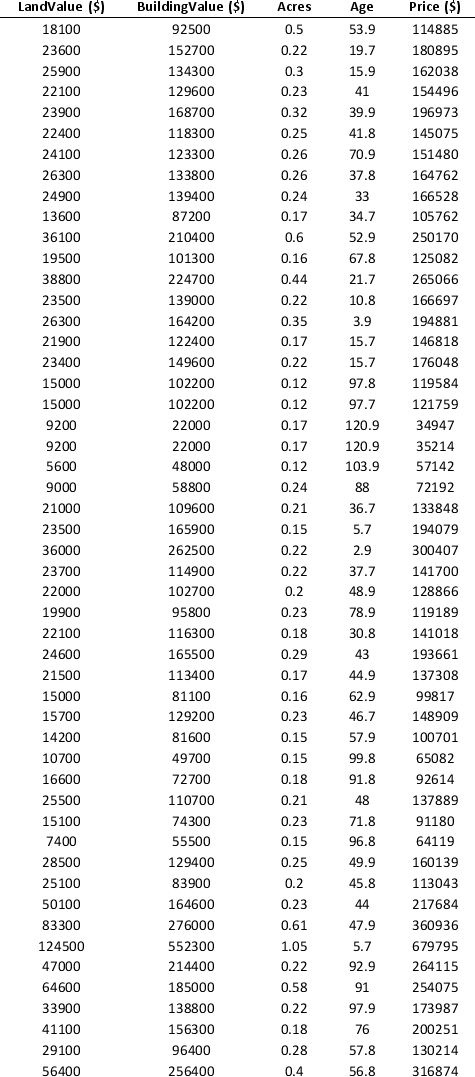

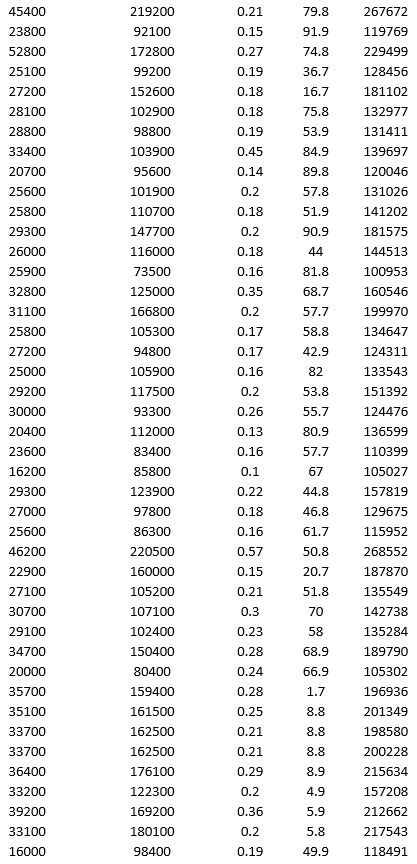

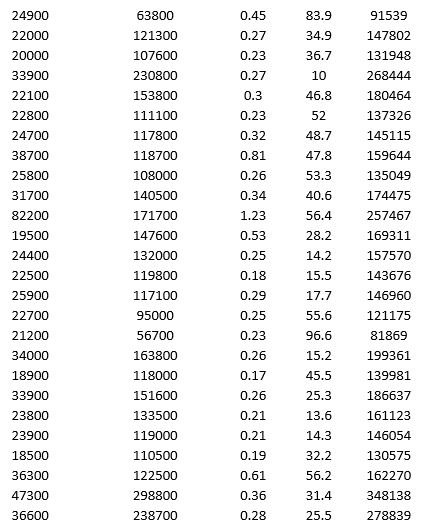

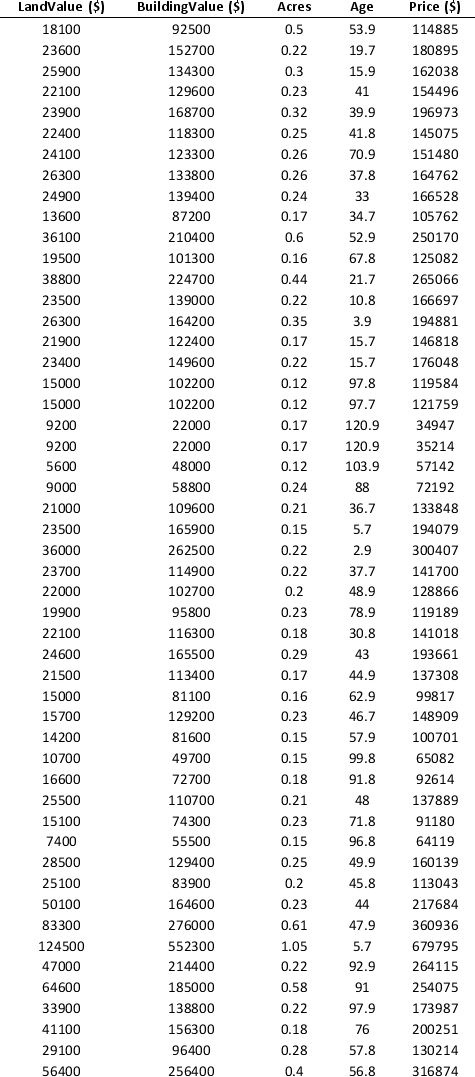

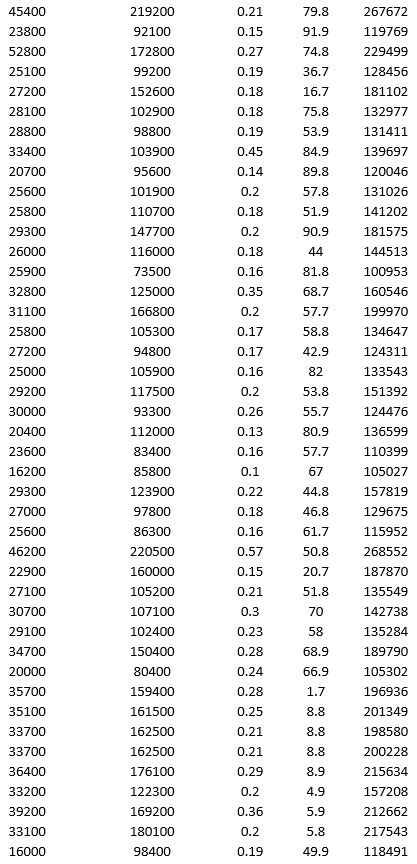

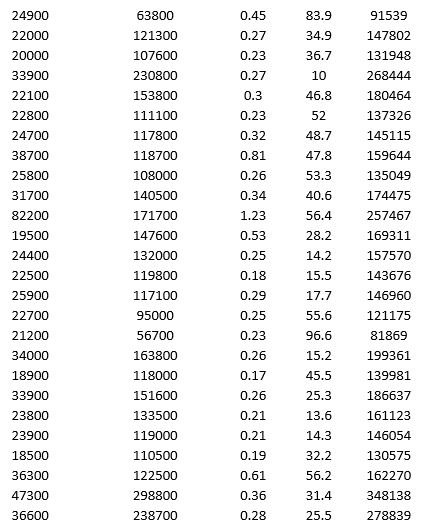

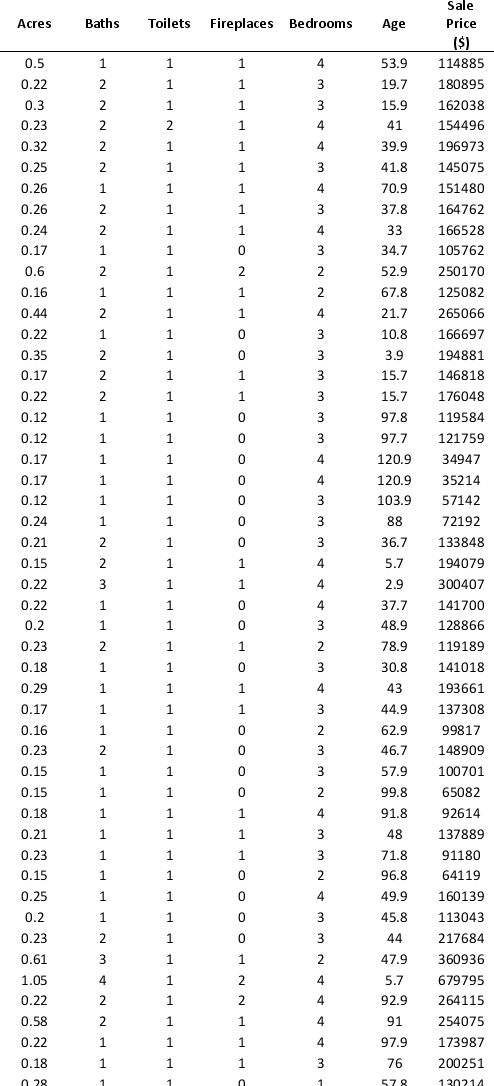

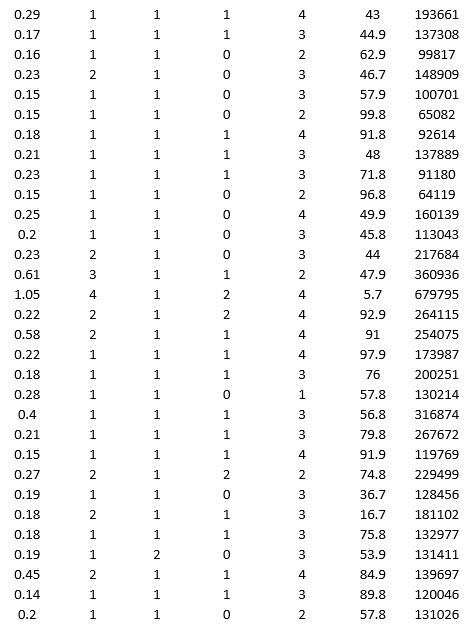

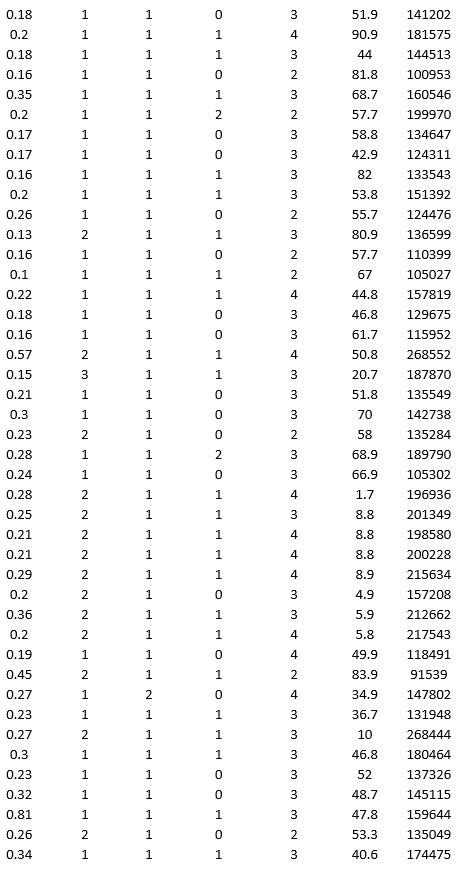

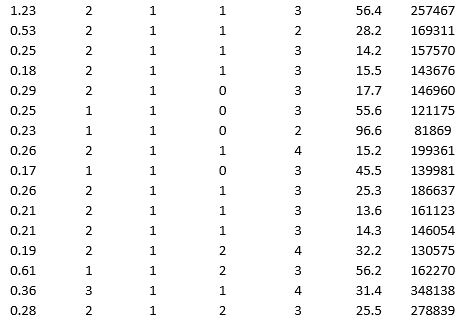

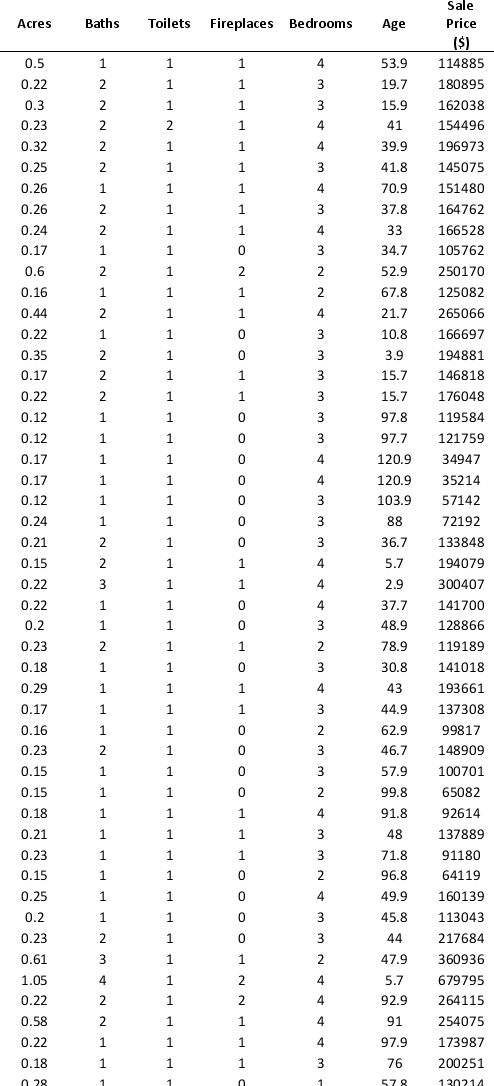

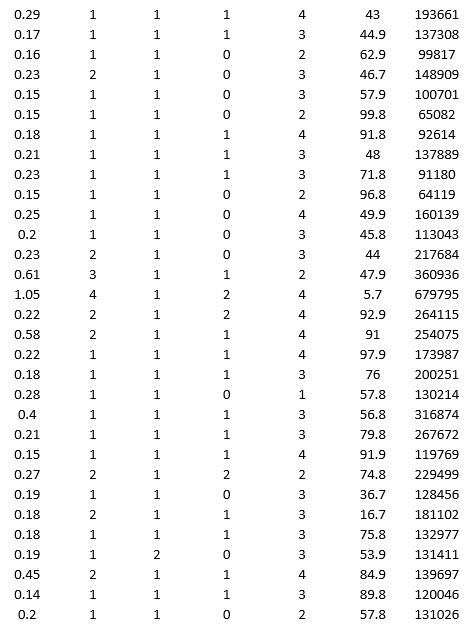

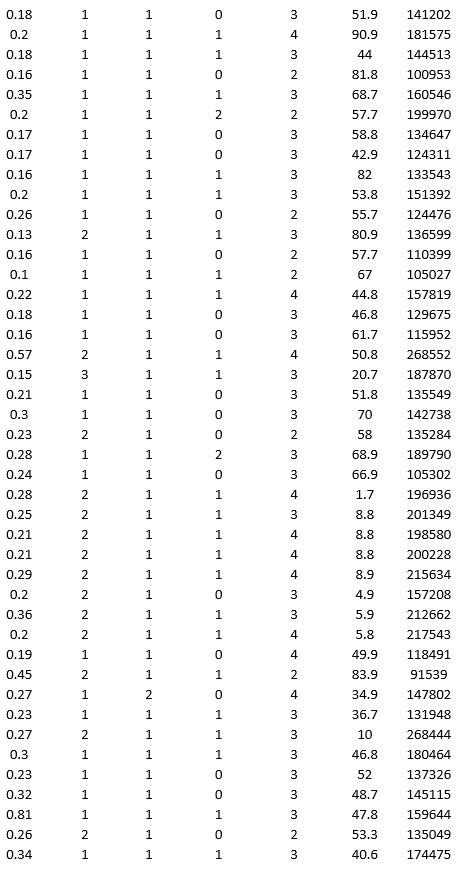

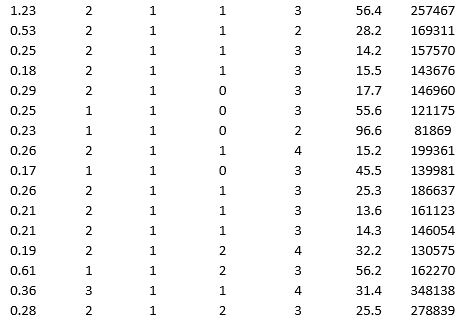

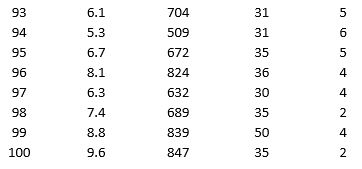

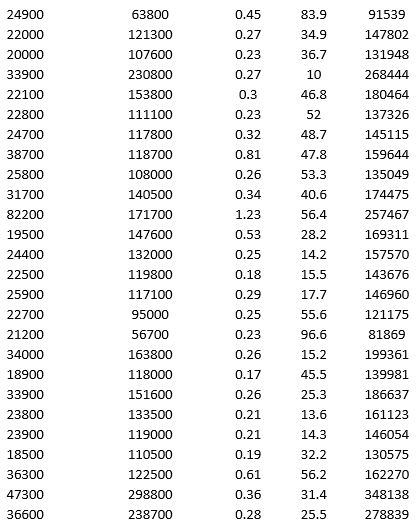

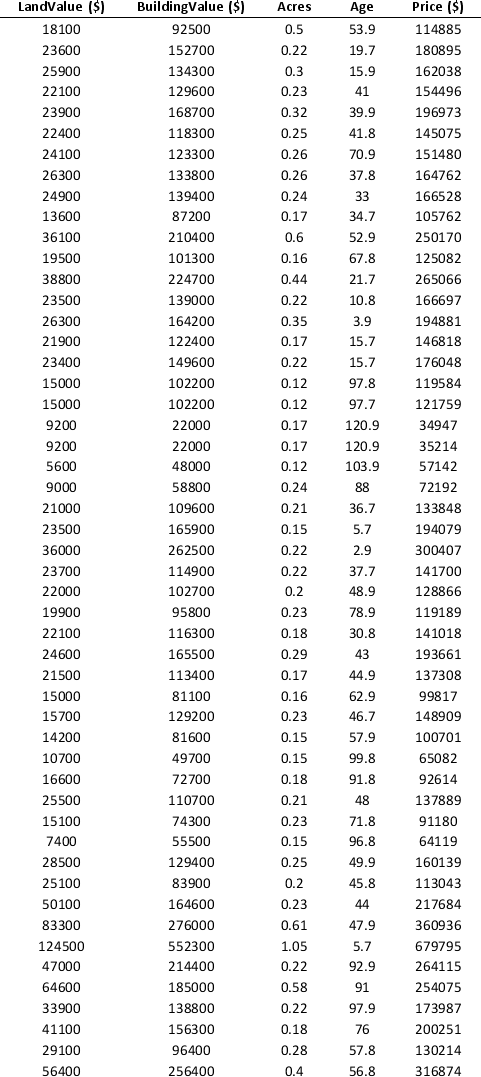

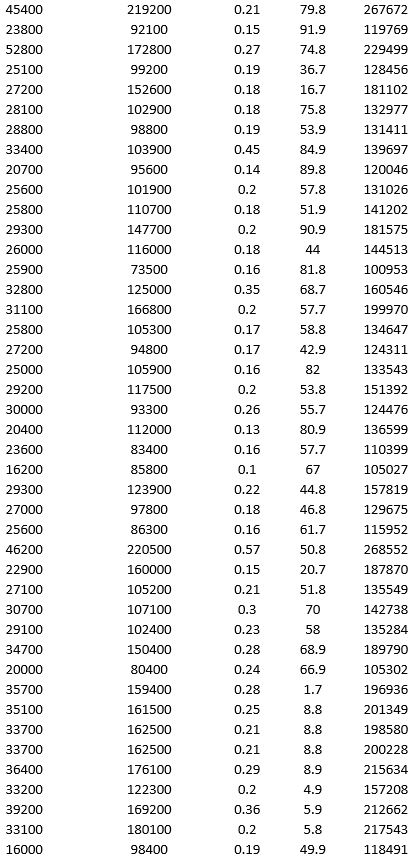

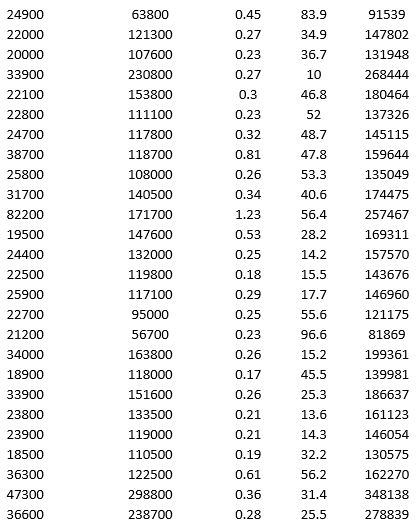

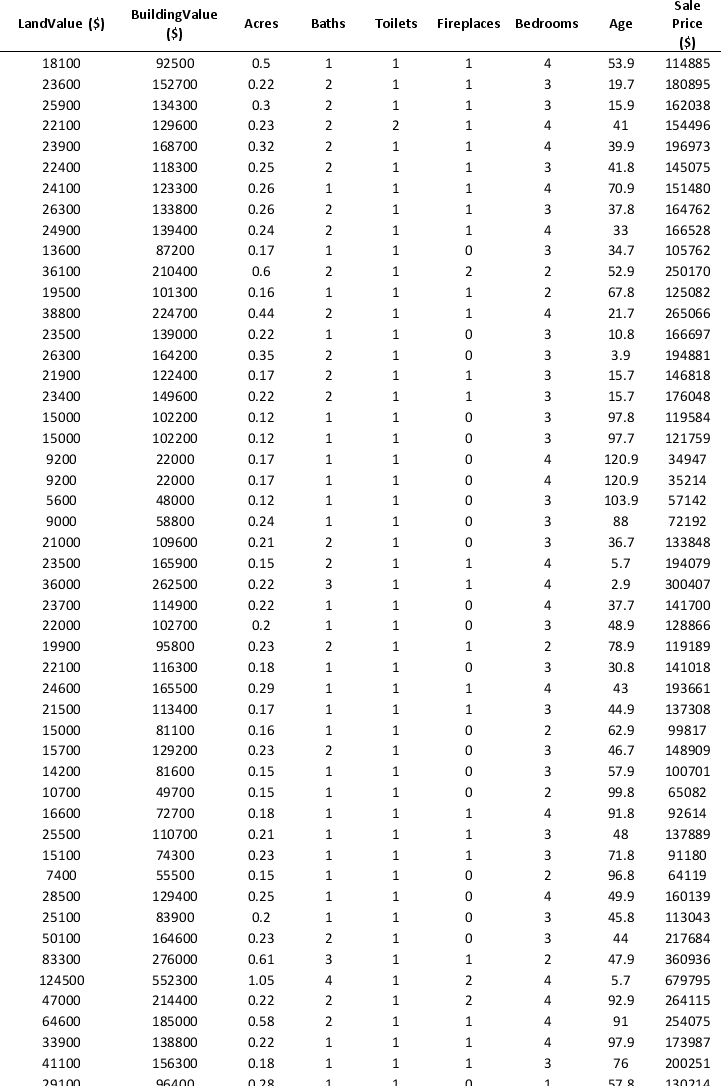

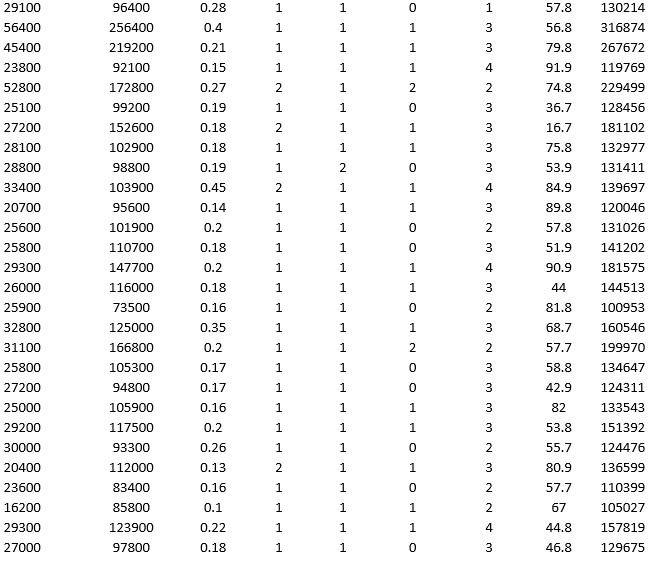

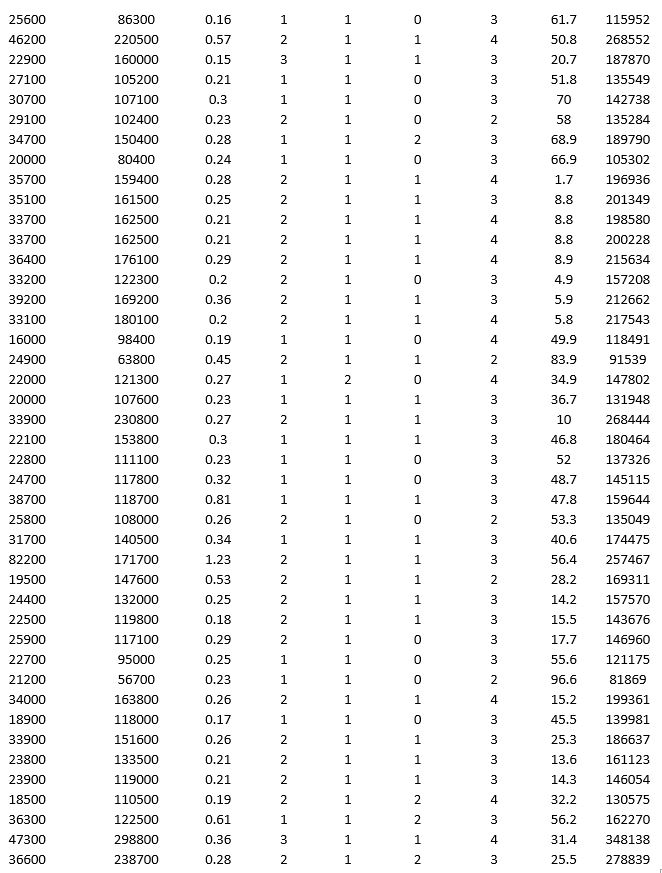

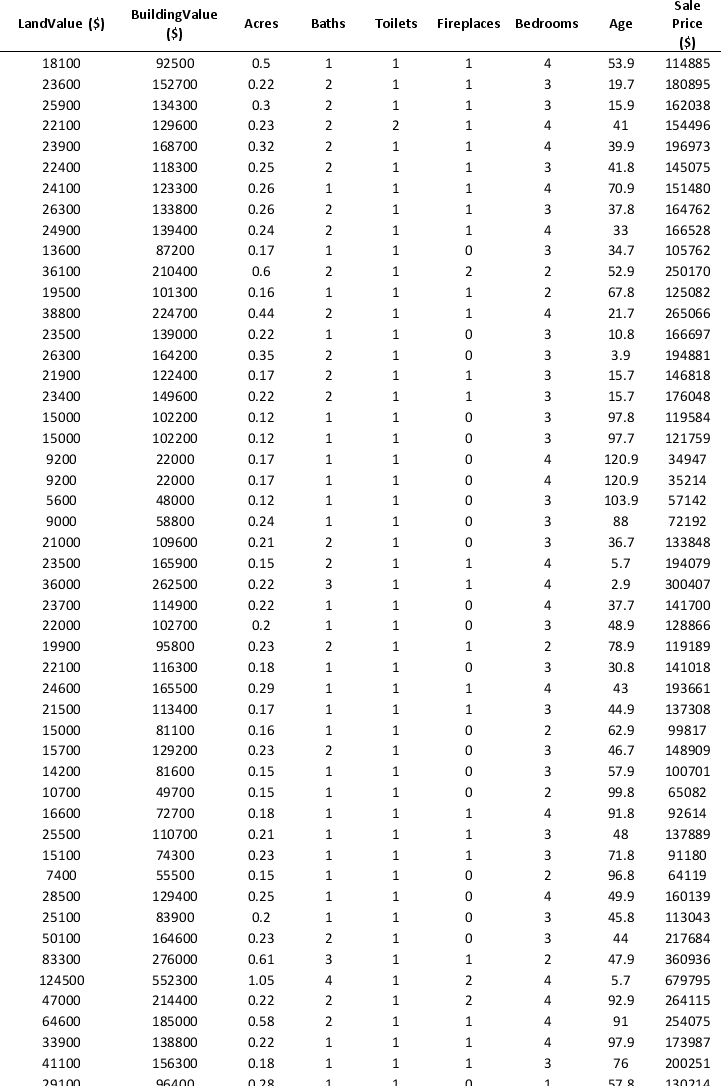

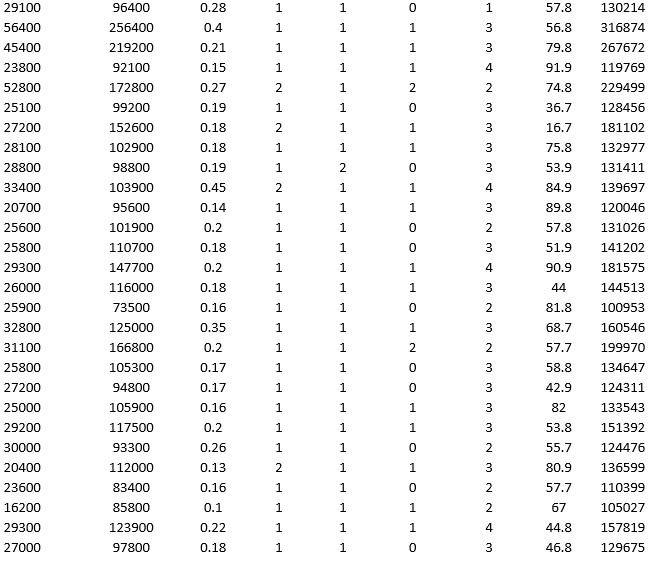

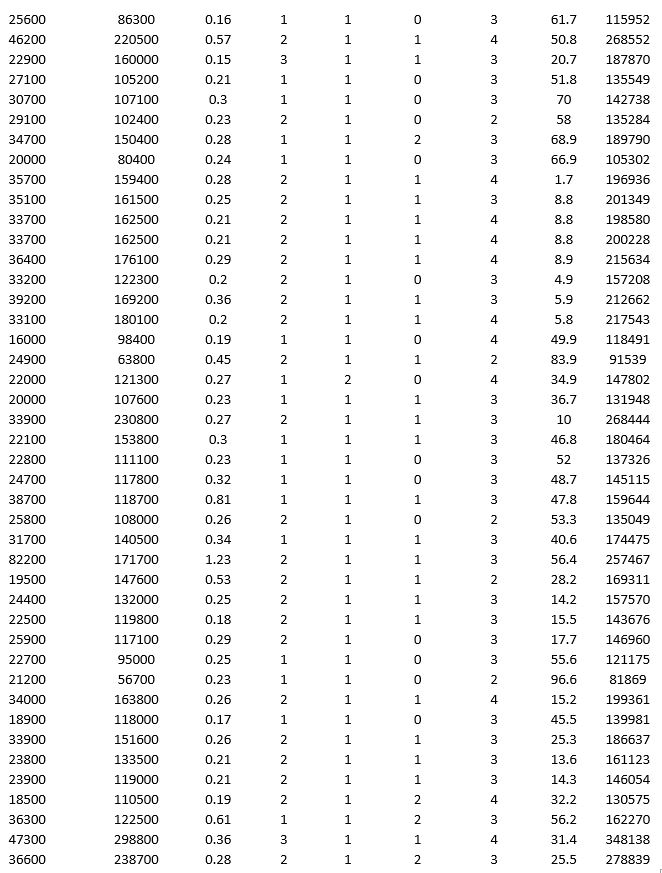

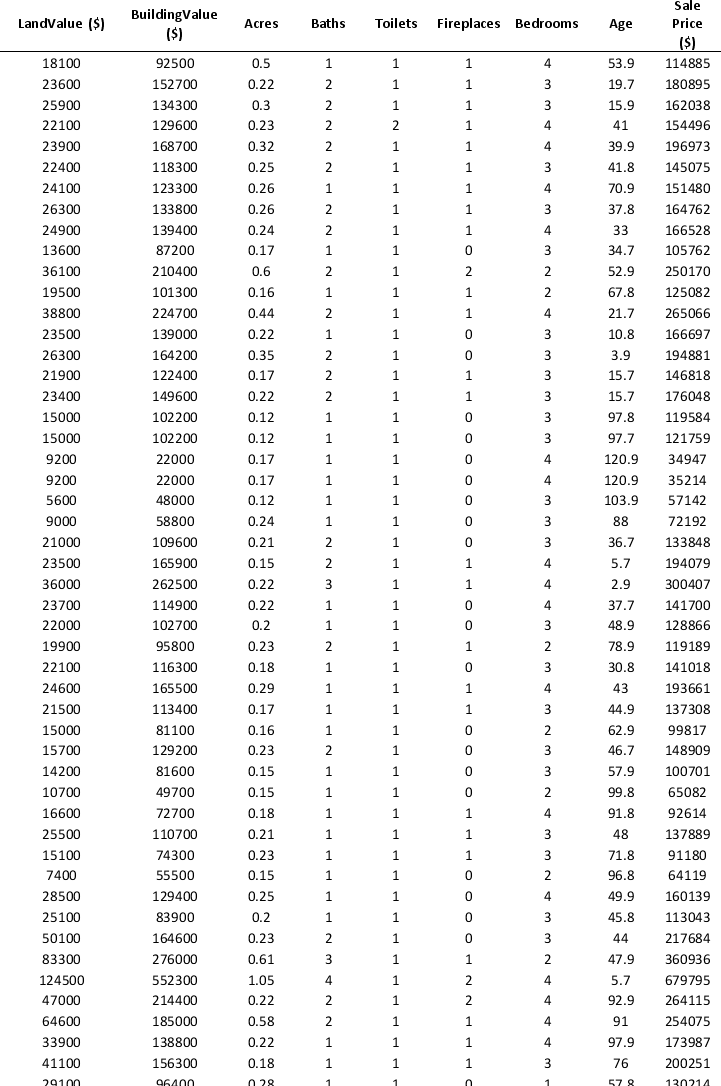

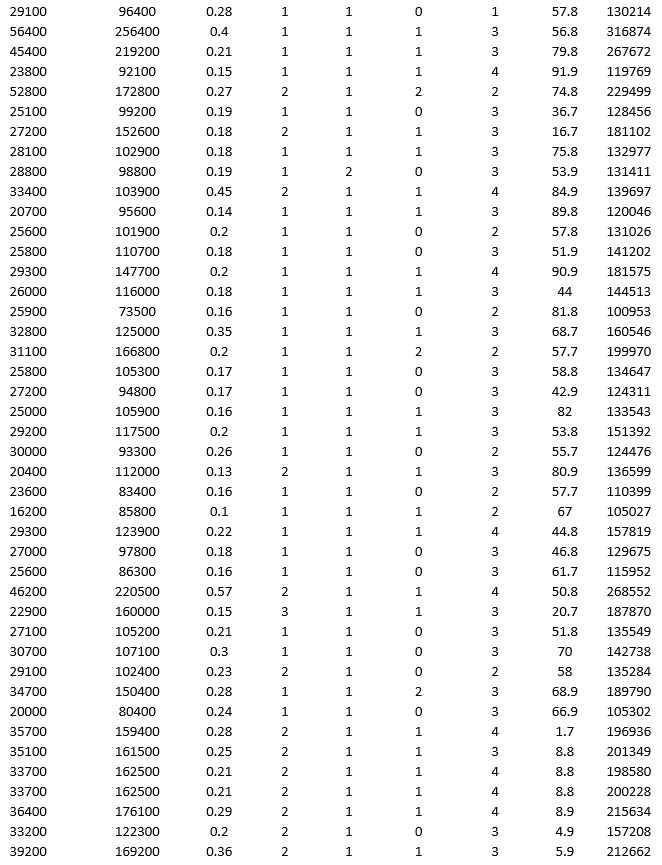

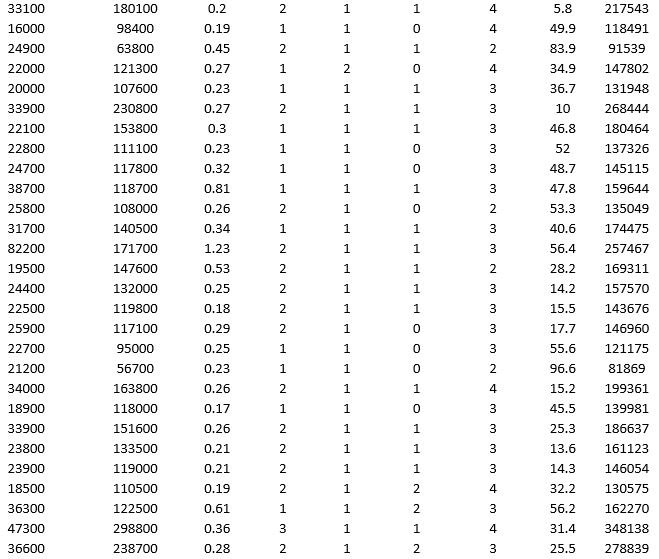

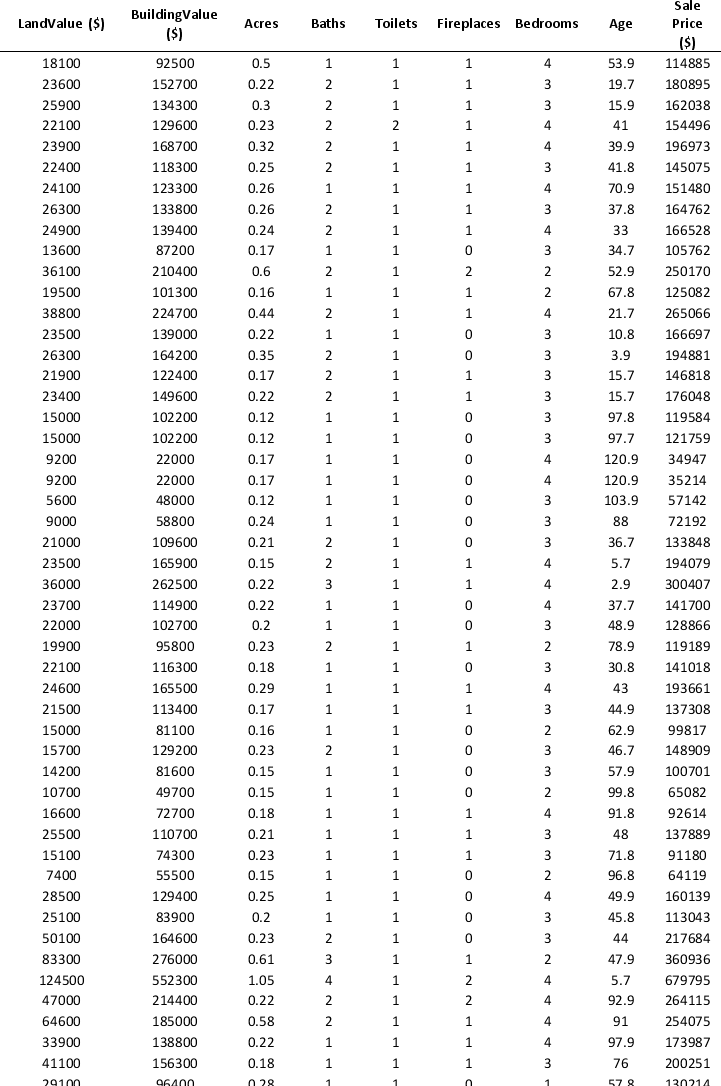

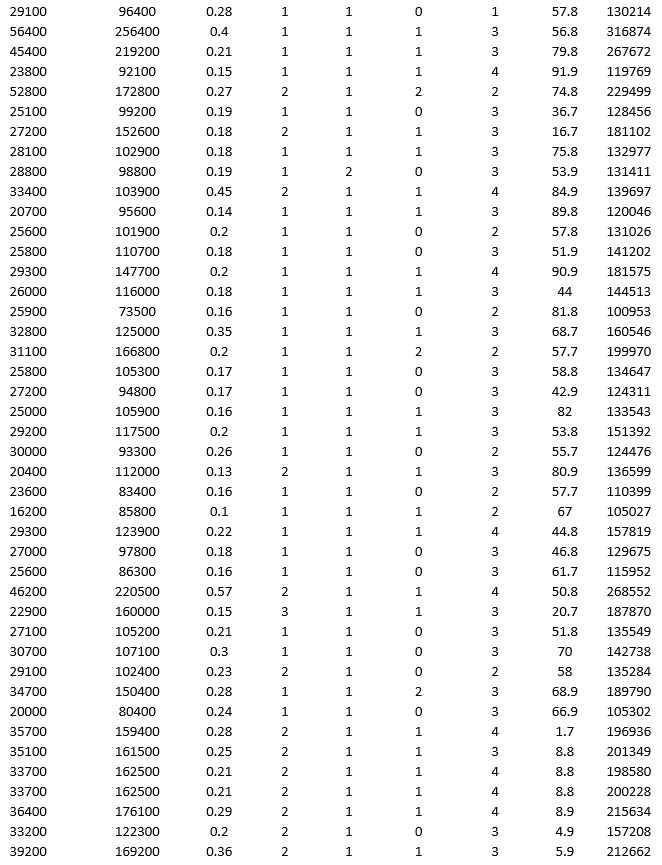

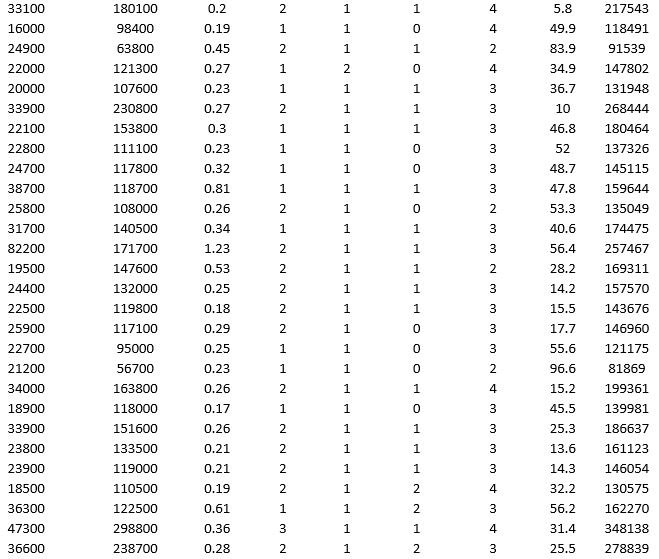

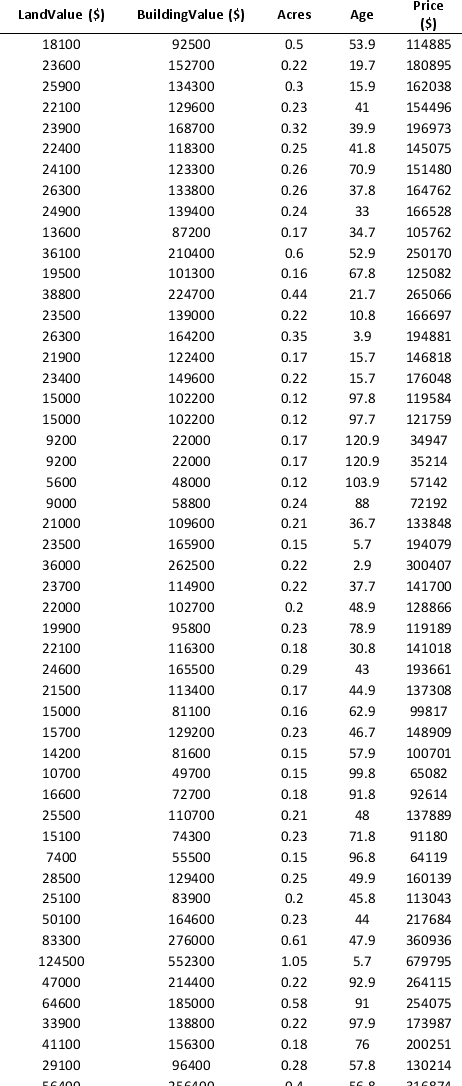

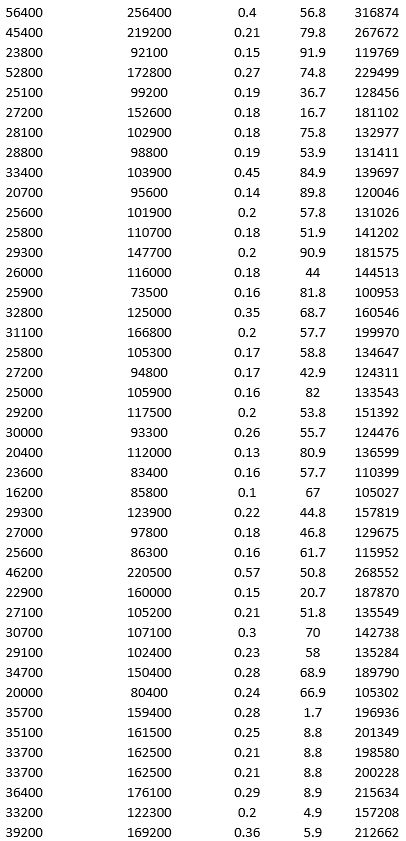

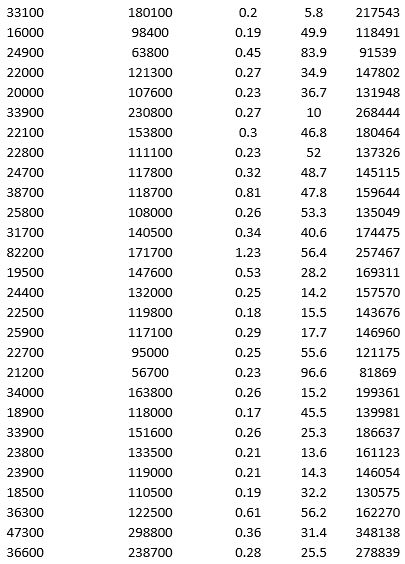

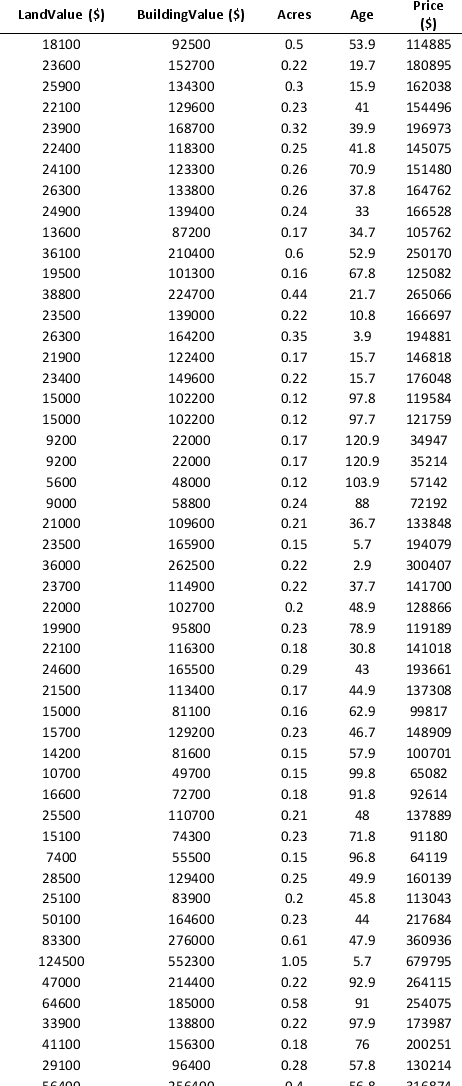

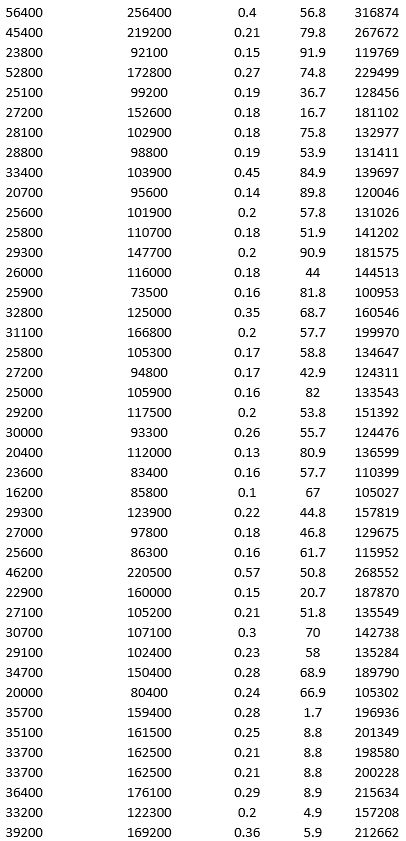

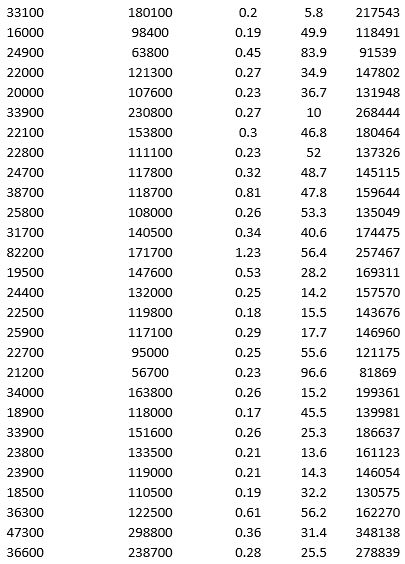

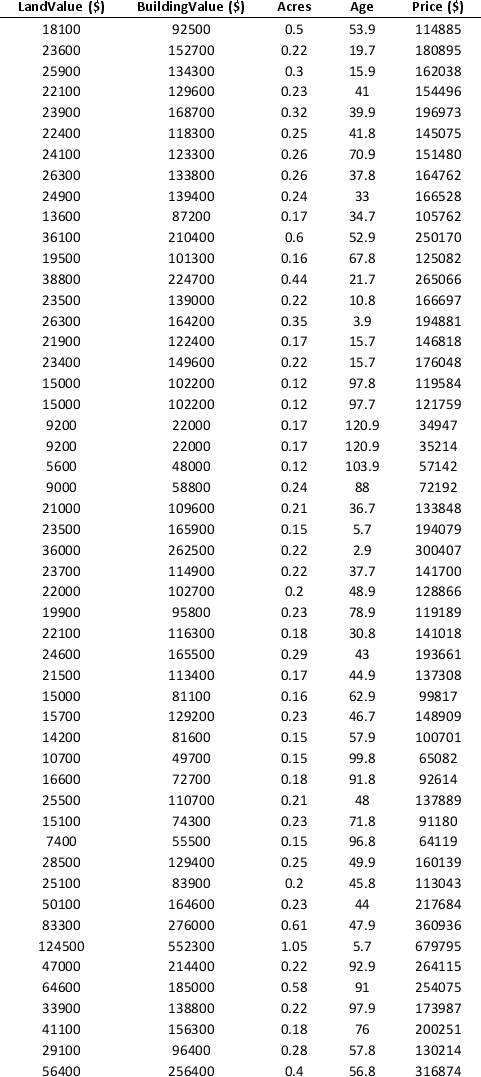

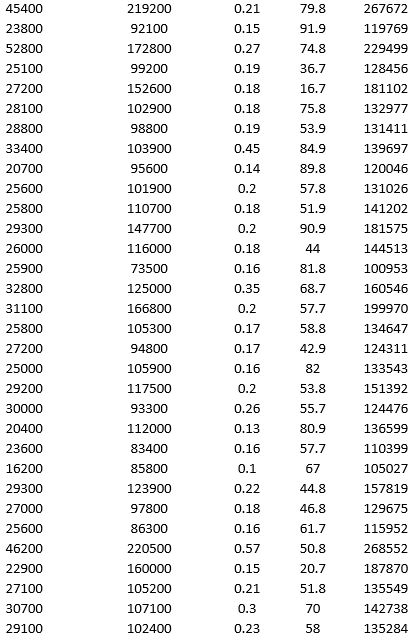

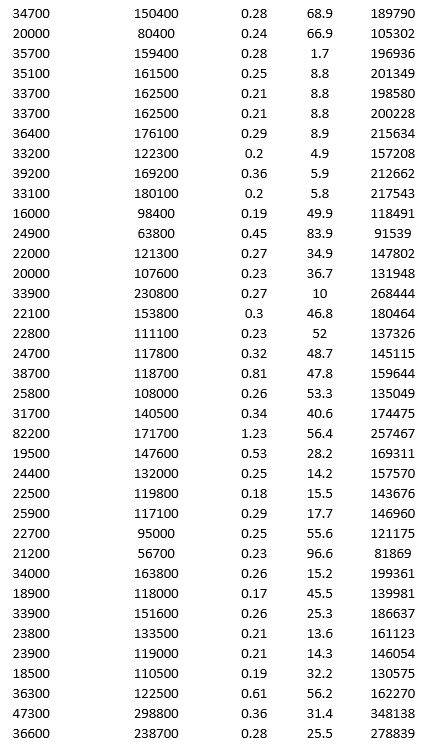

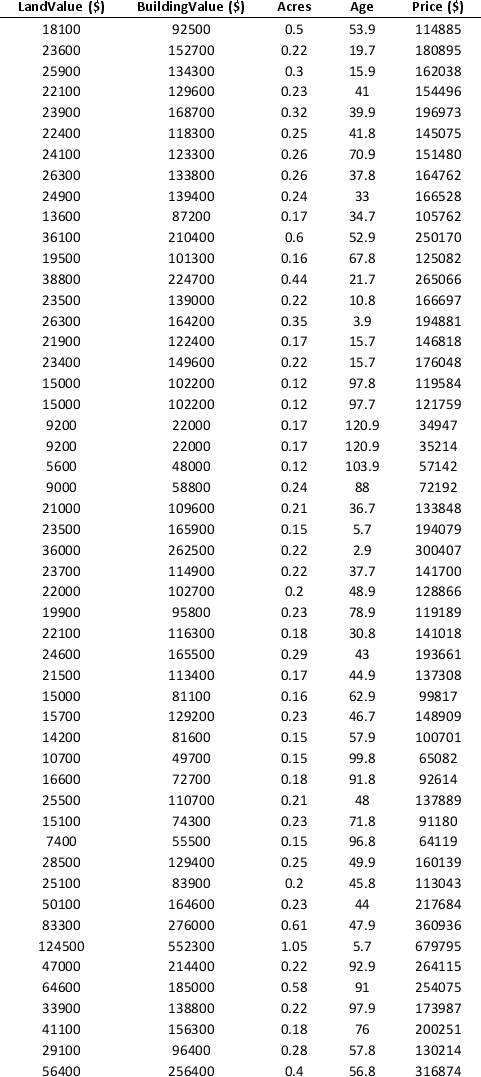

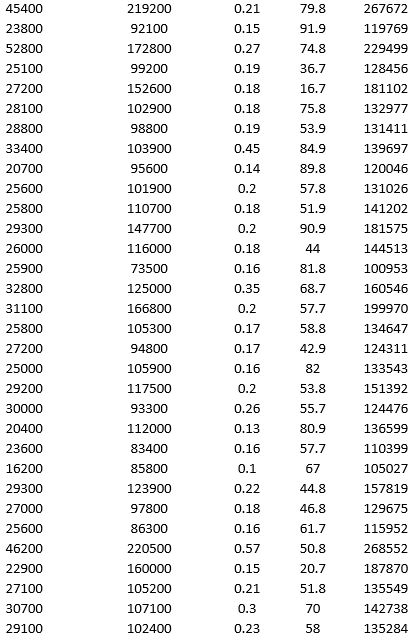

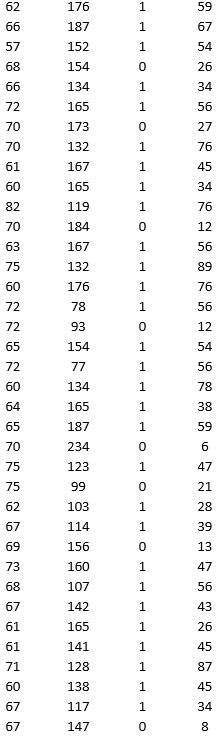

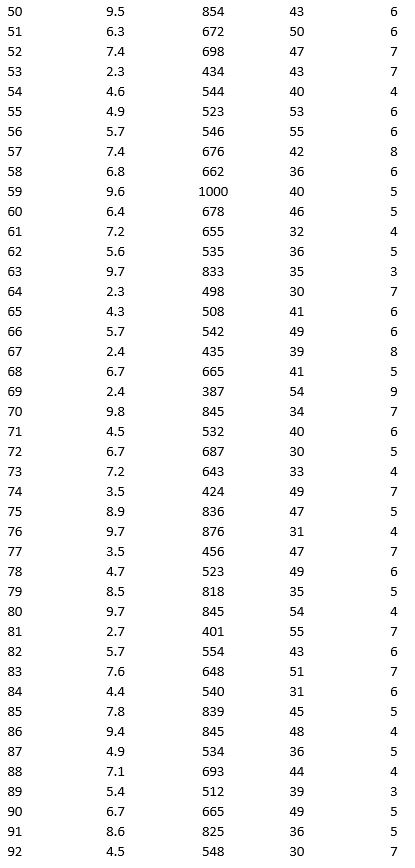

To examine the local housing market in a particular region, a sample of 120 homes sold during a year is collected. The data are given below.

Apply k-means clustering with k = 10 using LandValue ($), BuildingValue ($), Acres, Age, and Price ($) as variables. Be sure to Normalize input data, and specify 50 iterations and 10 random starts in Step 2 of the XLMiner k-Means Clustering procedure. What is the smallest cluster? What is the least dense cluster (as measured by the average distance in the cluster)?
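k-means repeatedly assigns each record to its nearest cluster center and moves each center to the mean of its records; several random starts guard against a poor local solution. The sketch below runs Lloyd's algorithm for a fixed number of iterations per start, keeps the start with the smallest within-cluster sum of squares, and then reports each cluster's size and average distance to its center (a larger average distance means a less dense cluster). It assumes the inputs are already normalized; the names, the default seed, and the best-SSE selection rule are illustrative assumptions rather than XLMiner's documented behavior.

```python
import math
import random

def kmeans(points, k, iterations=50, starts=10, seed=0):
    """Lloyd's k-means with several random starts; keeps the lowest-SSE solution."""
    rng = random.Random(seed)

    def assign(centers):
        return [min(range(k), key=lambda j: math.dist(p, centers[j])) for p in points]

    best = None
    for _ in range(starts):
        centers = [list(p) for p in rng.sample(points, k)]
        for _ in range(iterations):
            labels = assign(centers)
            for j in range(k):
                members = [p for p, lab in zip(points, labels) if lab == j]
                if members:  # an empty cluster keeps its previous center
                    centers[j] = [sum(col) / len(members) for col in zip(*members)]
        labels = assign(centers)
        sse = sum(math.dist(p, centers[lab]) ** 2 for p, lab in zip(points, labels))
        if best is None or sse < best[0]:
            best = (sse, labels, centers)
    return best[1], best[2]

def cluster_summary(points, labels, centers):
    """Map cluster index -> (size, average distance to the final center)."""
    summary = {}
    for j, c in enumerate(centers):
        members = [p for p, lab in zip(points, labels) if lab == j]
        avg = sum(math.dist(p, c) for p in members) / len(members) if members else 0.0
        summary[j] = (len(members), avg)
    return summary
```

The smallest cluster has the smallest size in the summary, and the least dense cluster has the largest average distance.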


48

To examine the local housing market in a particular region, a sample of 120 homes sold during a year is collected. The data are given below:

Partition the data into training (50 percent), validation (30 percent), and test (20 percent) sets. Predict the sale price using multiple linear regression. Use Sale Price as the output variable and all the other variables as input variables. To generate a pool of models to consider, execute the following steps. In Step 2 of XLMiner's Multiple Linear Regression procedure, click the Best subset option. In the Best Subset dialog box, check the box next to Perform best subset selection, enter 6 in the box next to Maximum size of best subset, enter 1 in the box next to Number of best subsets, and check the box next to Exhaustive search.

a. From the generated set of multiple linear regression models, select one that you believe is a good fit. Express the model as a mathematical equation relating the output variable to the input variables.

b. For your model, what is the RMSE on the validation data and test data?

c. What is the average error on the validation data and test data? What does this suggest?
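RMSE measures the typical size of the prediction errors, while the average error shows whether they lean in one direction. The sketch below assumes error = actual - predicted, so a positive average error means the model under-predicts Sale Price on average and a value near zero suggests little systematic bias; check XLMiner's output for its own sign convention. The function names are hypothetical.

```python
import math

def rmse(actual, predicted):
    """Root mean squared error: sqrt(mean((actual - predicted)^2))."""
    return math.sqrt(sum((a - p) ** 2 for a, p in zip(actual, predicted)) / len(actual))

def average_error(actual, predicted):
    """Mean of (actual - predicted); positive means under-prediction on average."""
    return sum(a - p for a, p in zip(actual, predicted)) / len(actual)
```

Comparing both measures on the validation and test sets indicates whether the model's accuracy and bias carry over to data it was not fitted on.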


49

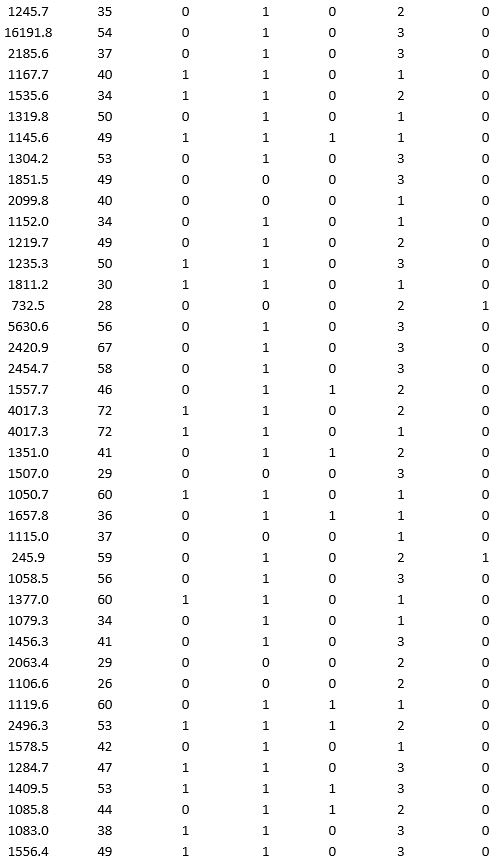

As part of the quarterly reviews, the manager of a retail store analyzes the quality of customer service based on the periodic customer satisfaction ratings (on a scale of 1 to 10 with 1 = Poor and 10 = Excellent). To understand the level of service quality, which includes the waiting times of the customers in the checkout section, he collected the following data on 100 customers who visited the store.

a. Apply hierarchical clustering with 5 clusters using Wait Time (min) and Customer Satisfaction Rating as variables. Be sure to Normalize input data in Step 2 of the XLMiner Hierarchical Clustering procedure, and specify single linkage as the clustering method. Analyze the resulting clusters by computing the cluster size. It may be helpful to use a PivotTable on the data in the HC_Clusters worksheet generated by XLMiner to compute descriptive measures of the Wait Time and Customer Satisfaction Rating variables in each cluster. You can also visualize the clusters by creating a scatter plot with Wait Time (min) as the x-variable and Customer Satisfaction Rating as the y-variable.

b. Repeat part a using average linkage as the clustering method. Compare the clusters to the previous method.
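Single linkage uses the closest pair of observations across two clusters, while average linkage averages all pairwise distances between them. The sketch below computes both for small clusters of (normalized) points; Euclidean distance and the function names are assumptions. Single linkage tends to chain clusters together through nearby points, whereas average linkage tends to give more compact clusters, which is one reason the runs in parts a and b can differ.

```python
import math
from itertools import product

def single_linkage(a, b):
    """Distance between the two closest observations, one from each cluster."""
    return min(math.dist(x, y) for x, y in product(a, b))

def average_linkage(a, b):
    """Average of all pairwise distances between observations in the two clusters."""
    return sum(math.dist(x, y) for x, y in product(a, b)) / (len(a) * len(b))
```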


50

To examine the local housing market in a particular region, a sample of 120 homes sold during a year is collected. The data are given below.

a. Apply hierarchical clustering with 10 clusters using LandValue ($), BuildingValue ($), Acres, Age, and Price ($) as variables. Be sure to Normalize input data in Step 2 of the XLMiner Hierarchical Clustering procedure, and specify complete linkage as the clustering method. Analyze the resulting clusters by computing the cluster size. It may be helpful to use a PivotTable on the data in the HC_Clusters worksheet generated by XLMiner. You can also visualize the clusters by creating a scatter plot with Acres as the x-variable and Price ($) as the y-variable.

b. Repeat part a using average group linkage as the clustering method. Compare the clusters to the previous method.
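Complete linkage uses the two most dissimilar observations across the clusters. For average group linkage, the sketch below assumes the distance between the two cluster centroids (the averaged observations), which is how the term is commonly described; confirm against the XLMiner documentation. Euclidean distance and the function names are also assumptions.

```python
import math
from itertools import product

def complete_linkage(a, b):
    """Distance between the two most dissimilar observations, one from each cluster."""
    return max(math.dist(x, y) for x, y in product(a, b))

def centroid(cluster):
    """Average of each variable over the observations in the cluster."""
    return [sum(col) / len(cluster) for col in zip(*cluster)]

def centroid_distance(a, b):
    """Distance between cluster centroids (assumed reading of average group linkage)."""
    return math.dist(centroid(a), centroid(b))
```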


51

As part of the quarterly reviews, the manager of a retail store analyzes the quality of customer service based on the periodic customer satisfaction ratings (on a scale of 1 to 10 with 1 = Poor and 10 = Excellent). To understand the level of service quality, which includes the waiting times of the customers in the checkout section, he collected the following data on 100 customers who visited the store.

Apply hierarchical clustering with 5 clusters using Wait Time (min), Purchase Amount ($), Customer Age, and Customer Satisfaction Rating as variables. Be sure to Normalize input data in Step 2 of the XLMiner Hierarchical Clustering procedure. Use Ward's method as the clustering method.

a. Use a PivotTable on the data in the HC_Clusters1 worksheet to compute the cluster centers for the five clusters in the hierarchical clustering.

b. Identify the cluster with the largest average waiting time. Using all the variables, how would you characterize this cluster?

c. Identify the smallest cluster.

d. By examining the dendrogram on the HC_Dendrogram worksheet (as well as the sequence of clustering stages in HC_Output1), what number of clusters seems to be the most natural fit based on the distance?
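Ward's method merges, at each stage, the pair of clusters whose merger produces the smallest increase in the total within-cluster sum of squared distances; that increase equals (n_a n_b / (n_a + n_b)) times the squared distance between the two centroids. The sketch below computes the increase directly and adds a common rule of thumb for part d: cut the dendrogram just below the largest jump in merge distance. Function names are hypothetical, and XLMiner may report merge distances on a different scale.

```python
import math

def sse(cluster):
    """Sum of squared Euclidean distances from each point to the cluster centroid."""
    c = [sum(col) / len(cluster) for col in zip(*cluster)]
    return sum(math.dist(p, c) ** 2 for p in cluster)

def ward_merge_cost(a, b):
    """Increase in total within-cluster SSE if clusters a and b are merged."""
    return sse(a + b) - sse(a) - sse(b)

def clusters_at_largest_gap(merge_heights):
    """Rule of thumb: stop merging just before the biggest jump in merge distance.
    merge_heights[i] is the distance at stage i + 1 (n - 1 stages for n records)."""
    gaps = [merge_heights[i + 1] - merge_heights[i] for i in range(len(merge_heights) - 1)]
    i = max(range(len(gaps)), key=gaps.__getitem__)
    return len(merge_heights) - i
```

For example, merge distances of 1, 1.2, 1.5, 5, and 5.5 for six records jump sharply at the fourth stage, which suggests three clusters.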


52

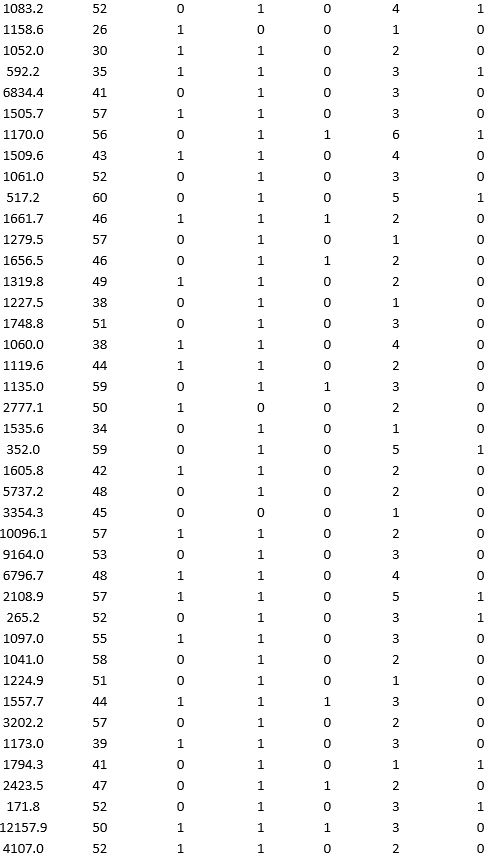

To examine the local housing market in a particular region, a sample of 120 homes sold during a year is collected. The data are given below:

Partition the data into training (50 percent), validation (30 percent), and test (20 percent) sets. Predict the sale price using a regression tree. Use Sale Price as the output variable and all the other variables as input variables. In Step 2 of XLMiner's Regression Tree procedure, be sure to Normalize input data, to set the Maximum #splits for input variables to 59, to set the Minimum #records in a terminal node to 1, and specify Using Best prune tree as the scoring option. In Step 3 of XLMiner's Regression Tree procedure, set the maximum number of levels to 7. Generate the Full tree and Best pruned tree.

a. In terms of number of decision nodes, compare the size of the full tree to the size of the best pruned tree.

b. What is the root mean squared error (RMSE) of the best pruned tree on the validation data and on the test data?

c. What is the average error on the validation data and test data? What does this suggest?

d. By examining the best pruned tree, what are the critical variables in predicting the sale price of a home?
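A regression tree grows by repeatedly choosing the split that most reduces the sum of squared errors (SSE) of the output around each child node's mean; each leaf then predicts the mean Sale Price of its training records. The sketch below searches all midpoints of one input variable for that best split. It shows a single step of tree growing, not XLMiner's full procedure (which also prunes using the validation data), and the function name is hypothetical.

```python
def best_split(xs, ys):
    """Return (threshold, total SSE) of the split x <= threshold that minimizes
    the summed squared deviation of y around each child node's mean."""
    def sse(vals):
        m = sum(vals) / len(vals)
        return sum((v - m) ** 2 for v in vals)

    pairs = sorted(zip(xs, ys))
    best = None
    for i in range(1, len(pairs)):
        if pairs[i - 1][0] == pairs[i][0]:
            continue  # no threshold can separate equal x values
        threshold = (pairs[i - 1][0] + pairs[i][0]) / 2
        left = [y for _, y in pairs[:i]]
        right = [y for _, y in pairs[i:]]
        total = sse(left) + sse(right)
        if best is None or total < best[1]:
            best = (threshold, total)
    return best
```

Variables that appear in splits near the top of the pruned tree are the ones that reduce SSE the most.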


53

To examine the local housing market in a particular region, a sample of 120 homes sold during a year is collected. The data are given below:

Partition the data into training (50 percent), validation (30 percent), and test (20 percent) sets. Predict the sale price using k-nearest neighbors with up to k = 10. Use Sale Price as the output variable and all the other variables as input variables. In Step 2 of XLMiner's k-Nearest Neighbors Prediction procedure, be sure to Normalize input data and to Score on best k between 1 and specified value. Generate a Detailed Scoring report for all three sets of data.

a. What value of k minimizes the root mean squared error (RMSE) on the validation data?

b. What is the RMSE on the validation data and test data?

c. What is the average error on the validation data and test data? What does this suggest?
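k-nearest neighbors prediction estimates a record's Sale Price as the average Sale Price of its k closest training records, and the best k is the one with the lowest RMSE on the validation data. The sketch below assumes already-normalized inputs, Euclidean distance, and an unweighted average, and breaks RMSE ties in favor of the smaller k; these choices and the function names are assumptions.

```python
import math

def knn_predict(train_x, train_y, x, k):
    """Average output of the k nearest training records."""
    nearest = sorted(range(len(train_x)), key=lambda i: math.dist(train_x[i], x))[:k]
    return sum(train_y[i] for i in nearest) / k

def best_k(train_x, train_y, valid_x, valid_y, max_k):
    """k in 1..max_k with the smallest validation RMSE (max_k <= len(train_x))."""
    def rmse_for(k):
        errs = [(y - knn_predict(train_x, train_y, x, k)) ** 2 for x, y in zip(valid_x, valid_y)]
        return math.sqrt(sum(errs) / len(errs))
    return min(range(1, max_k + 1), key=rmse_for)
```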


54

To examine the local housing market in a particular region, a sample of 120 homes sold during a year is collected. The data are given below.

For these data, apply k-means clustering using Price ($) as the variable with k = 3. Be sure to Normalize input data, and specify 50 iterations and 10 random starts in Step 2 of the XLMiner k-Means Clustering procedure. Then create a separate data set for each of the three resulting price clusters.

a. For the observations composing the cluster with low home price, apply hierarchical clustering with Ward's method to form three clusters using Acres and Age as variables. Be sure to Normalize input data in Step 2 of the XLMiner Hierarchical Clustering procedure. Using a PivotTable on the data in HC_Clusters1, report the characteristics of each cluster.

b. For the observations composing the cluster with medium home price, apply hierarchical clustering with Ward's method to form three clusters using Acres and Age as variables. Be sure to Normalize input data in Step 2 of the XLMiner Hierarchical Clustering procedure. Using a PivotTable on the data in HC_Clusters1, report the characteristics of each cluster.

c. Comment on the cluster with high home price.
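After the k-means step, each home carries a cluster label. Creating one data set per cluster is a group-by on that label, and ranking the clusters by mean price identifies the low, medium, and high price groups. The sketch below uses plain dictionaries with a hypothetical `Price` field; the function names are also hypothetical.

```python
from collections import defaultdict

def split_by_cluster(records, labels):
    """One list of records per cluster label (one data set per cluster)."""
    groups = defaultdict(list)
    for record, label in zip(records, labels):
        groups[label].append(record)
    return dict(groups)

def order_by_mean(groups, field):
    """Cluster labels sorted from lowest to highest mean of `field`."""
    return sorted(groups, key=lambda g: sum(r[field] for r in groups[g]) / len(groups[g]))
```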


55

A bank is interested in identifying different attributes of its customers. Sample data for 150 customers are given below. For the dummy variable Gender, 0 represents Male and 1 represents Female. For the dummy variable Personal loan, 0 represents a customer who has not taken a personal loan and 1 represents a customer who has taken a personal loan.

Partition the data into training (50 percent), validation (30 percent), and test (20 percent) sets. Fit a classification tree using Age, Gender, Work experience, Income (in 1000 $), and Family size as input variables and Personal loan as the output variable. In Step 2 of XLMiner's Classification Tree procedure, be sure to Normalize input data and to set the Minimum #records in a terminal node to 1. In Step 3 of XLMiner's Classification Tree procedure, set the maximum number of levels to seven. Generate the Full tree, Best pruned tree, and Minimum error tree. Generate lift charts for both the validation data and the test data.

a. Interpret the set of rules implied by the best pruned tree that characterize the customers who have taken a personal loan.

b. For the default cutoff value of 0.5, what are the overall error rate, Class 1 error rate, and Class 0 error rate of the best pruned tree on the test data? Interpret these respective measures.

c. Examine the decile-wise lift chart for the best pruned tree on the test data. What is the first decile lift? Interpret this value.
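The first decile lift compares the share of Class 1 records among the 10 percent of test records with the highest predicted probabilities to the overall share of Class 1 records. A lift of 3, for example, means the top decile contains three times as many loan takers as a random 10 percent sample would. The sketch below assumes the decile size is n/10 rounded to the nearest whole record (at least one), that ties in probability keep their original order, and that at least one Class 1 record is present; the function name is hypothetical.

```python
def first_decile_lift(probs, actual):
    """Class 1 rate in the top 10% of records (by predicted probability)
    divided by the Class 1 rate in all records."""
    n = len(actual)
    ranked = sorted(range(n), key=lambda i: probs[i], reverse=True)
    top = ranked[:max(1, round(n / 10))]
    top_rate = sum(actual[i] for i in top) / len(top)
    overall_rate = sum(actual) / n
    return top_rate / overall_rate
```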


56

A bank is interested in identifying different attributes of its customers. Sample data for 150 customers are given below. For the dummy variable Gender, 0 represents Male and 1 represents Female. For the dummy variable Personal loan, 0 represents a customer who has not taken a personal loan and 1 represents a customer who has taken a personal loan.

Partition the data into training (50 percent), validation (30 percent), and test (20 percent) sets. Classify the data using k-nearest neighbors with up to k = 10. Use Age, Gender, Work experience, Income (in 1000 $), and Family size as input variables and Personal loan as the output variable. In Step 2 of XLMiner's k-nearest neighbors Classification procedure, be sure to Normalize input data and to Score on best k between 1 and specified value. Generate lift charts for both the validation data and test data.

a. For the cutoff probability value 0.5, what value of k minimizes the overall error rate on the validation data? Explain the difference in the overall error rate on the training, validation, and test data.

b. Examine the decile-wise lift chart on the test data. Identify and interpret the first decile lift.

c. For cutoff probability values of 0.5, 0.4, 0.3, and 0.2, what are the corresponding Class 1 error rates and Class 0 error rates on the validation data?
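Lowering the cutoff labels more records as Class 1, which usually lowers the Class 1 error rate and raises the Class 0 error rate. The sketch below recomputes both rates for each cutoff from a list of predicted probabilities. It assumes a record is classified as 1 when its probability is at least the cutoff and that both classes are present; the function name and sample values are hypothetical.

```python
def error_rates_by_cutoff(probs, actual, cutoffs=(0.5, 0.4, 0.3, 0.2)):
    """Map each cutoff to (Class 1 error rate, Class 0 error rate)."""
    n1 = sum(actual)
    n0 = len(actual) - n1
    results = {}
    for cutoff in cutoffs:
        predicted = [1 if p >= cutoff else 0 for p in probs]
        fn = sum(a == 1 and q == 0 for a, q in zip(actual, predicted))
        fp = sum(a == 0 and q == 1 for a, q in zip(actual, predicted))
        results[cutoff] = (fn / n1, fp / n0)
    return results
```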


57

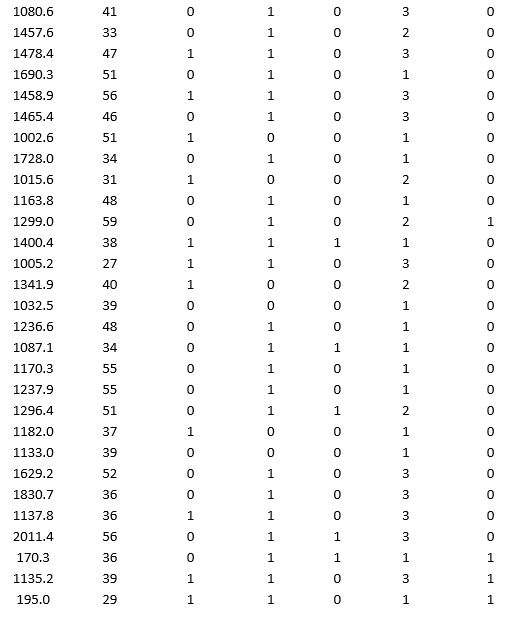

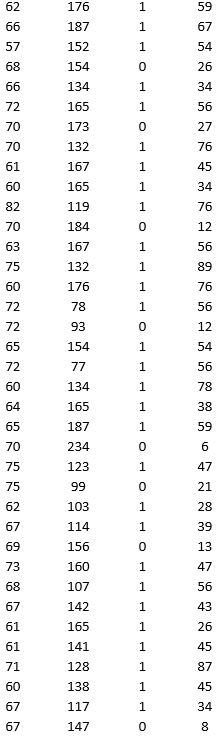

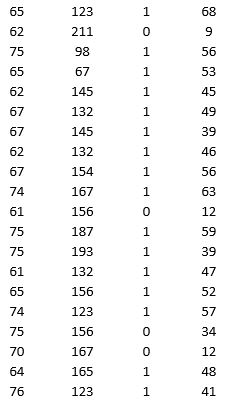

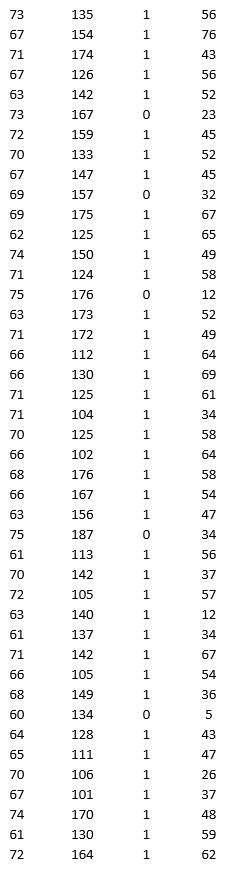

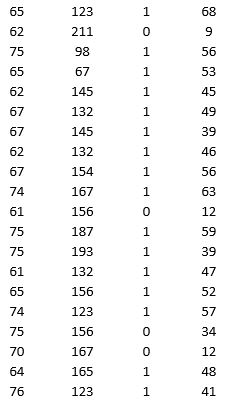

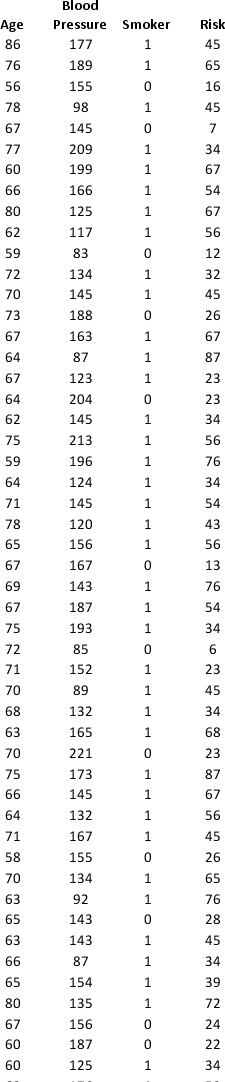

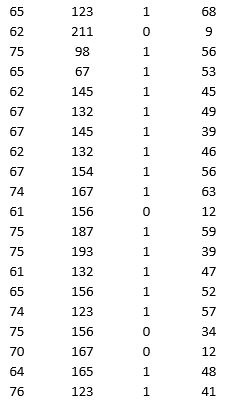

A research team wanted to assess the relationship between age, systolic blood pressure, smoking, and risk of stroke. A sample of 150 patients who had a stroke is selected and the data collected are given below. Here, for the variable Smoker, 1 represents smokers and 0 represents nonsmokers.

Partition the data into training (50 percent), validation (30 percent), and test (20 percent) sets. Predict the Risk of stroke using k-nearest neighbors with up to k = 20. Use Risk as the output variable and all the other variables as input variables. In Step 2 of XLMiner's k-Nearest Neighbors Prediction procedure, be sure to Normalize input data and to Score on best k between 1 and specified value. Generate a Detailed Scoring report for all three sets of data.

a. What value of k minimizes the root mean squared error (RMSE) on the validation data?

b. What is the RMSE on the validation data and test data?

c. What is the average error on the validation data and test data? What does this suggest?


58

To examine the local housing market in a particular region, a sample of 120 homes sold during a year is collected. The data are given below.

Apply hierarchical clustering with 10 clusters using LandValue ($), BuildingValue ($), Acres, Age, and Price ($) as variables. Be sure to Normalize input data in Step 2 of the XLMiner Hierarchical Clustering procedure. Use Ward's method as the clustering method.

a. Use a PivotTable on the data in the HC_Clusters1 worksheet to compute the cluster centers for the clusters in the hierarchical clustering.

b. Identify the cluster with the largest average price. Using all the variables, how would you characterize this cluster?

c. Identify the smallest cluster.
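A PivotTable that averages each variable by cluster label produces the cluster centers, and counting records per label gives the cluster sizes. The sketch below does the same with plain lists; the column order (for example LandValue, BuildingValue, Acres, Age, Price) and the function names are hypothetical.

```python
def cluster_centers(rows, labels):
    """Mean of every variable within each cluster, like a PivotTable of averages."""
    centers = {}
    for label in sorted(set(labels)):
        members = [r for r, lab in zip(rows, labels) if lab == label]
        centers[label] = [sum(col) / len(members) for col in zip(*members)]
    return centers

def cluster_sizes(labels):
    """Number of records in each cluster; the smallest is min(sizes, key=sizes.get)."""
    return {label: labels.count(label) for label in sorted(set(labels))}
```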


59

A research team wanted to assess the relationship between age, systolic blood pressure, smoking, and risk of stroke. A sample of 150 patients who had a stroke is selected and the data collected are given below. Here, for the variable Smoker, 1 represents smokers and 0 represents nonsmokers.

Partition the data into training (50 percent), validation (30 percent), and test (20 percent) sets. Predict the Risk of stroke using a regression tree. Use Risk as the output variable and all the other variables as input variables. In Step 2 of XLMiner's Regression Tree procedure, be sure to Normalize input data, to set the Maximum #splits for input variables to 74, to set the Minimum #records in a terminal node to 1, and specify Using Best prune tree as the scoring option. In Step 3 of XLMiner's Regression Tree procedure, set the maximum number of levels to 7. Generate the Full tree, Best pruned tree, and Minimum error tree. Generate a Detailed Scoring report for all three sets of data.

a. In terms of number of decision nodes, compare the size of the full tree to the size of the best pruned tree.

b. What is the root mean squared error (RMSE) of the best pruned tree on the validation data and on the test data?

c. What is the average error on the validation data and test data? What does this suggest?

d. By examining the best pruned tree, what are the critical variables in predicting the risk?
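
Parts b and c rely on two summaries of prediction error. A minimal sketch, assuming residuals are defined as actual minus predicted (whether XLMiner's "Average Error" uses this sign is an assumption here):

- RMSE measures the typical size of an error.
- The average error shows whether errors lean in one direction.

```python
import math

def _residuals(actual, predicted):
    """Residuals defined as actual - predicted (assumed sign convention)."""
    if len(actual) != len(predicted) or not actual:
        raise ValueError("need two equal-length, non-empty sequences")
    return [a - p for a, p in zip(actual, predicted)]

def rmse(actual, predicted):
    """Root mean squared error: square root of the mean squared residual."""
    res = _residuals(actual, predicted)
    return math.sqrt(sum(e * e for e in res) / len(res))

def average_error(actual, predicted):
    """Mean residual: near 0 means no systematic bias; positive means the
    model under-predicts on average, negative means it over-predicts."""
    res = _residuals(actual, predicted)
    return sum(res) / len(res)
```

Compute both measures separately on the validation and test predictions. An average error near zero alongside a sizable RMSE indicates errors that are large but roughly balanced between over- and under-prediction.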

60

As part of the quarterly reviews, the manager of a retail store analyzes the quality of customer service based on the periodic customer satisfaction ratings (on a scale of 1 to 10 with 1 = Poor and 10 = Excellent). To understand the level of service quality, which includes the waiting times of the customers in the checkout section, he collected the following data on 100 customers who visited the store.

Apply k-means clustering with k = 5 using Wait Time (min), Purchase Amount ($), Customer Age, and Customer Satisfaction Rating as variables. Be sure to Normalize input data, and specify 50 iterations and 10 random starts in Step 2 of the XLMiner k-Means Clustering procedure. Analyze the resultant clusters. What is the smallest cluster? What is the least dense cluster (as measured by the average distance in the cluster)? What reasons do you see for low customer satisfaction ratings?
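
The k-means settings in this exercise (normalized inputs, k = 5, at most 50 iterations, 10 random starts) can be sketched in plain Python. This illustrates the technique under stated assumptions and is not XLMiner's algorithm:

- Normalization is taken to be z-scoring.
- Each random start picks k records as the initial centers.
- The start with the lowest within-cluster sum of squares is kept.
- "Average distance in cluster" is taken to be the mean Euclidean distance from members to their center, in normalized units.

All function names are hypothetical.

```python
import math
import random
from statistics import mean, stdev

def normalize(rows):
    """Z-score each column (assumed equivalent of 'Normalize input data')."""
    cols = list(zip(*rows))
    mus = [mean(c) for c in cols]
    sds = [stdev(c) or 1.0 for c in cols]  # avoid dividing by zero
    return [[(x - m) / s for x, m, s in zip(r, mus, sds)] for r in rows]

def dist(a, b):
    """Euclidean distance between two points."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def assign(data, centers):
    """Label each point with the index of its nearest center."""
    return [min(range(len(centers)), key=lambda c: dist(p, centers[c]))
            for p in data]

def kmeans_once(data, k, iters, rng):
    """One random start: Lloyd iterations until stable or `iters` is reached."""
    centers = [list(p) for p in rng.sample(data, k)]
    for _ in range(iters):
        labels = assign(data, centers)
        new_centers = []
        for c in range(k):
            members = [p for p, lab in zip(data, labels) if lab == c]
            # keep the old center if a cluster empties out
            new_centers.append([mean(col) for col in zip(*members)]
                               if members else centers[c])
        if new_centers == centers:
            break
        centers = new_centers
    labels = assign(data, centers)
    sse = sum(dist(p, centers[lab]) ** 2 for p, lab in zip(data, labels))
    return sse, labels, centers

def kmeans(rows, k=5, iters=50, starts=10, seed=0):
    """Keep the start with the lowest within-cluster SSE, then report each
    cluster's size and average member-to-center distance."""
    data = normalize(rows)
    rng = random.Random(seed)
    runs = [kmeans_once(data, k, iters, rng) for _ in range(starts)]
    _, labels, centers = min(runs, key=lambda r: r[0])
    summary = {}
    for c in range(k):
        members = [p for p, lab in zip(data, labels) if lab == c]
        avg_d = mean(dist(p, centers[c]) for p in members) if members else 0.0
        summary[c] = (len(members), avg_d)
    return labels, summary
```

In `summary`, the smallest cluster has the lowest count, and the least dense cluster has the largest average distance. Cluster indices are arbitrary and will not line up with XLMiner's numbering.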
