Essay

(a) Construct a binary Huffman code for a source with three symbols and , having probabilities , and 0.1, respectively. What is its average codeword length, in bits per symbol? What is the entropy of this source?

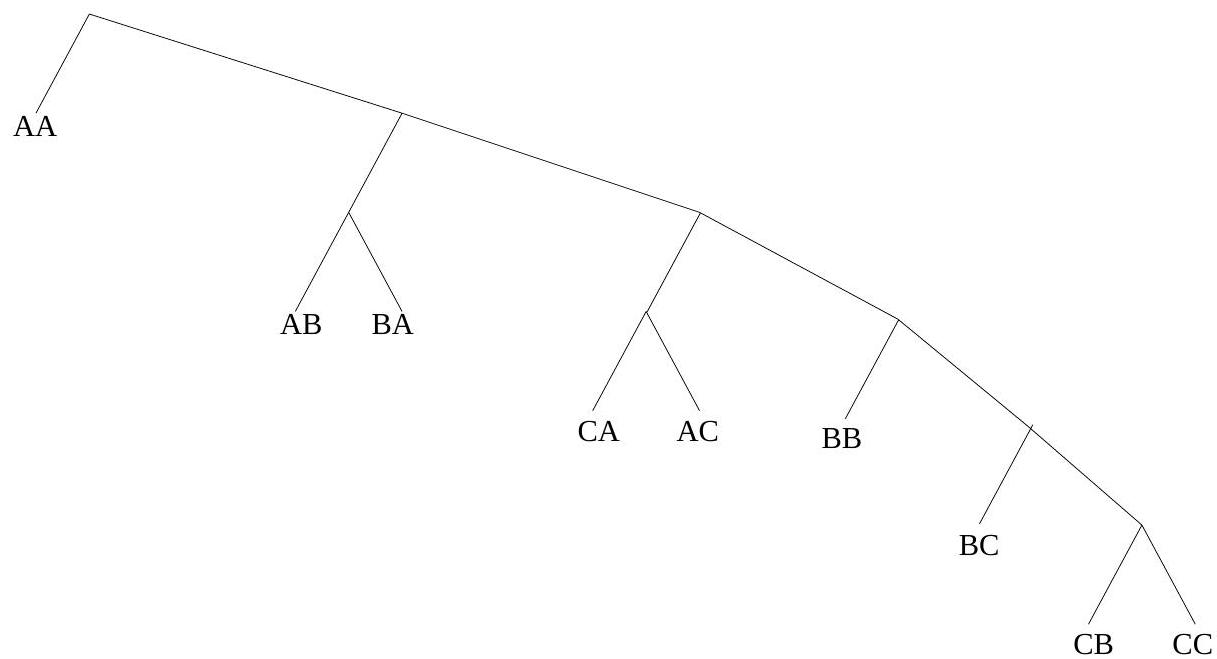

(b) Let's now extend this code by grouping symbols into 2-character groups - a type of vector quantization (VQ). Compare the performance now, in bits per original source symbol, with the best possible.
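Both parts can be checked numerically. The following is a minimal Python sketch, not the reference solution. The symbol names `A`, `B`, `C` and the probabilities 0.6 and 0.3 are assumptions made purely for illustration, since only the value 0.1 survives in the question text. The helper names (`huffman_lengths`, `average_length`, `entropy`) are likewise hypothetical. The sketch builds a binary Huffman code for the single symbols, then for all 2-character groups (assuming symbols are independent), and compares the bits per original symbol with the entropy.

```python
import heapq
import itertools
import math


def huffman_lengths(probs):
    """Return {symbol: codeword length in bits} for a binary Huffman code."""
    if len(probs) == 1:
        return {sym: 1 for sym in probs}
    counter = itertools.count()  # tie-breaker so the heap never compares lists
    heap = [(p, next(counter), [sym]) for sym, p in probs.items()]
    heapq.heapify(heap)
    lengths = dict.fromkeys(probs, 0)
    while len(heap) > 1:
        # Merge the two least probable nodes; every symbol beneath
        # the merged node gains one bit of codeword length.
        p1, _, syms1 = heapq.heappop(heap)
        p2, _, syms2 = heapq.heappop(heap)
        for sym in syms1 + syms2:
            lengths[sym] += 1
        heapq.heappush(heap, (p1 + p2, next(counter), syms1 + syms2))
    return lengths


def average_length(probs, lengths):
    """Expected codeword length in bits per coded unit."""
    return sum(p * lengths[sym] for sym, p in probs.items())


def entropy(probs):
    """Shannon entropy in bits per symbol."""
    return -sum(p * math.log2(p) for p in probs.values() if p > 0)


# ASSUMED values: only 0.1 appears in the question; A, B, C, 0.6 and 0.3
# are hypothetical placeholders chosen so the probabilities sum to 1.
single = {"A": 0.6, "B": 0.3, "C": 0.1}

# Part (b): all 2-character groups, assuming independent symbols.
pairs = {
    a + b: pa * pb
    for (a, pa), (b, pb) in itertools.product(single.items(), repeat=2)
}

avg1 = average_length(single, huffman_lengths(single))
avg2 = average_length(pairs, huffman_lengths(pairs)) / 2  # per original symbol
H = entropy(single)

print(f"single-symbol Huffman: {avg1:.4f} bits/symbol")
print(f"pair Huffman:          {avg2:.4f} bits/symbol")
print(f"entropy (lower bound): {H:.4f} bits/symbol")
```

Under these assumed probabilities the single-symbol code averages 1.4 bits per symbol, the pair code averages 1.335 bits per original symbol, and the entropy is about 1.2955 bits per symbol, so grouping closes part of the gap to the bound. With the actual probabilities from the question the numbers may differ.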

Fig. 7.1:

Correct Answer:

Verified
