Multiple Choice

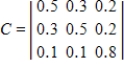

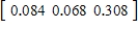

A Markov chain has the transition matrix and initial-probability vector shown in the accompanying images. Find the probability vector for the third stage of the Markov chain.

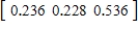

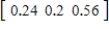

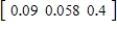

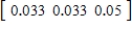

A)

B)

C)

D)

E)

Correct Answer:

Verified
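
The matrix and vector for this question survive only as images, so the values cannot be reproduced here. As a rough sketch of the method, the example below uses a hypothetical 2-state transition matrix `P` and a hypothetical initial vector `v0` (neither is taken from the question) and multiplies the row vector by the matrix three times. It assumes the initial vector counts as stage 0, so the third stage is `v0 * P^3`. Some texts number the stages differently, so check the convention in use. The helper names `step` and `stage` are illustrative only.

```python
from fractions import Fraction as F

def step(v, P):
    """Multiply the row vector v by the transition matrix P."""
    return [sum(v[i] * P[i][j] for i in range(len(v)))
            for j in range(len(P[0]))]

def stage(v0, P, n):
    """Apply n transitions to the initial probability vector v0."""
    v = list(v0)
    for _ in range(n):
        v = step(v, P)
    return v

# Hypothetical values, NOT the ones from the question:
# each row of P sums to 1, and v0 is a probability vector.
P = [[F(1, 2), F(1, 2)],
     [F(1, 4), F(3, 4)]]
v0 = [F(1), F(0)]

v3 = stage(v0, P, 3)
print([str(x) for x in v3])  # ['11/32', '21/32']
```

Exact fractions are used so the result can be compared directly with answer choices written as fractions. The entries of each stage vector should still sum to 1, which is a quick sanity check on the arithmetic.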


Q2: If the probability of rain today is

Q3: Suppose that a marksman hits the bull's-eye

Q4: Compute

Q6: A ball is drawn from a bag

Q7: Suppose that a person groups 18 objects

Q8: Compute

Q9: The local community-service funding organization in a

Q10: A red ball and 19 white balls

Q11: Compute

Q125: The probability that daughters of a mother