Exam 12: Markov Process Models (49 Questions)

Markov processes are a powerful decision-making tool useful in explaining the behavior of systems and determining limiting behavior.

(True/False)

Correct Answer: False

Once a process reaches steady-state, the state probabilities will never change.

(True/False)

Correct Answer: True

"How you arrived at where you are now has no bearing on where you go next." This, simply put, is the Markovian memoryless property.

(True/False)

Correct Answer: True

Is this an acceptable transition matrix? Explain your answer.

$$
\begin{pmatrix}
0.3 & 0.3 & 0.4 & 0 \\
0.2 & 0.5 & 0 & 0.3 \\
0.1 & 0.6 & 0.2 & 0.1
\end{pmatrix}
$$

(Essay)
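
Questions of this kind come down to a few mechanical checks. The sketch below assumes the usual row convention (entry `P[i][j]` is the probability of moving from state `i` to state `j`), so a valid matrix is square, has entries between 0 and 1, and has rows that each sum to 1. The function name `is_transition_matrix`, the tolerance `tol`, and both sample matrices are hypothetical and chosen only for illustration; they are not taken from the exam.

```python
def is_transition_matrix(rows, tol=1e-9):
    """Return True if `rows` is square, has entries in [0, 1], and each row sums to 1."""
    n = len(rows)
    if n == 0:
        return False
    for row in rows:
        # Square: every row must have as many entries as there are rows (states).
        if len(row) != n:
            return False
        # Each entry is a probability.
        if any(p < 0 or p > 1 for p in row):
            return False
        # From any state, the process must move somewhere (possibly to itself).
        if abs(sum(row) - 1.0) > tol:
            return False
    return True


# Hypothetical examples (not from the exam).
valid_example = [[0.9, 0.1],
                 [0.5, 0.5]]
non_square_example = [[0.5, 0.5, 0.0],
                      [0.2, 0.3, 0.5]]

print(is_transition_matrix(valid_example))
print(is_transition_matrix(non_square_example))
```

The same three checks can be applied by hand to any candidate matrix: count rows against columns first, then inspect the entries and the row sums.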

Which of the following is a necessary characteristic of a Markovian transition matrix?

(Multiple Choice)

What is the minimum percentage of transition probabilities that must be nonzero?

(Essay)

The values towards which state probabilities converge are the steady-state probabilities.

(True/False)
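
The convergence described in this statement can be observed numerically. The sketch below repeatedly multiplies a state vector by a transition matrix until the vector stops changing. The two-state matrix `P`, the starting vector, and the helper names `step` and `steady_state` are assumptions made for illustration, and the loop only settles for chains that actually have a limiting distribution (a periodic chain, for instance, would not).

```python
def step(state, P):
    """One stage of the chain: new_state[j] = sum over i of state[i] * P[i][j]."""
    n = len(P)
    return [sum(state[i] * P[i][j] for i in range(n)) for j in range(n)]


def steady_state(P, start, tol=1e-12, max_steps=10_000):
    """Iterate the chain until successive state vectors differ by less than `tol`."""
    state = list(start)
    for _ in range(max_steps):
        nxt = step(state, P)
        if max(abs(a - b) for a, b in zip(nxt, state)) < tol:
            return nxt
        state = nxt
    raise RuntimeError("state probabilities did not converge")


# Hypothetical two-state chain (not from the exam).
P = [[0.9, 0.1],
     [0.5, 0.5]]
pi = steady_state(P, [1.0, 0.0])
print(pi)
```

For this particular matrix the balance equation 0.1 π₁ = 0.5 π₂ together with π₁ + π₂ = 1 gives π = (5/6, 1/6), which is the vector the iteration approaches from any starting distribution.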

Although the number of possible states in a Markov process may be infinite, it must be countably infinite.

(True/False)

The "mean recurrence time" for a state in a Markov process:

(Multiple Choice)

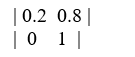

This transition matrix represents what type of Markov process?

(Multiple Choice)

All of the following are necessary characteristics of a Markov process except:

(Multiple Choice)

The state vector for stage j of a Markov chain with n states:

(Multiple Choice)

Charles dines out twice a week. On Tuesdays, he always frequents the same Mexican restaurant; on Thursdays, he chooses at random among Greek, Italian, and Thai (but never Mexican). Is this transient, periodic, or recurrent behavior?

(Essay)

In a Markovian system, is it possible to have only one absorbing state?

(Essay)

A firm displeased with its projected steady-state market share may try to improve the situation by taking steps which hopefully will:

(Multiple Choice)

In a Markovian transition matrix, each column's probabilities sum to 1.

(True/False)
