Exam 18: The Theory of Multiple Regression

Write the following four restrictions in the form where the hypotheses are to be tested simultaneously.

=2, +=1, =0, =-.

Can you write the following restriction in the same format? Why not?

The restriction cannot be written in the same format because it is nonlinear.

Write an essay on the difference between the OLS estimator and the GLS estimator.

Answers will vary by student, but some of the following points should be made.

The multiple regression model is

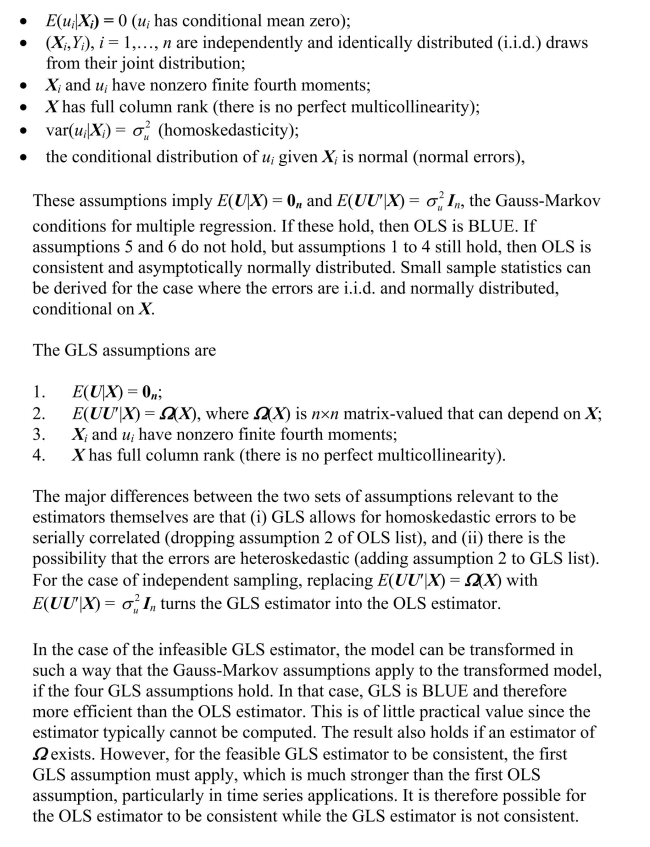

which, in matrix form, can be written as . The OLS estimator is derived by minimizing the squared prediction mistakes and results in the following formula: . There are two GLS estimators. The infeasible GLS estimator is . Since is typically unknown, the estimator cannot be calculated, and hence its name. However, a feasible GLS estimator can be calculated if is a known function of a number of parameters which can be estimated. Once these parameters have been estimated, they can then be used to calculate , the estimator of . The feasible GLS estimator is defined as . There are extended least squares assumptions.

The multiple regression model can be written in matrix form as follows: a. .

b. .

c. .

d. .

The Gauss-Markov theorem for multiple regressions states that the OLS estimator

Assume that the data looks as follows: Using the formula for the OLS estimator , derive the formula for , the only slope in this "regression through the origin."

The presence of correlated error terms creates problems for inference based on OLS. These can be overcome by

Write the following three linear equations in matrix format , where is a vector containing , and is a matrix of coefficients, and is a vector of constants. q = 5 +3 p - 2 y

q = 10 - p + 10 y

p = 6 y

A joint hypothesis that is linear in the coefficients and imposes a number of restrictions can be written as a. .

b. .

c. .

d. .

In Chapter 10 of your textbook, panel data estimation was introduced.Panel data consist

of observations on the same n entities at two or more time periods T.For two variables,

you have where n could be the U.S.states.The example in Chapter 10 used annual data from 1982

to 1988 for the fatality rate and beer taxes.Estimation by OLS, in essence, involved

"stacking" the data.

(a)What would the variance-covariance matrix of the errors look like in this case if you

allowed for homoskedasticity-only standard errors? What is its order? Use an example of

a linear regression with one regressor of 4 U.S.states and 3 time periods.

Using the model and the extended least squares assumptions, derive the OLS estimator . Discuss the conditions under which is invertible.

One of the properties of the OLS estimator is a. .

b. that the coefficient vector has full rank.

c. .

d.

The difference between the central limit theorems for a scalar and vector-valued random variables is

In the case when the errors are homoskedastic and normally distributed, conditional on X, then a. is distributed , where .

b. is distributed , where .

c. is distributed , where .

d. where .

Consider the multiple regression model from Chapter 5, where k = 2 and the assumptions

of the multiple regression model hold.

(a)Show what the X matrix and the vector would look like in this case.

In order for a matrix A to have an inverse, its determinant cannot be zero.Derive the

determinant of the following matrices:

where

One implication of the extended least squares assumptions in the multiple regression model is that a. feasible GLS should be used for estimation.

b. .

c. is singular.

d. the conditional distribution of given is .

Filters

- Essay(0)

- Multiple Choice(0)

- Short Answer(0)

- True False(0)

- Matching(0)