Exam 12: Markov Analysis


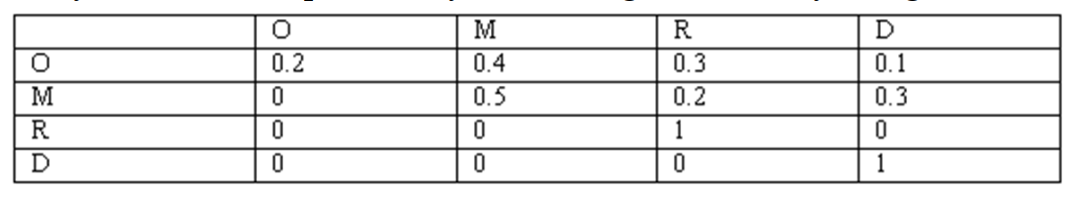

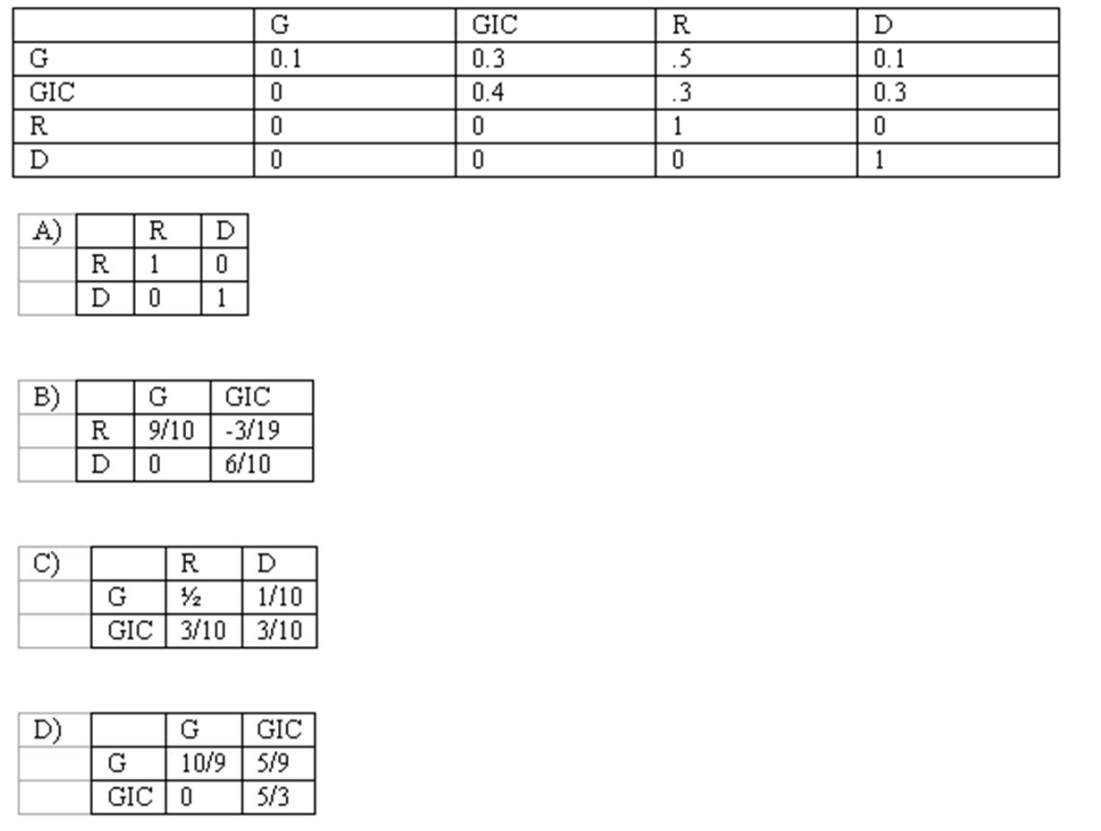

The U.S. Army hires only newly minted graduates for a special officer program. They always start on January 1 and are considered for promotion every January 1st thereafter. On January 1st, existing officers (state O), those who were officers last year, may continue as officers, be promoted to the manager cadre (state M), retire (state R), or die while in service (state D). Everyone in state M on January 1st may continue in state M or move to state R or D. Using the data from the transition matrix and using 1 year as the transition time, calculate the probability of an officer eventually retiring or dying while in service. Similarly, calculate the probability of a manager eventually retiring or dying while in service.

(Essay)

Correct Answer:

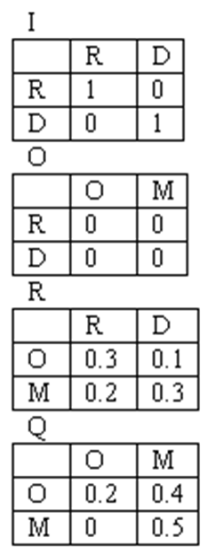

A. Partition the matrix into I, O, R, and Q

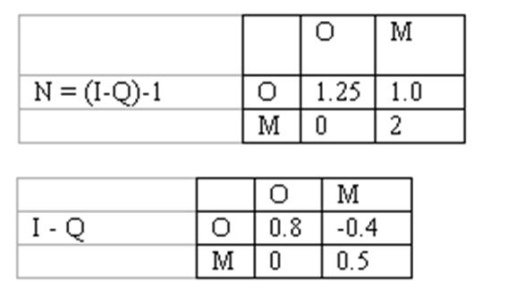

Expected number of times the system will be in any of the non-absorbing states before it gets absorbed in an absorbing state

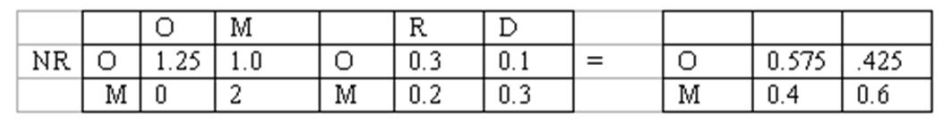

Anyone who is an officer will eventually retire with probability 0.575 and eventually die while in service with probability 0.425.

Anyone who is a manager will eventually retire with probability 0.4 and eventually die while in service with probability 0.6.
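The absorption calculation can be sketched in a few lines of Python. The transition matrix for this problem is only available as an image, so the values of `Q` and `R` below are hypothetical, chosen only to be consistent with the stated answer; they are not taken from the source. The sketch forms the fundamental matrix N = (I - Q)^(-1) and multiplies it by R to get the absorption probabilities.

```python
def inverse_2x2(m):
    """Invert a 2x2 matrix given as nested lists."""
    (a, b), (c, d) = m
    det = a * d - b * c
    if det == 0:
        raise ValueError("matrix is singular")
    return [[d / det, -b / det], [-c / det, a / det]]


def matmul(x, y):
    """Multiply two matrices given as nested lists."""
    return [
        [sum(x[i][k] * y[k][j] for k in range(len(y))) for j in range(len(y[0]))]
        for i in range(len(x))
    ]


# Hypothetical data (assumption, not from the source image).
# Transient states in order: O (officer), M (manager).
Q = [[0.6, 0.2],   # O -> O, O -> M
     [0.0, 0.5]]   # M -> O, M -> M
# Absorbing states in order: R (retired), D (dead while in service).
R = [[0.15, 0.05],  # O -> R, O -> D
     [0.20, 0.30]]  # M -> R, M -> D

# I - Q, then the fundamental matrix N = (I - Q)^(-1).
I_minus_Q = [[(1.0 if i == j else 0.0) - Q[i][j] for j in range(2)] for i in range(2)]
N = inverse_2x2(I_minus_Q)

# B[i][j] = probability that transient state i is eventually absorbed in state j.
B = matmul(N, R)
print("Fundamental matrix N:", N)
print("Absorption probabilities B = N R:", B)
```

Each row of `B` sums to 1, because every officer or manager is eventually absorbed in either R or D.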

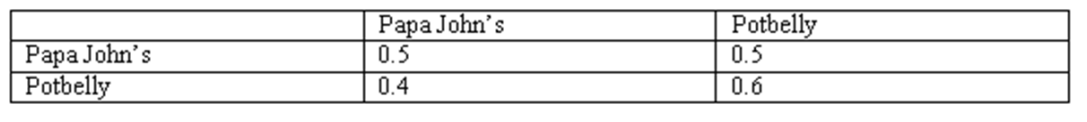

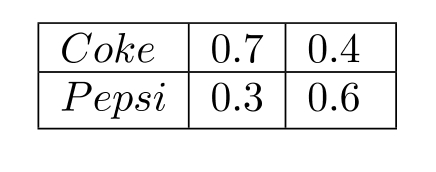

Vikram eats a sandwich for dinner every Sunday. He only likes Papa John's or Potbelly. His behavior in this context is captured by the following Markov transition matrix.

In the next 90 Sundays, on average, how many Sundays would Vikram have eaten at Papa John's?

(Multiple Choice)

Correct Answer:

A
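A long-run count like this one is usually the number of periods multiplied by the steady-state probability of the state. The sketch below shows that calculation for a two-state chain. The matrix `P` is hypothetical (the actual matrix appears only as an image), and rows are assumed to sum to 1.

```python
def steady_state_two_state(P):
    """Long-run proportions for a 2-state chain whose rows sum to 1."""
    a = P[0][1]  # probability of leaving state 0
    b = P[1][0]  # probability of leaving state 1
    if a + b == 0:
        raise ValueError("both states are absorbing; no unique steady state")
    return (b / (a + b), a / (a + b))


# Hypothetical matrix (assumption). State 0 = Papa John's, state 1 = Potbelly.
P = [[0.6, 0.4],
     [0.2, 0.8]]

pi_papa_johns, pi_potbelly = steady_state_two_state(P)
expected_papa_johns_sundays = 90 * pi_papa_johns
print(pi_papa_johns, pi_potbelly, expected_papa_johns_sundays)
```

With these illustrative numbers the long-run share for Papa John's is one third, so about 30 of the 90 Sundays; the real answer depends on the matrix given in the question.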

Transition probabilities indicate the tendency of the system to change from one state to another after an elapse of one period.

(True/False)

Correct Answer:

True

Vikram eats a sandwich for dinner every Sunday. He only likes Papa John's or Potbelly. His behavior in this context is captured by the following Markov transition matrix.

Suppose that on the first Sunday of 2006 Vikram ate at Potbelly. What is the probability that he would eat there on the third Sunday of 2006?

(Multiple Choice)
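Going from the first Sunday to the third Sunday takes two transitions, so the required probability is an entry of the two-step matrix P². A minimal sketch follows, using a hypothetical matrix `P` (an assumption; the actual matrix is only shown as an image).

```python
def mat_mul(X, Y):
    """Multiply two square matrices given as nested lists."""
    n = len(X)
    return [[sum(X[i][k] * Y[k][j] for k in range(n)) for j in range(n)] for i in range(n)]


def mat_pow(P, steps):
    """Return P raised to a non-negative integer power."""
    n = len(P)
    result = [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]
    for _ in range(steps):
        result = mat_mul(result, P)
    return result


# Hypothetical matrix (assumption). State 0 = Papa John's, state 1 = Potbelly.
P = [[0.6, 0.4],
     [0.2, 0.8]]

P2 = mat_pow(P, 2)
# Entry [1][1]: start at Potbelly, be at Potbelly two Sundays later.
p_potbelly_after_two = P2[1][1]
print(p_potbelly_after_two)
```

The same entry can be read off a two-level tree diagram: it is the sum over both intermediate states of the product of the two branch probabilities.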

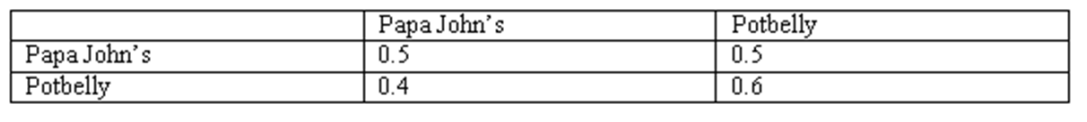

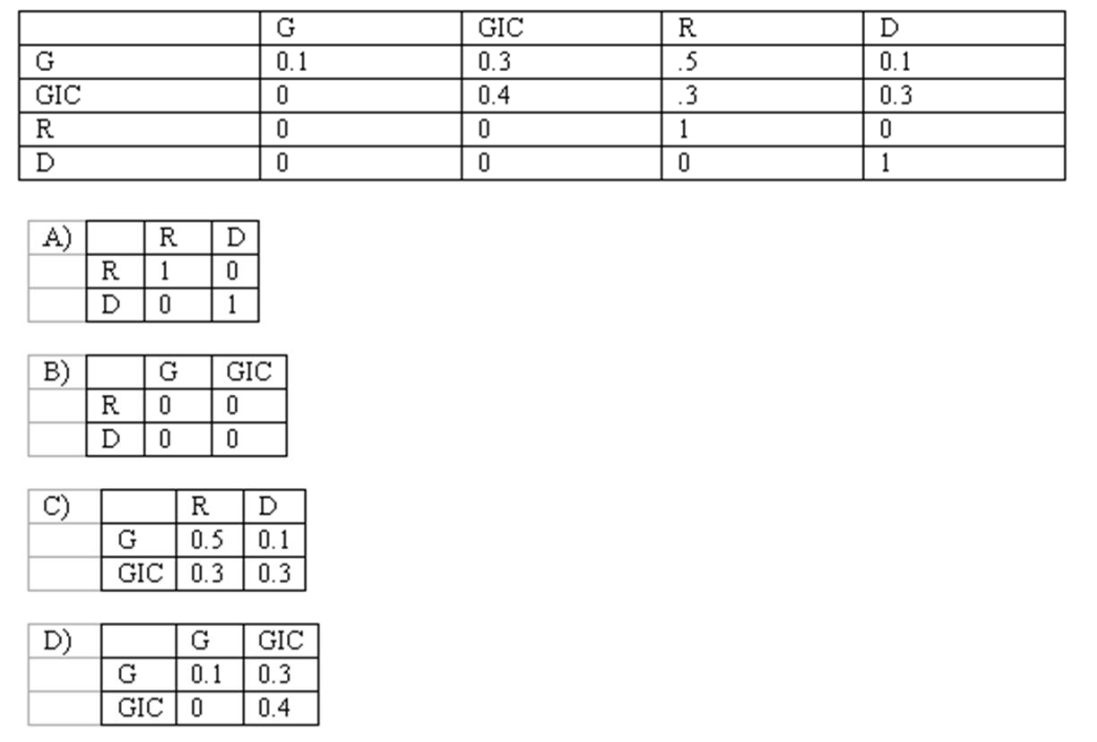

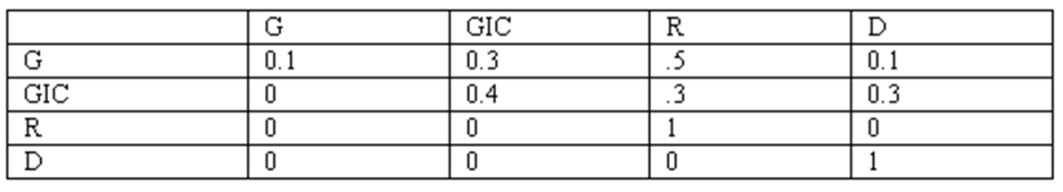

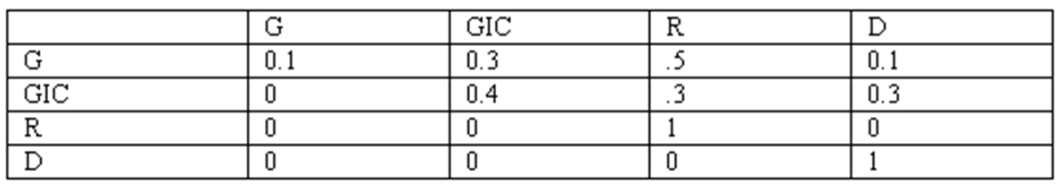

XYZ Inc. hires only retired people for its greeter's job. They always start on January 1 and are considered for promotion every January 1st thereafter. On every January 1st, any existing greeter (state G), meaning anyone who was a greeter last year, may be retired (state R), may be dead (state D), may continue as a greeter (state G), or may be promoted to greeter-in-chief (state GIC). Persons in state GIC may continue in it or move to states D or R in one year. The following is the transition matrix. While trying to find the probability of eventual absorption in the absorbing states, Matrix R for this problem is:

(Multiple Choice)

A tree diagram is a very useful technique for analyzing the long term behavior of a Markov system.

(True/False)

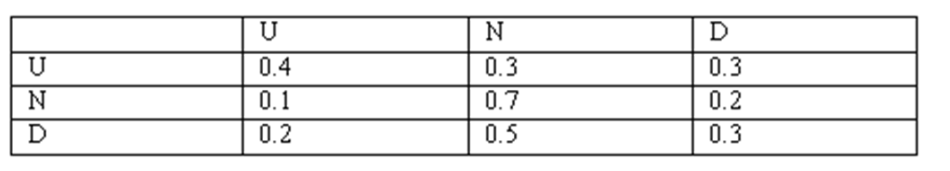

Goldman Sachs commodity analyst John Roberts wanted to use Markov chains to analyze the price movement of gold. He looked at the changes in the closing price per ounce of gold bullion on the Chicago Board of Trade for any two consecutive trading days. He recognized three states: U (up): today's closing price - previous closing price > 0; N (neutral): today's closing price - previous closing price = 0; and D (down): today's closing price - previous closing price < 0. John goes on to construct a transition matrix based on these state definitions; the matrix is given below.

(A) Draw a tree diagram showing the choices for two periods, starting from and .

(B) If the current state of the bullion market were , what would be the probability distribution for the states occupied by the bullion market after 2 trading days?

(C) What would be the long-run proportions (steady-state probabilities) for each state?

(D) In a five-year period with 1,201 trading days, how many of these days would you expect the system to be in U state?

(Essay)
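Parts (B) through (D) can be sketched numerically. The matrix `P` below is hypothetical, since the actual matrix is only shown as an image, and the starting state for part (B) is assumed to be U because the state named in the question is missing. The sketch propagates a distribution two steps, finds the steady state by repeated multiplication, and scales it to 1,201 trading days.

```python
def step(dist, P):
    """One transition: row vector dist times matrix P."""
    n = len(P)
    return [sum(dist[i] * P[i][j] for i in range(n)) for j in range(n)]


def steady_state(P, tol=1e-12, max_iter=10_000):
    """Approximate the steady-state distribution by iterating dist <- dist P."""
    n = len(P)
    dist = [1.0 / n] * n
    for _ in range(max_iter):
        nxt = step(dist, P)
        if max(abs(x - y) for x, y in zip(nxt, dist)) < tol:
            return nxt
        dist = nxt
    raise RuntimeError("iteration did not converge")


# Hypothetical matrix (assumption). State order: U, N, D; rows sum to 1.
P = [[0.6, 0.3, 0.1],
     [0.3, 0.4, 0.3],
     [0.2, 0.3, 0.5]]

# (B) Distribution after 2 trading days, assuming the market starts in U.
after_two_days = step(step([1.0, 0.0, 0.0], P), P)

# (C) Long-run proportions for U, N, D.
pi = steady_state(P)

# (D) Expected number of days in U out of 1,201 trading days.
expected_up_days = 1201 * pi[0]
print(after_two_days, pi, expected_up_days)
```

Repeated multiplication converges here because every entry of the illustrative matrix is positive; solving the balance equations together with the condition that the probabilities sum to 1 gives the same result.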

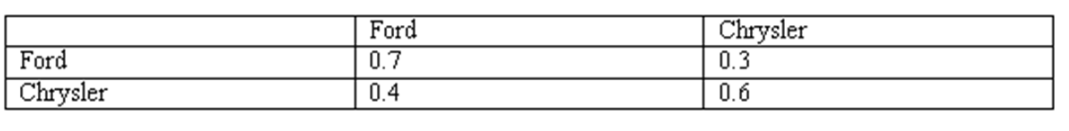

Joe Smith, a loyal lessee of American cars, changes cars exactly once every two years. Joe leases either a Ford or a Chrysler only. His leasing behavior is modeled as a Markov chain, and the transition matrix is given below.

If in year 4 (3rd lease), Joe is equally likely to be leasing a Ford or a Chrysler (0.5 each), what is the probability that he would lease a Chrysler next time (4th lease)?

(Multiple Choice)
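When the current state is uncertain, the next-period probability is the current distribution multiplied by the transition matrix. A minimal sketch under assumed numbers: the 0.5/0.5 distribution comes from the question, while the matrix `P` is hypothetical because the actual matrix is only shown as an image.

```python
def next_distribution(dist, P):
    """Row vector dist times matrix P: the distribution one lease later."""
    return [sum(dist[i] * P[i][j] for i in range(len(P))) for j in range(len(P[0]))]


# Hypothetical matrix (assumption). State 0 = Ford, state 1 = Chrysler.
P = [[0.7, 0.3],
     [0.4, 0.6]]

current = [0.5, 0.5]  # equally likely to be leasing a Ford or a Chrysler now
upcoming = next_distribution(current, P)
p_chrysler_next = upcoming[1]
print(p_chrysler_next)
```

This is the law of total probability: P(Chrysler next) = 0.5 × P(Ford → Chrysler) + 0.5 × P(Chrysler → Chrysler).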

XYZ Inc. hires only retired people for its greeter's job. They always start on January 1 and are considered for promotion every January 1st thereafter. On every January 1st, any existing greeter (state G), meaning anyone who was a greeter last year, may be retired (state R), may be dead (state D), may continue as a greeter (state G), or may be promoted to greeter-in-chief (state GIC). Persons in state GIC may continue in it or move to states D or R in one year. The following is the transition matrix. While trying to find the probability of eventual absorption in the absorbing states, Matrix $N = (I - Q)^{-1}$ for this problem is:

(Multiple Choice)

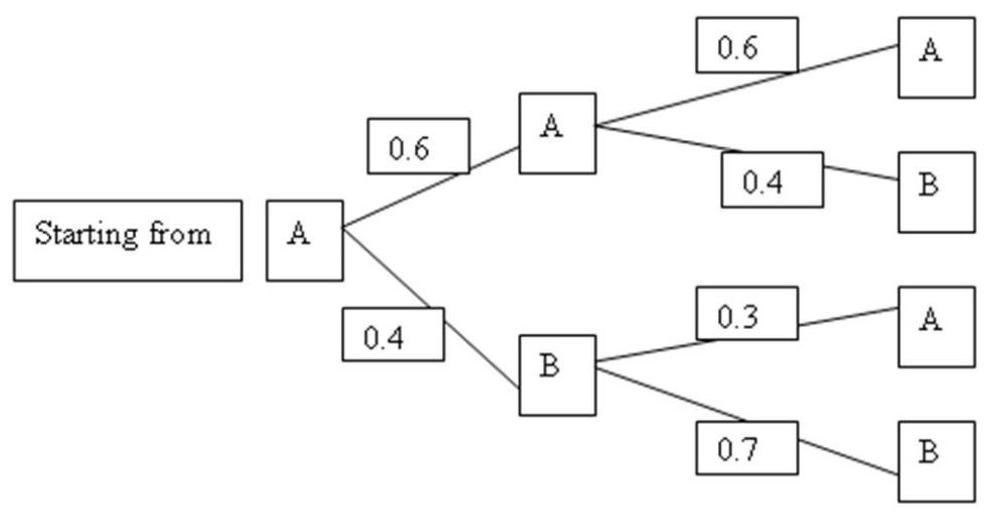

Jim Cramer, a stock analyst, models the movement of the closing price on the NYSE of the stock WidgetsR-Us Inc. (symbol: WRU) as a Markov chain with a transition time of 1 day. There are two states in this Markov system: State A, the closing price increased or stayed the same from the previous day, and State B, the closing price decreased from the previous day. Suppose that the system is in State A at the end of today. What is the probability that it will be in State B after two trading days (48 hours)?

(Multiple Choice)

Short term behavior of a Markov system depends on the current state and transition probabilities.

(True/False)

Judy Jones purchases groceries and pop exactly once each week on Sunday evenings. She buys either Coke or Pepsi only and switches from Coke to Pepsi and vice-versa somewhat regularly. Her purchasing behavior of these two drinks is modeled as a Markov system. The number of states required to model the system is

(Multiple Choice)

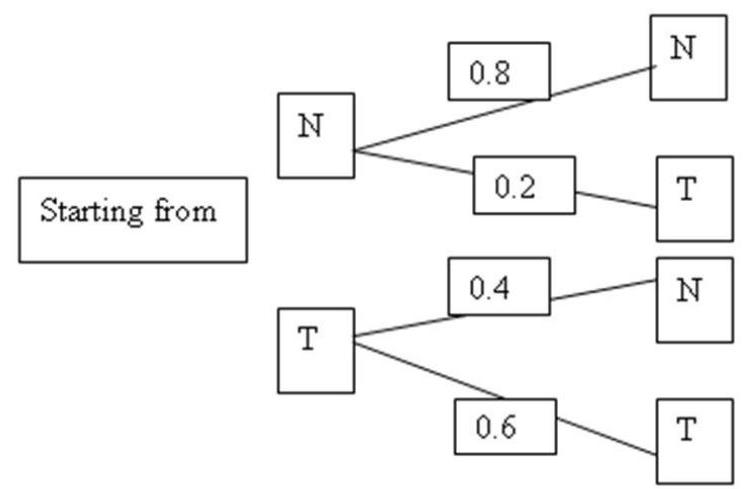

Newsweek and Time are two competing weeklies, each of which tries to keep its readership while at the same time trying to get the other's readers to switch. Among all the households holding yearly subscriptions to Newsweek or Time (but not both), let the two states indicate whether a household is currently a Newsweek or a Time subscriber. The probabilities of switching from one state to the other after one transition (when they renew) are given by the tree diagram. (For simplicity, it may be assumed that all annual subscriptions expire on December 31st and that every household renews for exactly one of the two magazines for one year.) If the current number of households subscribing to Newsweek and Time is 8000 and 4000, respectively, Newsweek would have a larger number of subscribers in the long run.

(True/False)

Judy Jones purchases groceries and pop exactly once each week on Sunday evenings. She buys either Coke or Pepsi only and switches from Coke to Pepsi and vice versa somewhat regularly. Her purchasing behavior of these two drinks is modeled as a Markov system. After querying Judy, a novice student came up with the following transition matrix. This matrix satisfies all the conditions for being a transition matrix.

(True/False)

In Markov chains having absorbing states and transient states, in order to compute probability of absorption in an absorbing state starting from a transient state, the matrix is partitioned into two submatrices.

(True/False)

The columns of the transition probability matrix should add up to 1.0.

(True/False)
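Whether a candidate matrix qualifies as a transition matrix can be checked mechanically. The sketch below assumes the common row convention, in which row i lists the probabilities of moving from state i to each state, so each row must sum to 1; that convention is an assumption here, since the matrices on this page are only shown as images. The function name and the sample matrices are hypothetical.

```python
import math


def is_transition_matrix(P, tol=1e-9):
    """Check squareness, entries in [0, 1], and that every row sums to 1."""
    n = len(P)
    if n == 0 or any(len(row) != n for row in P):
        return False
    for row in P:
        if any(p < 0 or p > 1 for p in row):
            return False
        if not math.isclose(sum(row), 1.0, abs_tol=tol):
            return False
    return True


# Hypothetical examples (assumptions for illustration only).
rows_sum_to_one = [[0.9, 0.1],
                   [0.5, 0.5]]
columns_sum_to_one = [[0.9, 0.5],
                      [0.1, 0.5]]  # columns sum to 1, rows do not

print(is_transition_matrix(rows_sum_to_one))
print(is_transition_matrix(columns_sum_to_one))
```

Under the row convention, the column sums are unconstrained: a valid transition matrix may have columns that sum to more or less than 1.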

XYZ Inc. hires only retired people for its greeter's job. They always start on January 1 and are considered for promotion every January 1st thereafter. On every January 1st, any existing greeter (state G), meaning anyone who was a greeter last year, may be retired (state R), may be dead (state D), may continue as a greeter (state G), or may be promoted to greeter-in-chief (state GIC). Persons in state GIC may continue in it or move to states D or R in one year. Using the data in the transition matrix and using 1 year as the transition time, the probability of a greeter retiring eventually is ________ the probability of a greeter being dead eventually. (Note: a person may retire and then die, and this will still be counted as retired.)

(Multiple Choice)

The states in a Markov system are mutually exclusive and collectively exhaustive.

(True/False)

An absorbing state in a Markov system is one in which the system will get stuck and will not be able to get out of that state.

(True/False)

XYZ Inc. hires only retired people for its greeter's job. They always start on January 1 and are considered for promotion every January 1st thereafter. On every January 1st, any existing greeter (state G), meaning anyone who was a greeter last year, may be retired (state R), may be dead (state D), may continue as a greeter (state G), or may be promoted to greeter-in-chief (state GIC). Persons in state GIC may continue in it or move to states D or R in one year. The table below is the transition matrix; use 1 year as the transition time. The number of transient and absorbing states in this matrix is:

(Multiple Choice)
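Absorbing states can be identified directly from the matrix: a state is absorbing when its probability of staying put is 1. The sketch below uses hypothetical probabilities for the greeter problem (the actual table is only shown as an image); only the state labels G, GIC, R, and D come from the question.

```python
def classify_states(P, labels):
    """Split states into absorbing (P[i][i] == 1) and non-absorbing."""
    absorbing = [labels[i] for i, row in enumerate(P) if row[i] == 1.0]
    non_absorbing = [label for label in labels if label not in absorbing]
    return absorbing, non_absorbing


labels = ["G", "GIC", "R", "D"]
# Hypothetical matrix (assumption); rows and columns follow the order in labels.
P = [[0.5, 0.2, 0.2, 0.1],   # greeter
     [0.0, 0.6, 0.3, 0.1],   # greeter-in-chief
     [0.0, 0.0, 1.0, 0.0],   # retired: never leaves
     [0.0, 0.0, 0.0, 1.0]]   # dead: never leaves

absorbing, non_absorbing = classify_states(P, labels)
print("absorbing:", absorbing)
print("non-absorbing:", non_absorbing)
```

In this setup the non-absorbing states G and GIC are transient, because each of them can reach R or D and can never return afterward.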

Showing 1 - 20 of 52
