Exam 4: Linear Regression With One Regressor


(Requires Appendix material) Consider the sample regression function $Y_i^* = \hat{\beta}_0^* + \hat{\beta}_1^* X_i^* + \hat{u}_i$, where "*" indicates that the variable has been standardized. What are the units of measurement for the dependent and explanatory variable? Why would you want to transform both variables in this way? Show that the OLS estimator for the intercept equals zero. Next prove that the OLS estimator for the slope in this case is identical to the formula for the least squares estimator where the variables have not been standardized, times the ratio of the sample standard deviation of $X$ and $Y$, i.e., $\hat{\beta}_1^* = \hat{\beta}_1 \frac{s_X}{s_Y}$.

(Essay)
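A quick numerical check of both claims, using hypothetical data: after standardizing, $Y_i^* = (Y_i - \bar{Y})/s_Y$ and $X_i^* = (X_i - \bar{X})/s_X$ have mean zero and are measured in standard deviations, so the fitted intercept should be zero and the slope should equal $\hat{\beta}_1 s_X / s_Y$. This is a sketch, not a proof; the `ols` and `standardize` helpers are written only for illustration.

```python
from statistics import stdev

def ols(x, y):
    """Return (intercept, slope) of the OLS fit of y on x."""
    n = len(x)
    x_bar = sum(x) / n
    y_bar = sum(y) / n
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    slope = sxy / sxx
    return y_bar - slope * x_bar, slope

def standardize(v):
    """Subtract the sample mean and divide by the sample standard deviation."""
    m = sum(v) / len(v)
    s = stdev(v)
    return [(vi - m) / s for vi in v]

# Hypothetical illustrative data (not from the exam question).
x = [2.0, 3.0, 5.0, 7.0, 8.0, 11.0]
y = [4.1, 5.9, 9.2, 14.8, 15.1, 21.7]

b0, b1 = ols(x, y)                                   # unstandardized fit
b0_star, b1_star = ols(standardize(x), standardize(y))  # standardized fit

print(f"standardized slope:  {b1_star:.6f}")
print(f"b1 * s_X / s_Y:      {b1 * stdev(x) / stdev(y):.6f}")
print(f"standardized intercept (zero up to rounding): {b0_star:.2e}")
```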


The help function for a commonly used spreadsheet program gives the following definition for the regression slope it estimates: Prove that this formula is the same as the one given in the textbook.

(Essay)


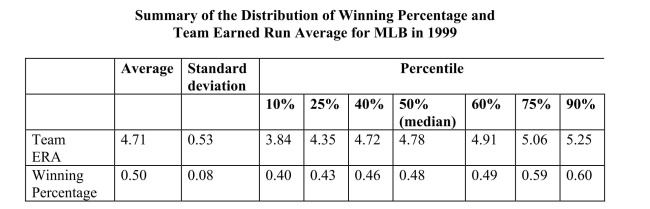

The baseball team nearest to your home town is, once again, not doing well. Given that your knowledge of what it takes to win in baseball is vastly superior to that of management, you want to find out what it takes to win in Major League Baseball (MLB). You therefore collect the winning percentage of all 30 baseball teams in MLB for 1999 and regress the winning percentage on what you consider the primary determinant for wins, which is quality pitching (team earned run average). You find the following information on team performance:

(a) What is your expected sign for the regression slope? Will it make sense to interpret the intercept? If not, should you omit it from your regression and force the regression line through the origin?


(Essay)
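Forcing the line through the origin is a strong restriction even when the intercept itself is not interpretable. The sketch below uses hypothetical ERA and winning-percentage numbers (not the MLB data from the question) that lie exactly on a line with a negative slope and a positive intercept, and compares OLS with an intercept to OLS through the origin, whose slope is $\sum X_i Y_i / \sum X_i^2$.

```python
def ols(x, y):
    """Return (intercept, slope) of the OLS fit of y on x."""
    n = len(x)
    x_bar, y_bar = sum(x) / n, sum(y) / n
    slope = (sum((a - x_bar) * (b - y_bar) for a, b in zip(x, y))
             / sum((a - x_bar) ** 2 for a in x))
    return y_bar - slope * x_bar, slope

def ols_through_origin(x, y):
    """Slope of a regression with no intercept: sum(XY) / sum(X^2)."""
    return sum(a * b for a, b in zip(x, y)) / sum(a * a for a in x)

# Hypothetical data lying exactly on win_pct = 1.0 - 0.1 * ERA.
era = [3.5, 4.0, 4.5, 5.0, 5.5]
win_pct = [0.65, 0.60, 0.55, 0.50, 0.45]

b0, b1 = ols(era, win_pct)
b1_origin = ols_through_origin(era, win_pct)
print(f"with intercept: {b0:.3f} + ({b1:.3f}) * ERA")
print(f"through origin: ({b1_origin:.3f}) * ERA")
```

With these numbers the regression with an intercept recovers the slope of -0.1, while the no-intercept slope comes out positive (about 0.12): dropping the intercept here even flips the sign of the estimated effect.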


Indicate in a scatterplot what the data for your dependent variable and your explanatory variable would look like in a regression with an $R^2$ equal to zero. How would this change if the regression $R^2$ were equal to one?

(Essay)
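The two extreme cases can be checked numerically. In this sketch (hypothetical data, with an illustrative `r_squared` helper computing $1 - SSR/TSS$), points lying exactly on a line with nonzero slope give $R^2 = 1$, while a U-shaped pattern gives $R^2 = 0$: a zero $R^2$ means no *linear* association, not necessarily no relationship at all.

```python
def r_squared(x, y):
    """R^2 = 1 - SSR/TSS from an OLS fit of y on x."""
    n = len(x)
    x_bar, y_bar = sum(x) / n, sum(y) / n
    sxy = sum((a - x_bar) * (b - y_bar) for a, b in zip(x, y))
    sxx = sum((a - x_bar) ** 2 for a in x)
    slope = sxy / sxx
    intercept = y_bar - slope * x_bar
    ssr = sum((b - intercept - slope * a) ** 2 for a, b in zip(x, y))
    tss = sum((b - y_bar) ** 2 for b in y)
    return 1 - ssr / tss

x = [-2, -1, 0, 1, 2]
line_y = [3 + 2 * a for a in x]  # every point on one line
u_y = [a * a for a in x]         # symmetric U shape, no linear trend

print(r_squared(x, line_y))
print(r_squared(x, u_y))
```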


You have analyzed the relationship between the weight and height of individuals. Although you are quite confident about the accuracy of your measurements, you feel that some of the observations are extreme, say, more than two standard deviations above or below the mean. You therefore decide to disregard these individuals. What consequence will this have on the standard deviation of the OLS estimator of the slope?

(Essay)
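A small Monte Carlo sketch of the effect, under stated assumptions: weight is regressed on height, the "extreme" observations are defined on the regressor (heights more than two standard deviations from the mean), heights are held fixed across replications, and the true model and error spread are hypothetical. Under homoskedasticity $\operatorname{var}(\hat{\beta}_1) = \sigma_u^2 / \sum (X_i - \bar{X})^2$, so discarding the most extreme heights shrinks the denominator.

```python
import random
from statistics import stdev

def slope(x, y):
    """OLS slope of y on x."""
    n = len(x)
    x_bar, y_bar = sum(x) / n, sum(y) / n
    return (sum((a - x_bar) * (b - y_bar) for a, b in zip(x, y))
            / sum((a - x_bar) ** 2 for a in x))

rng = random.Random(42)
n, reps = 300, 1000

# Hypothetical heights (inches), drawn once and held fixed across replications.
height = [rng.gauss(67, 4) for _ in range(n)]
h_bar, h_sd = sum(height) / n, stdev(height)
# Assumed trimming rule: keep heights within two standard deviations of the mean.
keep = [i for i, h in enumerate(height) if abs(h - h_bar) <= 2 * h_sd]

full_slopes, trimmed_slopes = [], []
for _ in range(reps):
    # Hypothetical true model: weight = -100 + 4 * height + u, u ~ N(0, 20^2).
    weight = [-100 + 4 * h + rng.gauss(0, 20) for h in height]
    full_slopes.append(slope(height, weight))
    trimmed_slopes.append(slope([height[i] for i in keep],
                                [weight[i] for i in keep]))

print(f"kept {len(keep)} of {n} observations")
print(f"sd of slope, full sample:    {stdev(full_slopes):.4f}")
print(f"sd of slope, trimmed sample: {stdev(trimmed_slopes):.4f}")
```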


Prove that the regression $R^2$ is identical to the square of the correlation coefficient between two variables Y and X. Regression functions are written in a form that suggests causation running from X to Y. Given your proof, does a high regression $R^2$ present supportive evidence of a causal relationship? Can you think of some regression examples where the direction of causality is not clear? Is without a doubt?

(Essay)
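A numerical check with hypothetical data: the $R^2$ from regressing weight on height equals $r_{XY}^2$, and so does the $R^2$ from the reverse regression of height on weight. Because $R^2$ is symmetric in X and Y, it carries no information about the direction of causality. The `r_squared` and `correlation` helpers are illustrative.

```python
import math

def r_squared(x, y):
    """R^2 = 1 - SSR/TSS from an OLS fit of y on x."""
    n = len(x)
    x_bar, y_bar = sum(x) / n, sum(y) / n
    slope = (sum((a - x_bar) * (b - y_bar) for a, b in zip(x, y))
             / sum((a - x_bar) ** 2 for a in x))
    intercept = y_bar - slope * x_bar
    ssr = sum((b - intercept - slope * a) ** 2 for a, b in zip(x, y))
    tss = sum((b - y_bar) ** 2 for b in y)
    return 1 - ssr / tss

def correlation(x, y):
    """Sample correlation coefficient r_XY."""
    n = len(x)
    x_bar, y_bar = sum(x) / n, sum(y) / n
    sxy = sum((a - x_bar) * (b - y_bar) for a, b in zip(x, y))
    sxx = sum((a - x_bar) ** 2 for a in x)
    syy = sum((b - y_bar) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)

# Hypothetical illustrative data.
height = [60, 62, 65, 68, 70, 72, 75]
weight = [115, 120, 140, 150, 165, 170, 190]

r = correlation(height, weight)
print(f"R^2 (weight on height): {r_squared(height, weight):.6f}")
print(f"r^2:                    {r ** 2:.6f}")
print(f"R^2 (height on weight): {r_squared(weight, height):.6f}")
```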


The slope estimator, $\hat{\beta}_1$, has a smaller standard error, other things equal, if

(Multiple Choice)


(Requires Appendix material) At a recent county fair, you observed that at one stand people's weight was forecasted, and you were surprised by the accuracy (within a range). Wondering how the person could have predicted your weight fairly accurately (despite the fact that she did not know about your "heavy bones"), you think about how this could have been accomplished. You remember that medical charts for children contain 5%, 25%, 50%, 75% and 95% lines for a weight/height relationship and decide to conduct an experiment with 110 of your peers. You collect the data and calculate the following sums:

$\sum_{i=1}^{n} Y_i = 17{,}375$, $\sum_{i=1}^{n} X_i = 7{,}665.5$, $\sum_{i=1}^{n} y_i^2 = 94{,}228.8$, $\sum_{i=1}^{n} x_i^2 = 1{,}248.9$, $\sum_{i=1}^{n} x_i y_i = 7{,}625.9$

where $Y_i$ is weight measured in pounds and $X_i$ is height measured in inches. (Small letters refer to deviations from means, as in $y_i = Y_i - \bar{Y}$.) (a) Calculate the slope and intercept of the regression and interpret these.

(Essay)
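The arithmetic for part (a) can be sketched with the deviation-form formulas $\hat{\beta}_1 = \sum x_i y_i / \sum x_i^2$ and $\hat{\beta}_0 = \bar{Y} - \hat{\beta}_1 \bar{X}$. The mapping of the five numbers to $\sum Y_i$, $\sum X_i$, $\sum y_i^2$, $\sum x_i^2$, $\sum x_i y_i$ (with Y = weight, X = height) is an assumption inferred from their magnitudes, since the symbols are lost in some versions of the question.

```python
# Sums from the question; lowercase letters denote deviations from means.
# The label assigned to each number is an assumption (see lead-in).
n = 110
sum_Y = 17_375.0    # sum of weights (pounds)
sum_X = 7_665.5     # sum of heights (inches)
sum_yy = 94_228.8   # sum of squared weight deviations (not needed for part (a))
sum_xx = 1_248.9    # sum of squared height deviations
sum_xy = 7_625.9    # sum of cross-products of deviations

slope = sum_xy / sum_xx                      # pounds per additional inch
intercept = sum_Y / n - slope * sum_X / n    # predicted weight at zero height
print(f"weight_hat = {intercept:.2f} + {slope:.3f} * height")
```

This gives a slope of roughly 6.1 pounds per additional inch of height and an intercept of roughly -268 pounds. The intercept is a prediction at zero height, far outside the range of the data, so it has no sensible literal interpretation.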


To decide whether or not the slope coefficient is large or small,

(Multiple Choice)


In order to calculate the slope, the intercept, and the regression $R^2$ for a simple sample regression function, list the five sums of data that you need.

(Essay)
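One common choice of the five sums is $\sum X_i$, $\sum Y_i$, $\sum X_i^2$, $\sum Y_i^2$ and $\sum X_i Y_i$, together with the sample size $n$; that this is the intended set is an assumption. The sketch converts them to deviation sums and returns slope, intercept, and $R^2$; `fit_from_sums` is a hypothetical helper. The raw-sum shortcut can lose precision through cancellation when the values are large relative to their spread, which is why numerical software usually prefers to work with deviations directly.

```python
def fit_from_sums(n, sum_x, sum_y, sum_xx, sum_yy, sum_xy):
    """Slope, intercept, and R^2 from raw (not demeaned) sums."""
    sxx = sum_xx - sum_x ** 2 / n       # sum of squared deviations of X
    syy = sum_yy - sum_y ** 2 / n       # sum of squared deviations of Y
    sxy = sum_xy - sum_x * sum_y / n    # sum of cross-products of deviations
    slope = sxy / sxx
    intercept = sum_y / n - slope * sum_x / n
    r2 = sxy ** 2 / (sxx * syy)
    return slope, intercept, r2

# Hypothetical illustrative data.
x = [1.0, 2.0, 4.0, 5.0]
y = [2.0, 3.0, 7.0, 8.0]
sums = (len(x), sum(x), sum(y),
        sum(a * a for a in x), sum(b * b for b in y),
        sum(a * b for a, b in zip(x, y)))

slope, intercept, r2 = fit_from_sums(*sums)
print(slope, intercept, r2)
```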


(Requires Appendix material) In deriving the OLS estimator, you minimize the sum of squared residuals with respect to the two parameters $\hat{\beta}_0$ and $\hat{\beta}_1$. The resulting two equations imply two restrictions that OLS places on the data, namely that $\sum_{i=1}^{n} \hat{u}_i = 0$ and $\sum_{i=1}^{n} \hat{u}_i X_i = 0$. Show that you get the same formula for the regression slope and the intercept if you impose these two conditions on the sample regression function.

(Essay)
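A numerical sketch of the equivalence, using hypothetical data: substituting $\hat{u}_i = Y_i - \hat{\beta}_0 - \hat{\beta}_1 X_i$ into the two restrictions gives the linear system $n\hat{\beta}_0 + \hat{\beta}_1 \sum X_i = \sum Y_i$ and $\hat{\beta}_0 \sum X_i + \hat{\beta}_1 \sum X_i^2 = \sum X_i Y_i$. Solving it by Cramer's rule reproduces the textbook deviation-form estimates, and the resulting residuals satisfy both restrictions.

```python
# Hypothetical illustrative data.
x = [1.0, 3.0, 4.0, 6.0, 9.0]
y = [2.0, 2.5, 5.0, 6.5, 8.0]
n = len(x)

# Textbook OLS formulas (deviation form).
x_bar, y_bar = sum(x) / n, sum(y) / n
b1 = (sum((a - x_bar) * (b - y_bar) for a, b in zip(x, y))
      / sum((a - x_bar) ** 2 for a in x))
b0 = y_bar - b1 * x_bar

# The two restrictions written as a 2x2 linear system:
#   n * b0     + sum_x * b1  = sum_y
#   sum_x * b0 + sum_xx * b1 = sum_xy
sum_x, sum_y = sum(x), sum(y)
sum_xx = sum(a * a for a in x)
sum_xy = sum(a * b for a, b in zip(x, y))
det = n * sum_xx - sum_x ** 2
b0_ne = (sum_y * sum_xx - sum_x * sum_xy) / det   # Cramer's rule
b1_ne = (n * sum_xy - sum_x * sum_y) / det

resid = [b - b0 - b1 * a for a, b in zip(x, y)]
print(b0, b0_ne)
print(b1, b1_ne)
# Both restrictions hold up to floating-point rounding.
print(sum(resid), sum(u * a for u, a in zip(resid, x)))
```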


Interpreting the intercept in a sample regression function is

(Multiple Choice)


The OLS slope estimator is not defined if there is no variation in the data for the explanatory variable. You are interested in estimating a regression relating earnings to years of schooling. Imagine that you had collected data on earnings for different individuals, but that all these individuals had completed a college education (16 years of education). Sketch what the data would look like and explain intuitively why the OLS coefficient does not exist in this situation.

(Essay)
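With every $X_i = 16$, each deviation $X_i - \bar{X}$ is zero, so the denominator $\sum (X_i - \bar{X})^2$ of the slope formula is zero: the data form a vertical column of points, and every line through $(16, \bar{Y})$ fits them equally well. The sketch below, with hypothetical earnings figures and an illustrative `ols_slope` helper, makes the failure explicit.

```python
def ols_slope(x, y):
    """OLS slope of y on x; undefined when x has no variation."""
    n = len(x)
    x_bar, y_bar = sum(x) / n, sum(y) / n
    sxx = sum((a - x_bar) ** 2 for a in x)
    if sxx == 0:
        raise ValueError("no variation in X: the OLS slope is undefined")
    return sum((a - x_bar) * (b - y_bar) for a, b in zip(x, y)) / sxx

schooling = [16] * 5                             # everyone has 16 years
earnings = [48_000, 52_500, 61_000, 39_000, 75_000]  # hypothetical

try:
    ols_slope(schooling, earnings)
except ValueError as err:
    print(err)
```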


(Requires Appendix material) A necessary and sufficient condition to derive the OLS estimator is that the following two conditions hold:

Show that these conditions imply that

(Essay)


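For the simple regression model of Chapter 4, you have been given the following data: $\sum_{i=1}^{n} Y_i = 274{,}745.75$; $\sum_{i=1}^{n} X_i = 8{,}248.979$; $\sum_{i=1}^{n} X_i Y_i = 5{,}392{,}705$; $\sum_{i=1}^{n} X_i^2 = 163{,}513.03$; $\sum_{i=1}^{n} Y_i^2 = 179{,}878{,}841.13$. (a) Calculate the regression slope and the intercept.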

(Essay)
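The raw-sum formulas $\hat{\beta}_1 = \frac{n\sum X_i Y_i - \sum X_i \sum Y_i}{n\sum X_i^2 - (\sum X_i)^2}$ and $\hat{\beta}_0 = \bar{Y} - \hat{\beta}_1 \bar{X}$ need the sample size, which the question as shown does not state. This sketch assumes n = 420 and assumes the labels $\sum Y_i$, $\sum X_i$, $\sum X_i Y_i$, $\sum X_i^2$, $\sum Y_i^2$ for the five numbers, in order; with n = 420 the implied means (about 19.64 for X and 654.16 for Y) are consistent with the Chapter 4 California school-district data on student-teacher ratios and test scores, but that identification is an assumption.

```python
# Sums from the question; labels and n = 420 are assumptions (see lead-in).
n = 420
sum_y = 274_745.75      # sum of Y
sum_x = 8_248.979       # sum of X
sum_xy = 5_392_705.0    # sum of X * Y
sum_xx = 163_513.03     # sum of X^2
sum_yy = 179_878_841.13 # sum of Y^2 (not needed for slope or intercept)

slope = (n * sum_xy - sum_x * sum_y) / (n * sum_xx - sum_x ** 2)
intercept = sum_y / n - slope * sum_x / n
print(f"Y_hat = {intercept:.2f} + ({slope:.3f}) * X")
```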


(Requires Calculus) Consider the following model:

Derive the OLS estimator for .

(Essay)

