Exam 16: Markov Processes


Accounts receivable have been grouped into the following states:

State 1: Paid

State 2: Bad debt

State 3: 0-30 days old

State 4: 31-60 days old

Sixty percent of all new bills are paid before they are 30 days old. The remainder go to state 4. Seventy percent of all 30-day-old bills are paid before they become 60 days old. Those not paid are permanently classified as bad debts.

a. Set up the one-month Markov transition matrix.

b. What is the probability that an account in state 3 will be paid?

(Essay)
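One way to check an answer to this question is sketched below. It assumes the "30-day-old bills" in the statement are the accounts in state 4, and that states 1 and 2 are absorbing; the helper names `mat_mul` and `mat_power` are illustrative only. Under that reading, every account is absorbed within two months, so the two-step matrix already gives the probability of eventual payment.

```python
# Assumed one-month transition matrix; rows and columns are ordered as states 1..4.
P = [
    [1.0, 0.0, 0.0, 0.0],  # State 1: Paid (assumed absorbing)
    [0.0, 1.0, 0.0, 0.0],  # State 2: Bad debt (assumed absorbing)
    [0.6, 0.0, 0.0, 0.4],  # State 3: 0-30 days old
    [0.7, 0.3, 0.0, 0.0],  # State 4: 31-60 days old
]


def mat_mul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [
        [sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
        for i in range(len(a))
    ]


def mat_power(m, n):
    """Raise a square matrix to the n-th power by repeated multiplication."""
    size = len(m)
    result = [[1.0 if i == j else 0.0 for j in range(size)] for i in range(size)]
    for _ in range(n):
        result = mat_mul(result, m)
    return result


# After two months no account remains in state 3 or 4 under this matrix.
P2 = mat_power(P, 2)
prob_paid_from_state3 = P2[2][0]  # row for state 3, column for state 1
print(round(prob_paid_from_state3, 4))
```

Under these assumptions the result is 0.6 + 0.4 × 0.7 = 0.88.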


The daily price of a farm commodity is up, down, or unchanged from the day before. Analysts predict that if the last price was down, there is a .5 probability the next will be down and a .4 probability the price will be unchanged. If the last price was unchanged, there is a .35 probability it will be down and a .35 probability it will be up. For prices whose last movement was up, the probabilities of down, unchanged, and up are .1, .3, and .6, respectively.

a. Construct the matrix of transition probabilities.

b. Calculate the steady state probabilities.

(Essay)
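A numerical check for this question can be sketched as follows. The state order (down, unchanged, up) and the names `step` and `pi` are choices made here for illustration; the missing entry in each of the first two rows is filled in so that the row sums to 1. The sketch uses simple power iteration rather than solving the balance equations by hand.

```python
# Transition matrix with states ordered as: down, unchanged, up.
# Entries not stated in the problem are inferred from rows summing to 1.
P = [
    [0.50, 0.40, 0.10],  # last movement: down
    [0.35, 0.30, 0.35],  # last movement: unchanged
    [0.10, 0.30, 0.60],  # last movement: up
]


def step(dist, matrix):
    """Advance a probability row vector by one period: dist * matrix."""
    n = len(matrix)
    return [sum(dist[i] * matrix[i][j] for i in range(n)) for j in range(n)]


# Start from any distribution and iterate until it stops changing.
pi = [1.0, 0.0, 0.0]
for _ in range(200):
    pi = step(pi, P)

print([round(x, 4) for x in pi])
```

Solving the balance equations by hand gives 35/115, 38/115, and 42/115 for down, unchanged, and up (about .304, .330, and .365), which the iteration should reproduce.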


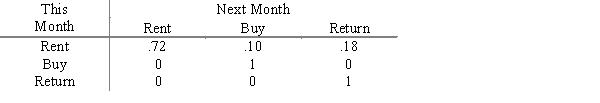

Rent-To-Keep rents household furnishings by the month. At the end of a rental month a customer can: a) rent the item for another month, b) buy the item, or c) return the item. The matrix below describes the month-to-month transition probabilities for 32-inch stereo televisions the shop stocks.

What is the probability that a customer who rented a TV this month will eventually buy it?


(Short Answer)


If a Markov chain has at least one absorbing state, steady-state probabilities cannot be calculated.

(True/False)


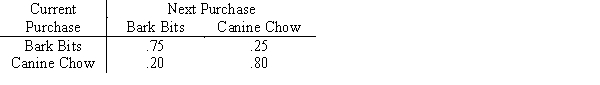

The matrix of transition probabilities below deals with brand loyalty to Bark Bits and Canine Chow dog food.

a. What are the steady state probabilities?

b. What is the probability that a customer will switch brands on the next purchase after a large number of periods?


(Essay)


Bark Bits Company is planning an advertising campaign to raise the brand loyalty of its customers to .80.

a. The former transition matrix is [matrix missing from source]. What is the new one?

b. What are the new steady state probabilities?

c. If each point of market share increases profit by $15,000, what is the most you would pay for the advertising?


(Essay)

