Exam 11: Regression Analysis: Statistical Inference

In regression analysis, extrapolation is performed when you:

(Multiple Choice)

The Durbin-Watson statistic can be used to test for autocorrelation.

(True/False)
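
For readers who want to see the statistic computed, here is a minimal sketch of the usual Durbin-Watson formula, the sum of squared successive differences of the residuals divided by the sum of squared residuals. The function name `durbin_watson` and both residual series are hypothetical and made up for illustration; they do not come from the exam.

```python
def durbin_watson(residuals):
    """Sum of squared successive differences divided by the sum of squared residuals."""
    if len(residuals) < 2:
        raise ValueError("need at least two residuals")
    numerator = sum(
        (residuals[t] - residuals[t - 1]) ** 2 for t in range(1, len(residuals))
    )
    denominator = sum(e ** 2 for e in residuals)
    return numerator / denominator


# Hypothetical residual series (illustrative values only).
alternating = [1.0, -1.0, 1.0, -1.0]  # the sign flips every period
persistent = [1.0, 1.0, -1.0, -1.0]   # neighbouring residuals tend to share a sign

dw_alternating = durbin_watson(alternating)
dw_persistent = durbin_watson(persistent)
print(dw_alternating, dw_persistent)
```

The statistic lies between 0 and 4. Values near 2 suggest little lag-1 autocorrelation, values well below 2 suggest positive autocorrelation, and values well above 2 suggest negative autocorrelation.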

A confidence interval constructed around a point prediction from a regression model is called a prediction interval, because the actual point being estimated is not a population parameter.

(True/False)

The assumptions of regression are: 1) there is a population regression line, 2) the dependent variable is normally distributed, 3) the standard deviation of the response variable remains constant as the explanatory variables increase, and 4) the errors are probabilistically independent.

(True/False)

Suppose you forecast the values of all of the independent variables and insert them into a multiple regression equation and obtain a point prediction for the dependent variable. You could then use the standard error of the estimate to obtain an approximate:

(Multiple Choice)

In simple linear regression, if the error variable $\varepsilon$ is normally distributed, the test statistic for testing $H_0: \beta_1 = 0$ is t-distributed with $n - 2$ degrees of freedom.

(True/False)
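
As a rough illustration of the quantity this question refers to, the sketch below computes the slope estimate, its standard error, and the resulting t-ratio for a simple linear regression, using only the standard library. The function name `slope_t_statistic` and the five data points are assumptions chosen for illustration, not material from the exam.

```python
import math


def slope_t_statistic(x, y):
    """Return (slope, standard error of slope, t-ratio, degrees of freedom)."""
    n = len(x)
    x_bar = sum(x) / n
    y_bar = sum(y) / n
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    b1 = sxy / sxx                      # least squares slope
    b0 = y_bar - b1 * x_bar             # least squares intercept
    sse = sum((yi - (b0 + b1 * xi)) ** 2 for xi, yi in zip(x, y))
    df = n - 2                          # two parameters were estimated
    s_e = math.sqrt(sse / df)           # standard error of estimate
    se_b1 = s_e / math.sqrt(sxx)        # standard error of the slope
    return b1, se_b1, b1 / se_b1, df


# Hypothetical data (illustrative values only).
x = [1, 2, 3, 4, 5]
y = [2, 4, 5, 4, 5]
b1, se_b1, t_ratio, df = slope_t_statistic(x, y)
print(f"slope={b1:.3f}, se={se_b1:.3f}, t={t_ratio:.3f}, df={df}")
```

The t-ratio would then be compared with a t distribution with `df` degrees of freedom; looking up that p-value is left out here to keep the sketch free of third-party libraries.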

In time series data, errors are often not probabilistically independent.

(True/False)

The ANOVA table splits the total variation into two parts. They are the:

(Multiple Choice)

Many statistical packages have three types of equation-building procedures. They are:

(Multiple Choice)

Which of the following is the relevant sampling distribution for regression coefficients?

(Multiple Choice)

When there is a group of explanatory variables that are in some sense logically related, all of them must be included in the regression equation.

(True/False)

Which of the following is not one of the assumptions of regression?

(Multiple Choice)

When determining whether to include or exclude a variable in regression analysis, if the p-value associated with the variable's t-value is above some accepted significance value, such as 0.05, then the variable:

(Multiple Choice)

The objective typically used in the three types of equation-building procedures is to:

(Multiple Choice)

Suppose that one equation has 3 explanatory variables and an F-ratio of 49. Another equation has 5 explanatory variables and an F-ratio of 38. The first equation will always be considered a better model.

(True/False)

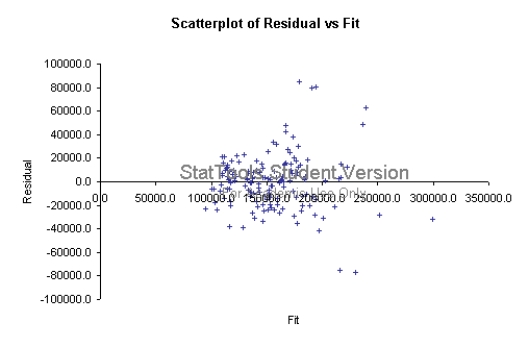

Do you see any problems evident in the plot below of residuals versus fitted values from a multiple regression analysis? Explain your answer.

(Essay)

A forward procedure is a type of equation-building procedure that begins with only one explanatory variable in the regression equation and successively adds one variable at a time until no remaining variables make a significant contribution.

(True/False)

The residuals are observations of the error variable $\varepsilon$. Consequently, the minimized sum of squared deviations is called the sum of squared error, labeled SSE.

(True/False)
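
To make the term concrete, the sketch below fits a least squares line to a small hypothetical data set, computes SSE from the residuals, and compares it with the SSE of a slightly shifted line. The helper names `fit_line` and `sse`, the data, and the 0.1 shift are all assumptions chosen for illustration.

```python
def fit_line(x, y):
    """Least squares intercept and slope for a simple linear regression."""
    n = len(x)
    x_bar = sum(x) / n
    y_bar = sum(y) / n
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    b1 = sxy / sxx
    b0 = y_bar - b1 * x_bar
    return b0, b1


def sse(x, y, b0, b1):
    """Sum of squared residuals for the line b0 + b1 * x."""
    return sum((yi - (b0 + b1 * xi)) ** 2 for xi, yi in zip(x, y))


# Hypothetical data (illustrative values only).
x = [1, 2, 3, 4, 5]
y = [2, 4, 5, 4, 5]

b0, b1 = fit_line(x, y)
sse_least_squares = sse(x, y, b0, b1)
sse_shifted = sse(x, y, b0 + 0.1, b1)  # same slope, intercept nudged upward
print(sse_least_squares, sse_shifted)
```

The shifted line has the larger SSE, which is what minimizing the sum of squared deviations means in practice.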

There is evidence that the regression equation provides little explanatory power when the F-ratio:

(Multiple Choice)

Showing 21 - 40 of 82
